Hey people,

I noticed recently that my network sometimes doesn't work after I reboot my machine. I run it headless with GPU passthrough, but when my GPU VM (Windows 10) boots up onto a screen, I can see that the network is not working, and therefore the Proxmox GUI isn't reachable either.

I also noticed that if I reboot the machine again, or multiple times in some cases, it will eventually work. I am clueless.

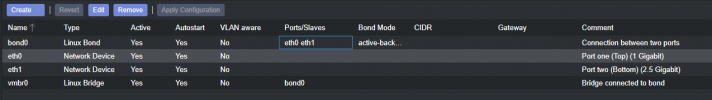

My machine has 2 network ports, joined in "bond0" (Linux bond) in "active-backup" mode between "eth0" and "eth1", with "eth1" as the bond primary since it is a 2.5 Gb/s port.

I tried to mess around with my network config file but I can't seem to find the issue. I will attach the network file and a screenshot of the network options in the GUI.

FYI: I set everything to DHCP and assigned a static IP to the machine in my pfSense router.

Thanks in advance for any help, this is extremely frustrating.

Greetings
