Hi community,

I have built a hyperconverged ProxMox cloud cluster as an experimental project.

Features:

- compute nodes: 1blu.de VPS at 1 Euro/month each

- storage node: 1blu.de, 1 TB, 9 Euro/month

- the LAN sits behind Vodafone cable internet with IPv6 DS-Lite (VF NetBox)

- the ProxMox cluster uses the public internet for cluster communication

- the cluster can only run LXC containers (no VMX flag, so no nested virtualization)

- containers can move between compute nodes

- containers can fail over (restart mode)

- the compute nodes share Ceph storage built from all local disk space left over after installing ProxMox (everything except the root filesystem)

- Ceph also runs over the public internet; each compute node is a Ceph manager and runs a Ceph monitor alongside its OSD

- CephFS is available on the same Ceph cluster as the RBD pool

- containers can use Ceph RBD, local storage or the 1 TB NFS NAS (nfs-nas) for their disk images

- containers can use Google Drive (1 PB), mounted with rclone and shared via NFS, for dumps, container templates, files, databases etc.

- containers can join a software-defined (SDN) VXLAN spanning containers on arbitrary compute nodes and the WireGuard gateway, to communicate securely with the LAN

- containers can reach the public internet directly via their compute node (NAT)

- the LAN uses a GL.iNet Mango router as the WireGuard gateway into the ProxMox cluster

- LAN clients get their route into the cluster from the DHCP server on the GL.iNet Mango router
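The VXLAN part lives in the SDN section of the UI; the same steps can be sketched with `pvesh` against the SDN API. A minimal sketch, assuming the SDN package `libpve-network-perl` is installed (SDN was still a tech preview in ProxMox 7); the zone name `vxz`, the VNet name `vnet1`, the VXLAN ID 100000 and the peer addresses are placeholders:

```shell
#!/bin/sh
set -e
# Sketch only: a VXLAN zone whose peers are the public IPs of the three
# compute nodes (documentation placeholder addresses).
pvesh create /cluster/sdn/zones --zone vxz --type vxlan \
    --peers 203.0.113.11,198.51.100.12,192.0.2.13
# A VNet in that zone; for VXLAN zones the tag is the VXLAN network ID.
pvesh create /cluster/sdn/vnets --vnet vnet1 --zone vxz --tag 100000
# Apply the pending SDN configuration on all nodes.
pvesh set /cluster/sdn
```

Containers then get a NIC on bridge `vnet1` through their network settings.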

Setup:

- prepare the compute nodes: boot the ProxMox 7 ISO, enter the public IPs, netmasks, hostnames, your email address etc., and select the whole disk for ProxMox

- connect via VNC and edit /etc/network/interfaces: add the MAC address of the physical NIC to the bridge, because the hoster's anti-spoofing filter otherwise makes your ProxMox nodes unreachable (see the ProxMox 7 upgrade notes). After that, reboot and you can reach the ProxMox UI
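For reference, the ProxMox 7 upgrade notes describe this as the `hwaddress` option on the bridge, set to the MAC of the physical NIC, for hosters that drop traffic from unknown MAC addresses. A sketch, assuming the NIC is called `ens18` and the bridge `vmbr0` (check your own names); all addresses are placeholders:

```shell
# Print the MAC address of the physical NIC (interface name is an assumption).
cat /sys/class/net/ens18/address

# Then add it to the bridge stanza in /etc/network/interfaces, e.g.:
#
#   auto vmbr0
#   iface vmbr0 inet static
#       address 203.0.113.11/24
#       gateway 203.0.113.1
#       bridge-ports ens18
#       bridge-stp off
#       bridge-fd 0
#       hwaddress aa:bb:cc:dd:ee:ff    # <- the MAC printed above
#
# Apply it (ifupdown2 is the default on ProxMox 7), or simply reboot:
ifreload -a
```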

- enable IP forwarding on all compute nodes
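A minimal way to do this persistently via sysctl; the file name `99-ip-forward.conf` is an arbitrary choice:

```shell
# Persist IPv4 forwarding (needed so containers can be NATed to the internet).
echo 'net.ipv4.ip_forward = 1' > /etc/sysctl.d/99-ip-forward.conf
# Load all sysctl configuration files now, without a reboot.
sysctl --system
# Verify: prints 1 when forwarding is enabled.
cat /proc/sys/net/ipv4/ip_forward
```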

- set up the ProxMox 7 repositories, then update and upgrade
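A sketch, assuming you run without a subscription and therefore use the `pve-no-subscription` repository for ProxMox 7 (Debian 11 "bullseye"); the name of the new list file is arbitrary:

```shell
# Add the no-subscription repository.
echo 'deb http://download.proxmox.com/debian/pve bullseye pve-no-subscription' \
    > /etc/apt/sources.list.d/pve-no-subscription.list
# Comment out the enterprise repository, which needs a subscription key.
sed -i 's/^deb/# deb/' /etc/apt/sources.list.d/pve-enterprise.list
# Refresh package lists and upgrade everything.
apt update && apt full-upgrade -y
```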

- install Ceph (v16.2, Pacific) on all compute nodes
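From the shell this is `pveceph install`. A sketch, assuming your ProxMox 7.x release offers the `--version` option for selecting the Ceph release (check `pveceph help install` on your version):

```shell
# Run on every compute node: sets up the Ceph repository and packages.
# "pacific" is Ceph 16.2.
pveceph install --version pacific
# Confirm the installed release.
ceph --version
```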

- create the cluster and join all compute nodes to it
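The CLI equivalent with `pvecm`; the cluster name `cloudcluster` and the IP address are placeholders:

```shell
# On the first node: create the cluster.
pvecm create cloudcluster

# On each further node: join via the first node's public IP;
# you are prompted for that node's root password.
pvecm add 203.0.113.11

# On any node: check quorum and membership.
pvecm status
```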

- install fail2ban on all compute nodes (optional):

```shell
apt install fail2ban
```

- remove pve/data from all compute nodes via the shell:

```shell
lvremove pve/data
```

- create a new pve/data volume from all remaining free space. This will be the compute node's OSD, so with 3 compute nodes we get 3 OSDs:

```shell
lvcreate -n data -l100%FREE pve
```

- ProxMox no longer supports partitions as the basis for OSDs, so we have to force it, and we cannot use the ProxMox UI to create our OSDs.

- now the trickery begins: ProxMox does not let us create our OSDs the easy way, because we do NOT have a separate local network for Ceph traffic. Using the public internet for Ceph traffic is not recommended, BUT we have no choice, since our compute nodes have no second NIC. So we force Ceph to use the public internet by editing /etc/pve/ceph.conf:
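As a hedged sketch (not necessarily the edit used for this cluster): one way to let nodes in unrelated public subnets share a Ceph cluster is to list each node's public IP as a /32 in `public_network`, which Ceph accepts as a comma-separated list of subnets. The addresses are documentation placeholders, and whether every ProxMox tool accepts such a layout is an assumption to verify:

```shell
# Hypothetical excerpt of /etc/pve/ceph.conf (placeholder addresses):
#
#   [global]
#       public_network = 203.0.113.11/32, 198.51.100.12/32, 192.0.2.13/32
#
# No cluster_network is set, so OSD replication traffic also uses the
# public network. /etc/pve is replicated by the ProxMox cluster file
# system, so the edit reaches every node.

# Then, on each compute node, create a Ceph monitor and a Ceph manager:
pveceph mon create
pveceph mgr create

# Check cluster health from any node:
ceph -s
```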

This is not recommended, as it creates vast amounts of traffic on our production network (which is also the public internet between our compute nodes). Remember, this is an experimental project!

- Ceph complained about not finding ceph.keyring, so I ran this on every compute node to create one:

```shell
ceph auth get-or-create client.bootstrap-osd -o /var/lib/ceph/bootstrap-osd/ceph.keyring
```

- force-create a new Ceph OSD on our newly created pve/data logical volume:

```shell
ceph-volume lvm create --data /dev/pve/data
```

With the 1€ VPS I used as compute nodes, I got OSDs of 80+ GB each.

- using the ProxMox UI at the Datacenter level, I created a RADOS block device (RBD) pool spanning all compute nodes' OSDs

We have just created the green Ceph RBD 'network' shown in the overview, between 3 VPS, using the public internet for Ceph traffic.

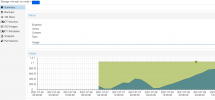

Using the UI you can watch Ceph juggling blocks between your compute nodes. Not recommended for heavy use, as iowait will kill you, but it's fun to watch...

- --- we just created a hyperconverged ProxMox Ceph cluster in the cloud using 3 VPS ---
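A back-of-the-envelope check of the resulting capacity, assuming 3 OSDs of about 80 GB and the ProxMox default pool size of 3 replicas (the pool size is an assumption; check your pool settings). With size 3, every block is stored once on each node, so usable space is roughly one OSD's worth, before Ceph's full-ratio safety margins:

```shell
#!/bin/sh
# Rough capacity estimate for a replicated Ceph pool.
osd_count=3       # one OSD per compute node
osd_size_gb=80    # approximate OSD size on the 1 EUR VPS
replicas=3        # assumed pool "size" (ProxMox default)

raw_gb=$((osd_count * osd_size_gb))
usable_gb=$((raw_gb / replicas))

echo "raw: ${raw_gb} GB, usable before full ratios: ${usable_gb} GB"
```

This prints `raw: 240 GB, usable before full ratios: 80 GB`; adding a fourth node would raise raw capacity without changing the number of copies per block.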

- you can also create metadata servers (MDS) on each compute node via the Ceph section of the ProxMox UI, and then create a CephFS on the same Ceph cluster (optional). The RBD pool is thin-provisioned storage for deploying containers whose disks are automatically replicated across all included nodes at the block level. You can also create linked clones of containers on RBD that use only a minimal amount of storage for their individual configuration and data, and share the common blocks with the master container (template). All distributed across your 3-buck cluster in the cloud!
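The same steps from the shell, as a sketch; the CephFS name and the container IDs 100/101 and hostname are placeholders:

```shell
# On each compute node: create a metadata server (one becomes active,
# the others stand by).
pveceph mds create

# On one node: create the CephFS (with its own data and metadata pools)
# and register it as ProxMox storage.
pveceph fs create --name cephfs --add-storage

# Linked clones: turn a container on RBD into a template, then clone it.
# Clones of a template are linked clones unless --full is given.
pct template 100
pct clone 100 101 --hostname web1
```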

- set up the storage node (I chose the 1 TB variant for 9 €) with the NFS kernel server and rclone
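A sketch, assuming a Debian-based storage node; the remote name `gdrive` is a placeholder you choose during `rclone config`:

```shell
# Install the NFS server and rclone.
apt update
apt install -y nfs-kernel-server rclone
# Interactively create a Google Drive remote (e.g. named "gdrive").
rclone config
```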

- on the storage node, set up an rclone mount of your Google Drive (optional). I use VFS caching and let rclone use all of the 1 TB storage as its cache, but you can restrict rclone as you like. My Google Drive, shared across all compute nodes, is lightning fast.

- unfortunately, I did not manage to put ISOs and container disks on that mount: some filesystem features, such as truncating files and random access to blocks within a file, do not seem to be supported by an rclone mount.

- I shared the rest of the 1 TB as an NFS export with all compute nodes (shown as nfs-nas). It can also be used for running containers with thin provisioning, and performance is reasonable. We have just created the violet SAN shown in the overview.
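A sketch of the exports and the matching ProxMox storage entries. The paths `/srv/nfs-nas` and `/mnt/gdrive`, the storage IDs and all IP addresses are placeholders. Only the compute nodes' public IPs may mount, and the rclone FUSE mount gets an explicit `fsid`, because the NFS server cannot derive a stable ID for a FUSE filesystem:

```shell
# On the storage node: export the local NAS directory and the rclone mount.
cat >> /etc/exports <<'EOF'
/srv/nfs-nas 203.0.113.11(rw,sync,no_subtree_check,no_root_squash) 198.51.100.12(rw,sync,no_subtree_check,no_root_squash) 192.0.2.13(rw,sync,no_subtree_check,no_root_squash)
/mnt/gdrive  203.0.113.11(rw,sync,no_subtree_check,no_root_squash,fsid=1) 198.51.100.12(rw,sync,no_subtree_check,no_root_squash,fsid=1) 192.0.2.13(rw,sync,no_subtree_check,no_root_squash,fsid=1)
EOF
# Re-read /etc/exports.
exportfs -ra

# On one compute node: add both exports as cluster-wide storage.
# rootdir = container volumes; backup/vztmpl = dumps and templates.
pvesm add nfs nfs-nas --server 198.51.100.50 --export /srv/nfs-nas \
    --content images,rootdir
pvesm add nfs gdrive --server 198.51.100.50 --export /mnt/gdrive \
    --content backup,vztmpl
```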

- I have rclone clean the VFS cache after 24 h. Here is the systemd unit for rclone on the storage node:
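As an illustration only (not the original unit), a minimal sketch could look like this. The unit name, mount point, cache directory, 900G cache cap and remote name `gdrive` are placeholders. `--vfs-cache-max-age 24h` evicts cached files not accessed for 24 h, and `--allow-other` lets the NFS server access the FUSE mount:

```shell
cat > /etc/systemd/system/rclone-gdrive.service <<'EOF'
[Unit]
Description=rclone mount of Google Drive
Wants=network-online.target
After=network-online.target

[Service]
# rclone mount notifies systemd once the mount is ready.
Type=notify
ExecStartPre=/bin/mkdir -p /mnt/gdrive
ExecStart=/usr/bin/rclone mount gdrive: /mnt/gdrive \
    --config /root/.config/rclone/rclone.conf \
    --allow-other \
    --vfs-cache-mode full \
    --vfs-cache-max-age 24h \
    --vfs-cache-max-size 900G \
    --cache-dir /var/cache/rclone
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
systemctl daemon-reload
systemctl enable --now rclone-gdrive.service
```

When systemd stops the service, rclone receives SIGTERM and unmounts the filesystem itself.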

- you can watch rclone filling and cleaning the cache in the ProxMox UI:

- --- we just created a shitty 12€ ProxMox Cluster with 1 TB shared storage and 1 PB secondary/optional storage ---
