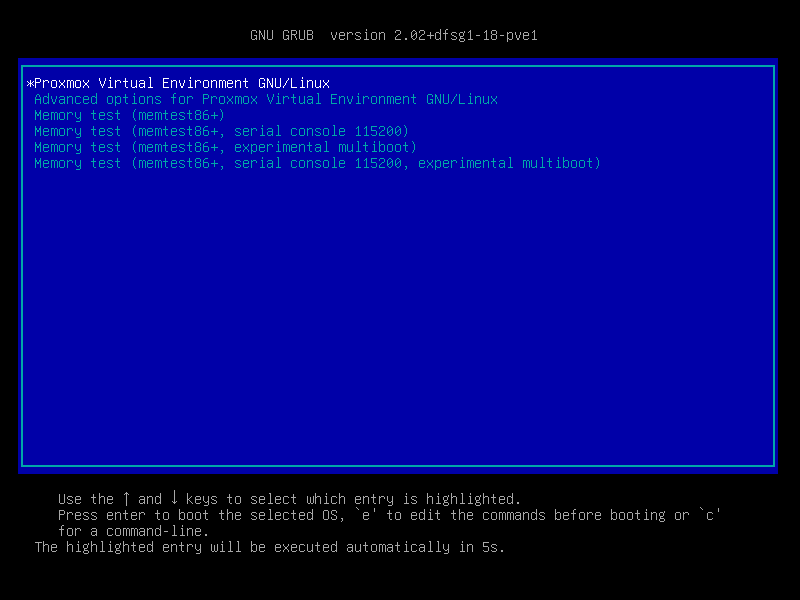

Oh, and in case this is useful, I have captures of my boot attempts with the following kernel parameters:

boot_nomodeset

- https://streamable.com/1js6i3

boot_nomodeset_zfs-autoimport-disable

- https://streamable.com/t7q3hg

boot_nomodeset_zfs-autoimport-disable-1 (with HDDs unplugged)

- https://streamable.com/wsfbjk

boot_rootdelay-10_nomodeset_zfs-autoimport-disable

- https://streamable.com/az5hpc
