Hey,

I've just installed Proxmox VE 8.0.2 on two different types of PC and I'm having the same issue.

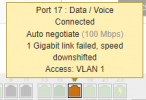

Both are stuck at a 100Mb/s link speed, even though the NIC supports and advertises 1000baseT/Full (see the ethtool output below). I'm not sure where to go from here.

I did fresh installs just last week and made sure both are up to date.

Code:

root@TestLabServer:~# ethtool eno1
Settings for eno1:
    Supported ports: [ TP ]
    Supported link modes:   10baseT/Half 10baseT/Full
                            100baseT/Half 100baseT/Full
                            1000baseT/Full
    Supported pause frame use: No
    Supports auto-negotiation: Yes
    Supported FEC modes: Not reported
    Advertised link modes:  10baseT/Half 10baseT/Full
                            100baseT/Half 100baseT/Full
                            1000baseT/Full
    Advertised pause frame use: No
    Advertised auto-negotiation: Yes
    Advertised FEC modes: Not reported
    Speed: 100Mb/s
    Duplex: Full
    Auto-negotiation: on
    Port: Twisted Pair
    PHYAD: 1
    Transceiver: internal
    MDI-X: on (auto)
    Supports Wake-on: pumbg
    Wake-on: g
    Current message level: 0x00000007 (7)
                           drv probe link
    Link detected: yes
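
To make the mismatch in that output explicit, here is a minimal Python sketch that compares the negotiated speed with the fastest advertised link mode. The function name `link_speed_report` and the returned keys are my own, hypothetical choices, not part of ethtool or Proxmox. It only parses text in the format shown above, so treat it as a rough check rather than a proper diagnostic.

```python
import re


def link_speed_report(ethtool_output: str) -> dict:
    """Compare the negotiated speed with the fastest advertised link mode.

    Hypothetical helper: it assumes the plain-text layout that
    `ethtool <iface>` printed above and nothing more.
    """
    advertised = []
    in_advertised = False
    for line in ethtool_output.splitlines():
        stripped = line.strip()
        if stripped.startswith("Advertised link modes:"):
            # Start of the section; keep only the part after the label.
            in_advertised = True
            stripped = stripped.split(":", 1)[1]
        elif ":" in stripped:
            # Any other "Key: value" line ends the section.
            in_advertised = False
        if in_advertised:
            # Continuation lines have no colon, e.g. "1000baseT/Full".
            advertised += [int(m) for m in re.findall(r"(\d+)base", stripped)]

    match = re.search(r"^\s*Speed:\s*(\d+)Mb/s", ethtool_output, re.MULTILINE)
    negotiated = int(match.group(1)) if match else None
    fastest = max(advertised) if advertised else None
    return {
        "negotiated": negotiated,
        "fastest_advertised": fastest,
        "below_max": (
            negotiated is not None
            and fastest is not None
            and negotiated < fastest
        ),
    }
```

Fed the output above (for example via `subprocess.run(["ethtool", "eno1"], capture_output=True, text=True).stdout`), it reports a negotiated speed of 100 against a fastest advertised mode of 1000, so `below_max` is `True`.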

Code:

root@TestLabServer:~# pveversion -v
proxmox-ve: 8.0.2 (running kernel: 6.2.16-10-pve)
pve-manager: 8.0.4 (running version: 8.0.4/d258a813cfa6b390)
pve-kernel-6.2: 8.0.5
proxmox-kernel-helper: 8.0.3
proxmox-kernel-6.2.16-10-pve: 6.2.16-10
proxmox-kernel-6.2: 6.2.16-10
proxmox-kernel-6.2.16-8-pve: 6.2.16-8
pve-kernel-6.2.16-3-pve: 6.2.16-3
