

It depends: /tmp is usually a tmpfs backed by RAM, so it's not the best place to store temporary copies of backups anyhow. If it really is on ZFS, you can set the ACL behaviour via ZFS properties (see `man zfsprops`). If it is a tmpfs, I suggest setting vzdump's tmpdir to a new dataset that you create with the appropriate ZFS properties set.

Hi Fabian, it seems /tmp really is on ZFS (rpool/ROOT/pve-1), which is how the system was set up by the Proxmox installation ISO. But I take your point about /tmp normally being a tmpfs, which would be problematic. I might create /var/tmp/vzdump (also on ZFS) and switch to it, unless someone has a better suggestion.

I'll have to figure out how to enable ACLs (zfs set aclmode?) since that isn't something I've ever had to fiddle with...

Thanks for the help!
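A minimal sketch of Fabian's suggestion, assuming a hypothetical dataset rpool/vzdump-tmp mounted at its default location /rpool/vzdump-tmp: create the dataset with `acltype=posixacl` (plus `xattr=sa`, which is commonly paired with it), then point the `tmpdir` option in /etc/vzdump.conf at it. The ZFS commands are shown as comments because they change the host; `set_vzdump_tmpdir` is an illustrative helper, not part of Proxmox.

```shell
# Hypothetical dataset name; adjust to your pool layout:
#   zfs create -o acltype=posixacl -o xattr=sa rpool/vzdump-tmp
#   zfs get acltype,xattr rpool/vzdump-tmp

# set_vzdump_tmpdir CONF DIR  (hypothetical helper)
# Replaces an active "tmpdir:" line in CONF, or appends one if there is none.
# Commented-out lines such as "#tmpdir: DIR" are left untouched, and running
# it twice never produces a second tmpdir line.
set_vzdump_tmpdir() {
    local conf="$1" dir="$2"
    if grep -qE '^[[:space:]]*tmpdir:' "$conf" 2>/dev/null; then
        sed -i -E "s|^[[:space:]]*tmpdir:.*|tmpdir: ${dir}|" "$conf"
    else
        printf 'tmpdir: %s\n' "$dir" >> "$conf"
    fi
}

# Usage (as root on the PVE host):
#   set_vzdump_tmpdir /etc/vzdump.conf /rpool/vzdump-tmp
```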


All this convo helped me fix my problem.


The CT disks are running on LVM on an iSCSI share, and I'm trying to back up to an NFS share. Suspend mode backups failed, but stop mode worked.

I kept getting the rsync failure with exit code 23. I do have a temp dir for backups configured in /etc/vzdump.conf.

@fabian's comment about ACLs helped. I ran `mount` to check ACL support on the temp dir, and it wasn't supported:

```
tank-mirror-1/backup-scratch on /backup-scratch type zfs (rw,xattr,noacl)
```

I added ACL support and my suspend mode backups are working perfectly now:

```
zfs set acltype=posixacl tank-mirror-1/backup-scratch
```

Thanks All!
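The `mount` check above can be scripted. A minimal sketch, assuming the standard /proc/self/mounts layout (device, mountpoint, fstype, options, dump, pass); the helper name `acl_mount_status` is hypothetical. Note that how enabled ACLs appear varies by filesystem: ZFS lists `noacl` or `posixacl`, while ext4 typically enables ACLs by default without listing them, so "unknown" is not a failure. For ZFS, `zfs get acltype <dataset>` gives the authoritative answer.

```shell
# acl_mount_status MOUNTPOINT [MOUNTS_FILE]  (hypothetical helper)
# Finds MOUNTPOINT in a mounts table (default /proc/self/mounts) and prints
# "noacl", "posixacl", "acl" or "unknown" based on its mount options.
# If the mountpoint appears more than once, the last (topmost) entry wins.
# Returns 1 if the mountpoint is not listed at all.
acl_mount_status() {
    local target="$1" mounts="${2:-/proc/self/mounts}" opts
    opts=$(awk -v t="$target" '$2 == t { o = $4 } END { print o }' "$mounts")
    [ -n "$opts" ] || return 1
    case ",${opts}," in
        *,noacl,*)    echo noacl ;;
        *,posixacl,*) echo posixacl ;;
        *,acl,*)      echo acl ;;
        *)            echo unknown ;;
    esac
}

# Usage: acl_mount_status /backup-scratch
```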


Hello guys,

I have a similar problem and I can't solve it. Since upgrading to Proxmox 7, an LXC container can no longer be backed up via suspend mode. Only stop mode works. The backup target is an NFS share. Setting the container mount point options for ACLs to Disabled doesnt work for me.

I ran the rsync command manually and found the following in the progress log:

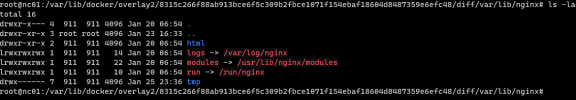

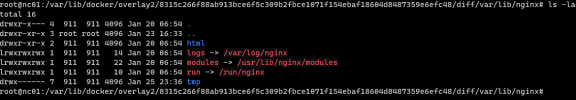

The directory with the errors looks like this:

Does anyone here have an idea?

Kind regards

Lucas

I have a similar problem and I can't solve it. Since upgrading to Proxmox 7, an LXC container can no longer be backed up via suspend mode. Only stop mode works. The backup target is an NFS share. Setting the container mount point options for ACLs to Disabled doesnt work for me.

INFO: Starting Backup of VM 103 (lxc)

INFO: Backup started at 2022-03-06 04:23:53

INFO: status = running

INFO: CT Name: nc01

INFO: including mount point rootfs ('/') in backup

INFO: mode failure - some volumes do not support snapshots

INFO: trying 'suspend' mode instead

INFO: backup mode: suspend

INFO: ionice priority: 7

INFO: CT Name: nc01

INFO: including mount point rootfs ('/') in backup

INFO: starting first sync /proc/1053444/root/ to /tmp/vzdumptmp304152_103/

ERROR: Backup of VM 103 failed - command 'rsync --stats -h -X -A --numeric-ids -aH --delete --no-whole-file --sparse --one-file-system --relative '--exclude=/tmp/?*' '--exclude=/var/tmp/?*' '--exclude=/var/run/?*.pid' /proc/1053444/root//./ /tmp/vzdumptmp304152_103/' failed: exit code 23I ran the rsync command manually and found the following in the progress log:

The directory with the errors looks like this:

Does anyone here have an idea?

Kind regards

Lucas
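vzdump only reports rsync's exit status. The command it runs includes `-A` (preserve ACLs) and `-X` (preserve xattrs), so both a temp dir without ACL support and unreadable xattrs on the source can end in a partial transfer. A small lookup sketch, with descriptions taken from the EXIT VALUES section of rsync(1) (only a subset is listed; the helper name is hypothetical):

```shell
# rsync_exit_meaning CODE  (hypothetical helper)
# Prints the rsync(1) description for a subset of its documented exit values.
rsync_exit_meaning() {
    case "$1" in
        0)  echo "Success" ;;
        1)  echo "Syntax or usage error" ;;
        11) echo "Error in file I/O" ;;
        12) echo "Error in rsync protocol data stream" ;;
        23) echo "Partial transfer due to error" ;;
        24) echo "Partial transfer due to vanished source files" ;;
        30) echo "Timeout in data send/receive" ;;
        *)  echo "Unlisted code $1 (see rsync(1), EXIT VALUES)" ;;
    esac
}

# Usage:
#   rsync_exit_meaning 23   # prints: Partial transfer due to error
```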

It seems like reading some overlayfs-related xattrs fails; can you check whether it works from within the container?

I tried it again in the container. The same error comes up:

```
root@nc01:~# rsync --stats -h -X -A --numeric-ids -aH --delete --no-whole-file --sparse --one-file-system --relative /var/lib/docker/ /tmp/
rsync: [sender] get_xattr_data: lgetxattr("/var/lib/docker/overlay2/39d58a269d66e14e42884edcc3c523d2f3d87400ccfa84704b74dd6431213495/diff/var/lib/nginx/run","user.overlay.origin",0) failed: No data available (61)
rsync: [sender] get_xattr_data: lgetxattr("/var/lib/docker/overlay2/39d58a269d66e14e42884edcc3c523d2f3d87400ccfa84704b74dd6431213495/diff/var/lib/nginx/logs","user.overlay.origin",0) failed: No data available (61)
rsync: [sender] get_xattr_data: lgetxattr("/var/lib/docker/overlay2/39d58a269d66e14e42884edcc3c523d2f3d87400ccfa84704b74dd6431213495/diff/var/lib/nginx/modules","user.overlay.origin",0) failed: No data available (61)
IO error encountered -- skipping file deletion
```
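With many layers the error list gets long. A minimal sketch that pulls the affected path and xattr name out of rsync's `lgetxattr(...) failed` lines, assuming paths contain no double quotes; the helper name is hypothetical. rsync prints these messages on stderr, so redirect it (`2>&1`) when piping.

```shell
# xattr_failures [LOGFILE...]  (hypothetical helper)
# Reads rsync output from the given files or stdin and prints one
# "PATH<TAB>XATTR" pair per failed lgetxattr call, de-duplicated.
# Splitting on '"' makes field 2 the path and field 4 the xattr name.
xattr_failures() {
    awk -F'"' '/get_xattr_data: lgetxattr\(/ && /failed/ { print $2 "\t" $4 }' "$@" | sort -u
}

# Usage:
#   rsync -aH -X -A --one-file-system /var/lib/docker/ /tmp/test/ 2>&1 | xattr_failures
```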

I set up a new LXC container (Ubuntu 20.04 LTS) and just copied the docker-compose.yaml over. I keep getting the same error when backing up in suspend mode. I am using the Nextcloud Docker container from linuxserver. It was still working before upgrading to Proxmox 7.0. If I shut down the Docker containers inside the LXC container, the backup works.

And what happens if you try to list and read the xattrs manually, e.g., using `xattr`? Either those files are broken, or the FS mounted there has some issue with xattrs.
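Fabian suggests the `xattr` tool; the sketch below swaps in `getfattr` from the attr package instead. It mirrors what rsync does: list the attribute names first, then read each one, never following symlinks (`-h`, the same behaviour as `lgetxattr`). A name that is listed but cannot be read corresponds to rsync's "No data available (61)". The helper name `check_xattrs` is hypothetical.

```shell
# check_xattrs PATH  (hypothetical helper; requires getfattr from "attr")
check_xattrs() {
    local path="$1" name
    # List names only (no -d), all namespaces (-m -), without following symlinks (-h).
    getfattr -h --absolute-names -m - "$path" 2>/dev/null |
        grep -v -e '^#' -e '^$' |
        while IFS= read -r name; do
            # Read each listed attribute individually, again without following symlinks.
            if getfattr -h --absolute-names -n "$name" "$path" >/dev/null 2>&1; then
                printf 'ok      %s\n' "$name"
            else
                printf 'FAILED  %s\n' "$name"
            fi
        done
}

# Usage inside the container (<layer-id> is a placeholder):
#   check_xattrs /var/lib/docker/overlay2/<layer-id>/diff/var/lib/nginx/run
```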

I have been observing the problem for some time now.

It's not the ACLs: I don't use them, and disabling ACLs on the root FS doesn't solve the problem.

Still, 3 out of 5 backup runs succeed and 2 out of 5 fail.

Still no clue.

Is this really not a common issue? I didn't set up any fancy tinker shit; it's all pretty standard. ;-)


I don't want to revive this topic if there's no reason to, but I think there are still some open questions.


I have now run into the same problem within 2 days on 2 different hosts, both with a similar setup.

On both I have Portainer deployed and am using a variety of Docker containers. I manually ran the rsync command and grepped for "error"; here is the output:

```
root@virt-01 ~ # rsync --stats -h -X -A --numeric-ids -aH --delete --no-whole-file --sparse --one-file-system --relative '--exclude=/tmp/?*' '--exclude=/var/tmp/?*' '--exclude=/var/run/?*.pid' /proc/3606348/root//./ /tmp/vzdumptmp3691100_100/ -v --progress | grep error
rsync: [sender] get_xattr_data: lgetxattr("/proc/3606348/root/var/lib/docker/overlay2/0d184cf8b54f102af6476f04488b7d4e3c88fa502b91f1064719b42be66b57fd/diff/var/lib/nginx/logs","user.overlay.origin",0) failed: No data available (61)
rsync: [sender] get_xattr_data: lgetxattr("/proc/3606348/root/var/lib/docker/overlay2/0d184cf8b54f102af6476f04488b7d4e3c88fa502b91f1064719b42be66b57fd/diff/var/lib/nginx/run","user.overlay.origin",0) failed: No data available (61)
rsync: [sender] get_xattr_data: lgetxattr("/proc/3606348/root/var/lib/docker/overlay2/0d184cf8b54f102af6476f04488b7d4e3c88fa502b91f1064719b42be66b57fd/diff/var/lib/nginx/modules","user.overlay.origin",0) failed: No data available (61)
IO error encountered -- skipping file deletion
rsync error: some files/attrs were not transferred (see previous errors) (code 23) at main.c(1333) [sender=3.2.3]
```

On both hosts I deployed a container that uses nginx, so maybe that's a lead? I'm out of luck on this; I just want it fixed and want to contribute my findings.

Dear bennetgallein,

xattr support, or the lack of it, is a clear cause of these symptoms. In my case, however, it's a TurnKey container with Nextcloud and full-text search enabled via Elasticsearch (and ReadonlyREST). When a backup fails, I have to reboot the container, then trigger a file scan and then indexing for full-text search within Nextcloud.

This reliably resolves the issue for me, and still leaves me clueless, as I cannot attribute a cause.

So we basically have two parties here: the party of reason, with xattr-related errors in temp file creation, and the party of the crazy, where I'm the only member and have to do weird rituals that I claim work for me.

As for you, I'd stick with the party of reason, as your logs indicate your machine hasn't evolved consciousness yet.

Kind regards,

christian

@bennetgallein, my issue seems to be exactly the same as yours. I have an LXC with Docker and, inside it, quite a few containers running nginx (Nextcloud, SWAG proxy, Heimdall, ...). Now I'd like to move the container's disk to a different drive; however, the rsync command (initiated from the Proxmox web UI using "Move Storage") fails with the same error as yours. The LXC is properly shut down.

Did you manage to solve this somehow?

```
...
rsync: [sender] get_xattr_data: lgetxattr("/var/lib/lxc/101/.copy-volume-2/var/lib/docker/overlay2/5ae2064b28b95eef52bc3ddd5a7d1a6330d4772a6087217464c250ceebe17a57/diff/var/lib/nginx/logs","user.overlay.origin",0) failed: No data available (61)
rsync: [sender] get_xattr_data: lgetxattr("/var/lib/lxc/101/.copy-volume-2/var/lib/docker/overlay2/5ae2064b28b95eef52bc3ddd5a7d1a6330d4772a6087217464c250ceebe17a57/diff/var/lib/nginx/modules","user.overlay.origin",0) failed: No data available (61)
rsync: [sender] get_xattr_data: lgetxattr("/var/lib/lxc/101/.copy-volume-2/var/lib/docker/overlay2/5ae2064b28b95eef52bc3ddd5a7d1a6330d4772a6087217464c250ceebe17a57/diff/var/lib/nginx/run","user.overlay.origin",0) failed: No data available (61)
rsync: [sender] get_xattr_data: lgetxattr("/var/lib/lxc/101/.copy-volume-2/var/lib/docker/overlay2/7dc98d61aff14288dac18b0de477379fcd475250e32b1edf1d9924de2c9e594b/diff/var/lib/nginx/logs","user.overlay.origin",0) failed: No data available (61)
rsync: [sender] get_xattr_data: lgetxattr("/var/lib/lxc/101/.copy-volume-2/var/lib/docker/overlay2/7dc98d61aff14288dac18b0de477379fcd475250e32b1edf1d9924de2c9e594b/diff/var/lib/nginx/modules","user.overlay.origin",0) failed: No data available (61)
rsync: [sender] get_xattr_data: lgetxattr("/var/lib/lxc/101/.copy-volume-2/var/lib/docker/overlay2/7dc98d61aff14288dac18b0de477379fcd475250e32b1edf1d9924de2c9e594b/diff/var/lib/nginx/run","user.overlay.origin",0) failed: No data available (61)
rsync: [sender] get_xattr_data: lgetxattr("/var/lib/lxc/101/.copy-volume-2/var/lib/docker/overlay2/eae1510a65c8b9c73832f0788ff78ed34144c10a3de95f521731e3f5ad695403/diff/var/lib/nginx/logs","user.overlay.origin",0) failed: No data available (61)
rsync: [sender] get_xattr_data: lgetxattr("/var/lib/lxc/101/.copy-volume-2/var/lib/docker/overlay2/eae1510a65c8b9c73832f0788ff78ed34144c10a3de95f521731e3f5ad695403/diff/var/lib/nginx/modules","user.overlay.origin",0) failed: No data available (61)
rsync: [sender] get_xattr_data: lgetxattr("/var/lib/lxc/101/.copy-volume-2/var/lib/docker/overlay2/eae1510a65c8b9c73832f0788ff78ed34144c10a3de95f521731e3f5ad695403/diff/var/lib/nginx/run","user.overlay.origin",0) failed: No data available (61)
...
TASK ERROR: command 'rsync --stats -X -A --numeric-ids -aH --whole-file --sparse --one-file-system '--bwlimit=0' /var/lib/lxc/101/.copy-volume-2/ /var/lib/lxc/101/.copy-volume-1' failed: exit code 23
```

Edit: Please note that these are symlinks. When accessing them from within the LXC, but outside the Docker container, the symlinks are broken; when accessing them from within the Docker container, all is fine and they can be resolved.
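To confirm which of the paths rsync complains about are symlinks, and where they point, `find` can list links without following them. A minimal sketch assuming GNU find (for `-printf`); the helper name `list_symlinks` is hypothetical. A target that only exists inside the Docker container's mount namespace will look broken from the LXC, which matches the observation above.

```shell
# list_symlinks DIR...  (hypothetical helper; needs GNU find)
# Prints every symbolic link below the given directories as "LINK -> TARGET".
# find does not follow symlinks by default, so broken links are listed too.
list_symlinks() {
    find "$@" -type l -printf '%p -> %l\n' 2>/dev/null | sort
}

# Usage inside the LXC (paths taken from the error messages):
#   list_symlinks /var/lib/docker/overlay2/*/diff/var/lib/nginx
```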


For me, this was the solution:

```
zfs set acltype=posixacl rpool/ROOT/pve-1
```

Saved me... Thanks!

Interestingly, I got the same issue with Docker containers that use nginx inside. Sometimes it works, sometimes it doesn't.

The workaround with zfs set doesn't work for me, as I use LVM rather than ZFS.

Any ideas how to solve this once and for all?

Same for me, as I am also using LVM and nginx inside a Docker container. I haven't continued my investigation, but I will need to move the container as I am running out of disk space. Have you had any success yet?

I switched to a VM with Docker inside and now have all my containers in one VM. That defeats my whole guideline of one VM/LXC per purpose, but with PBS I can still restore individual directories, so it is fine for me for now. But yeah, it would be great if this got fixed.