Greetings, friends. I'm looking for some help with my Proxmox VE installation.

I have a DL360e G8 server with four onboard 1 Gbit/s NICs, and I installed an additional PCIe card with two more 1 Gbit/s ports.

I'm trying to get more bandwidth by bonding them with LACP.

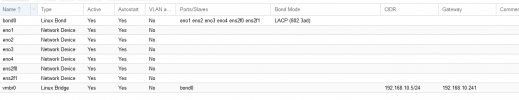

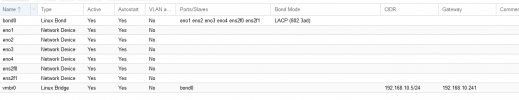

Here is my configuration:

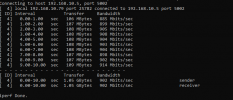

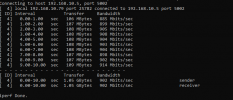

I installed iperf to test the transfer rate, and here are the results:

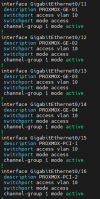

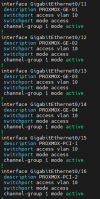
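A minimal sketch for context, assuming the bond uses a layer3+4 transmit hash policy (an assumption; the actual policy is only visible in the screenshots). LACP (802.3ad) does not split one connection across several links: each flow is hashed to a single member link, so one TCP stream is capped at one link's speed. The Python below is a simplified model of the layer3+4 formula described in the Linux bonding documentation, not the kernel's exact code; `pick_link` and the IP addresses are hypothetical.

```python
import ipaddress


def pick_link(src_ip: str, dst_ip: str, src_port: int, dst_port: int, n_links: int) -> int:
    """Return the index of the bond member a flow is sent on (simplified layer3+4 model)."""
    # Pack the two 16-bit ports into one 32-bit word, source port in the high half.
    h = (src_port << 16) | dst_port
    # Mix in both IPv4 addresses.
    h ^= int(ipaddress.IPv4Address(src_ip)) ^ int(ipaddress.IPv4Address(dst_ip))
    # Fold the high bits down so they influence the low bits.
    h ^= h >> 16
    h ^= h >> 8
    # Reduce modulo the number of member links.
    return h % n_links


if __name__ == "__main__":
    links = 6  # 4 onboard ports + 2 PCIe ports
    # One iperf stream is one flow, so it always maps to the same link.
    one = {pick_link("192.168.1.10", "192.168.1.20", 50000, 5201, links) for _ in range(10)}
    print("single stream uses links:", one)
    # Parallel streams differ in source port, so they can spread across links.
    many = {pick_link("192.168.1.10", "192.168.1.20", p, 5201, links) for p in range(50000, 50008)}
    print("8 parallel streams use links:", many)
```

Running iperf3 with several parallel streams (`-P`) gives each stream its own source port, so under this model the streams can land on different links; the switch applies its own hash to traffic in the other direction.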

On the switch side, the configuration is this:

I did a manual test, unplugging each cable one at a time, and the connection stayed up, so the logical configuration seems fine.

So my questions are:

Why is the bandwidth only 1 Gbit/s?

How can I get more?

Thanks for your help.
