Hello guys,

I am currently facing an issue in our Proxmox cluster, as described in the title.

It does not matter which node the VMs are on, or whether they run Linux or Windows: it happens on every single one.

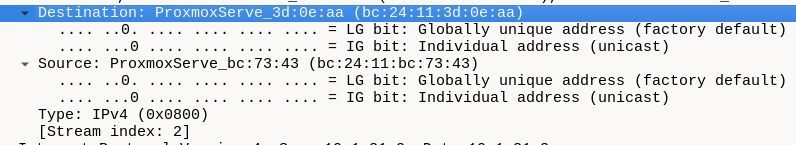

However, LXCs in the same VLAN are not affected since, as far as I can tell, they don't use the VirtIO adapter.

Here is some info on the current setup:


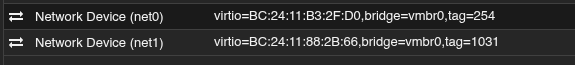


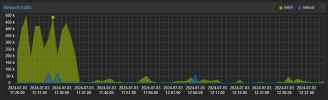

Package versions on each node:

```
proxmox-ve: 7.4-1 (running kernel: 5.15.108-1-pve)
pve-manager: 7.4-18 (running version: 7.4-18/b1f94095)
pve-kernel-5.15: 7.4-14
pve-kernel-5.15.158-1-pve: 5.15.158-1
pve-kernel-5.15.131-2-pve: 5.15.131-3
pve-kernel-5.15.131-1-pve: 5.15.131-2
pve-kernel-5.15.108-1-pve: 5.15.108-2
pve-kernel-5.15.30-2-pve: 5.15.30-3
ceph: 16.2.15-pve1
ceph-fuse: 16.2.15-pve1
corosync: 3.1.7-pve1
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown2: 3.1.0-1+pmx4
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.24-pve2
libproxmox-acme-perl: 1.4.4
libproxmox-backup-qemu0: 1.3.1-1
libproxmox-rs-perl: 0.2.1
libpve-access-control: 7.4.3
libpve-apiclient-perl: 3.2-2
libpve-common-perl: 7.4-2
libpve-guest-common-perl: 4.2-4
libpve-http-server-perl: 4.2-3
libpve-rs-perl: 0.7.7
libpve-storage-perl: 7.4-3
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 5.0.2-2
lxcfs: 5.0.3-pve1
novnc-pve: 1.4.0-1
proxmox-backup-client: 2.4.7-1
proxmox-backup-file-restore: 2.4.7-1
proxmox-kernel-helper: 7.4-1
proxmox-mail-forward: 0.1.1-1
proxmox-mini-journalreader: 1.3-1
proxmox-offline-mirror-helper: 0.5.2
proxmox-widget-toolkit: 3.7.4
pve-cluster: 7.3-3
pve-container: 4.4-7
pve-docs: 7.4-2
pve-edk2-firmware: 3.20230228-4~bpo11+3
pve-firewall: 4.3-5
pve-firmware: 3.6-6
pve-ha-manager: 3.6.1
pve-i18n: 2.12-1
pve-qemu-kvm: 7.2.10-1
pve-xtermjs: 4.16.0-2
qemu-server: 7.4-6
smartmontools: 7.2-pve3
spiceterm: 3.2-2
swtpm: 0.8.0~bpo11+3
vncterm: 1.7-1
zfsutils-linux: 2.1.15-pve1
```
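The list above looks like `pveversion -v` output. Rather than comparing it by eye across nodes, the per-node outputs could be diffed automatically. A minimal Python sketch, assuming each node's output has been saved as a string; the node names used below (`pve1`, `pve2`) are hypothetical:

```python
from __future__ import annotations


def parse_pveversion(text: str) -> dict[str, str]:
    """Parse `pveversion -v`-style lines ("package: version") into a dict."""
    versions = {}
    for line in text.splitlines():
        line = line.strip()
        if ": " not in line:
            continue  # skip blank lines and anything that is not "name: version"
        name, version = line.split(": ", 1)
        versions[name] = version
    return versions


def diff_nodes(outputs: dict[str, str]) -> dict[str, dict[str, str | None]]:
    """Return only the packages whose version differs between nodes.

    `outputs` maps a (hypothetical) node name to that node's raw output.
    A package missing on a node is reported as None for that node.
    """
    parsed = {node: parse_pveversion(text) for node, text in outputs.items()}
    packages = sorted(set().union(*parsed.values()))
    differing = {}
    for pkg in packages:
        per_node = {node: versions.get(pkg) for node, versions in parsed.items()}
        if len(set(per_node.values())) > 1:
            differing[pkg] = per_node
    return differing
```

Usage: `diff_nodes({"pve1": out1, "pve2": out2})` returns an empty dict when every package matches. Because the running kernel is part of the `proxmox-ve` line, a node booted into a different kernel also shows up as a difference.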

Output of `cat /etc/network/interfaces`:

```
# network interface settings; autogenerated
# Please do NOT modify this file directly, unless you know what
# you're doing.
#
# If you want to manage parts of the network configuration manually,
# please utilize the 'source' or 'source-directory' directives to do
# so.
# PVE will preserve these directives, but will NOT read its network
# configuration from sourced files, so do not attempt to move any of
# the PVE managed interfaces into external files!

auto lo
iface lo inet loopback

auto eno1
iface eno1 inet manual
#Port 1 - 1G

auto eno2
iface eno2 inet manual
#Port 2 - 1G

auto eno3
iface eno3 inet manual
#Port 3 - 1G

auto eno4
iface eno4 inet manual
#Port 4 - 1G

iface enx0a94ef038ed7 inet manual

auto ens1f0
iface ens1f0 inet manual
        mtu 9000
#Port 1 - 10G

auto ens1f1
iface ens1f1 inet manual
        mtu 9000
#Port 2 - 10G

auto bond100
iface bond100 inet manual
        bond-slaves eno1 eno3
        bond-miimon 100
        bond-mode balance-alb
#Bond 4G

auto bond900
iface bond900 inet manual
        bond-slaves ens1f0 ens1f1
        bond-miimon 100
        bond-mode balance-alb
        mtu 9000
#Bond 20G

auto bond800
iface bond800 inet manual
        bond-slaves eno2 eno4
        bond-miimon 100
        bond-mode active-backup
        bond-primary eno2
#Corosync

auto vmbr100
iface vmbr100 inet manual
        bridge-ports bond100
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094
#Bridge 4G

auto vmbr900
iface vmbr900 inet manual
        bridge-ports bond900
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094
        mtu 9000
#Bridge 20G

auto vlan900
iface vlan900 inet static
        address 10.10.20.70/24
        mtu 9000
        vlan-raw-device vmbr900
#Cluster / CEPH

auto vlan20
iface vlan20 inet static
        address 172.17.20.70/24
        gateway 172.17.20.1
        vlan-raw-device vmbr100
#Management

auto vlan800
iface vlan800 inet static
        address 10.10.30.70/24
        vlan-raw-device bond800
#Corosync
```

The issue does not occur with the following VM config:

```
agent: 1
boot: order=virtio0;ide2;net0;ide0
cores: 4
ide0: none,media=cdrom
ide2: none,media=cdrom
machine: pc-i440fx-7.1
memory: 4096
meta: creation-qemu=7.1.0,ctime=1682677511
name: Win10-VLAN
net0: e1000=E6:40:24:02:3D:AF,bridge=vmbr100,firewall=1
net1: e1000=2E:41:FB:9C:80:D1,bridge=vmbr100,firewall=1,tag=12
numa: 0
ostype: win10
scsihw: virtio-scsi-single
smbios1: uuid=10e81047-3e0b-43af-ae12-bcd490754eee
sockets: 1
tags: windows
virtio0: SSD-Pool01:vm-102-disk-2,discard=on,iothread=1,size=64G
vmgenid: f5e5c437-ad2e-4cd6-b5ee-bf21a2bff51b
```

The issue does occur with the following VM config:

```
agent: 1
boot: order=virtio0;ide2;net0;ide0
cores: 4
ide0: none,media=cdrom
ide2: none,media=cdrom
machine: pc-i440fx-7.1
memory: 4096
meta: creation-qemu=7.1.0,ctime=1682677511
name: Win10-VLAN
net0: virtio=E6:40:24:02:3D:AF,bridge=vmbr100,firewall=1
net1: virtio=2E:41:FB:9C:80:D1,bridge=vmbr100,firewall=1,tag=12
numa: 0
ostype: win10
scsihw: virtio-scsi-single
smbios1: uuid=10e81047-3e0b-43af-ae12-bcd490754eee
sockets: 1
tags: windows
virtio0: SSD-Pool01:vm-102-disk-2,discard=on,iothread=1,size=64G
vmgenid: f5e5c437-ad2e-4cd6-b5ee-bf21a2bff51b
```

As you can see, the only difference is that I changed the network adapter from e1000 to VirtIO.
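To confirm that the NIC model really is the only change between the two configs, they can be diffed key by key. A minimal Python sketch, assuming both configs were saved as text (for example from `qm config <vmid>` before and after the change); the function names are illustrative, not part of any Proxmox tool:

```python
from __future__ import annotations


def parse_qm_config(text: str) -> dict[str, str]:
    """Parse `qm config`-style "key: value" lines into a dict."""
    config = {}
    for line in text.splitlines():
        if ": " in line:
            key, value = line.split(": ", 1)  # MAC colons are not followed by a space
            config[key.strip()] = value.strip()
    return config


def config_diff(old: str, new: str) -> dict[str, tuple[str | None, str | None]]:
    """Return {key: (old_value, new_value)} for every key that differs."""
    a, b = parse_qm_config(old), parse_qm_config(new)
    return {
        key: (a.get(key), b.get(key))
        for key in sorted(a.keys() | b.keys())
        if a.get(key) != b.get(key)
    }


def nic_model(net_value: str) -> str:
    """'virtio=E6:40:...,bridge=vmbr100' -> 'virtio' (text before the first '=')."""
    return net_value.split(",", 1)[0].split("=", 1)[0]


def describe(old: str, new: str) -> list[str]:
    """Human-readable summary; netN entries are reduced to their NIC model."""
    lines = []
    for key, (before, after) in config_diff(old, new).items():
        if key.startswith("net") and before and after:
            lines.append(f"{key}: {nic_model(before)} -> {nic_model(after)}")
        else:
            lines.append(f"{key}: {before} -> {after}")
    return lines
```

Fed the two configs above, `describe()` should list only `net0` and `net1`, each going from `e1000` to `virtio`.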

Here are some screenshots of the network traffic in the Proxmox web GUI as well:

The traffic always drops as soon as I switch from VirtIO to e1000!
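Besides the web GUI graphs, the drop could be quantified from the host by sampling the byte counters of the VM's tap device with each NIC model. A minimal Python sketch, assuming the Proxmox naming convention `tap<vmid>i<n>` for the tap device (e.g. `tap102i1` for `net1`; the VMID 102 is only inferred from the disk name `vm-102-disk-2` and may be wrong). The counters are read from the host's point of view, so they are mirrored relative to the guest:

```python
from __future__ import annotations

import time
from pathlib import Path

SYSFS_NET = Path("/sys/class/net")


def read_byte_counters(dev: str, root: Path = SYSFS_NET) -> tuple[int, int]:
    """Return (rx_bytes, tx_bytes) for a network device from sysfs."""
    stats = root / dev / "statistics"
    rx = int((stats / "rx_bytes").read_text())
    tx = int((stats / "tx_bytes").read_text())
    return rx, tx


def to_mbit_per_s(before: int, after: int, seconds: float) -> float:
    """Convert a byte-counter delta over `seconds` into Mbit/s."""
    return (after - before) * 8 / seconds / 1_000_000


def sample_rate(dev: str, interval: float = 5.0,
                root: Path = SYSFS_NET) -> tuple[float, float]:
    """Sample the counters twice, `interval` seconds apart; return (rx, tx) in Mbit/s."""
    rx0, tx0 = read_byte_counters(dev, root)
    time.sleep(interval)
    rx1, tx1 = read_byte_counters(dev, root)
    return to_mbit_per_s(rx0, rx1, interval), to_mbit_per_s(tx0, tx1, interval)
```

Usage on the host: `sample_rate("tap102i1")`. The tap device is recreated when the VM restarts, which resets its counters, so both samples should be taken while the same NIC model is active.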

If you need any more info from me, please let me know!

Thank you for reading.
