Hello everyone!

I have a Proxmox home lab, and I'm now trying to choose a filesystem for my virtual machines.

I will be running Windows VMs, so I need the filesystem that performs best for them.

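I set up a Windows VM with this config:

    agent: 1
    balloon: 0
    bootdisk: virtio0
    cores: 4
    cpu: host
    memory: 4096
    name: Test-IOPS
    net0: virtio=A6:62:8E:A2:02:B7,bridge=vmbr0
    numa: 0
    ostype: win10
    scsihw: virtio-scsi-pci
    smbios1: uuid=206d4f33-dbf2-4a33-a9b9-0622092a7897
    sockets: 1
    virtio0: Silver:vm-109-disk-1,size=30G
    virtio1: Gold:vm-110-disk-2,size=30G

and I tested these filesystems: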

ext4

xfs

btrfs

zfs

LVM (without any fs, not thin LVM)

I ran ATTO Disk Benchmark inside the VM on each of them, and I got very strange results =(

My test hard drive is a WDC WD10EARS-00Y5B1 (5400 RPM, 64 MB cache, SATA 3.0 Gb/s).

I ran the tests on the virtio1 disk, which has no Windows installation on it (an empty disk formatted as NTFS).

Here are my test results inside the VM:

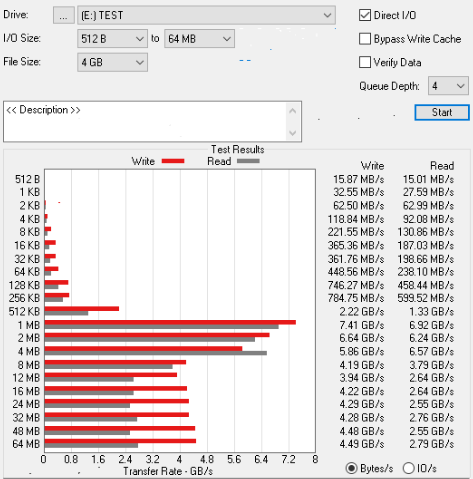

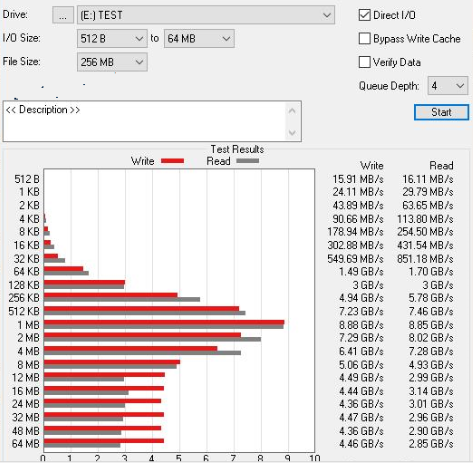

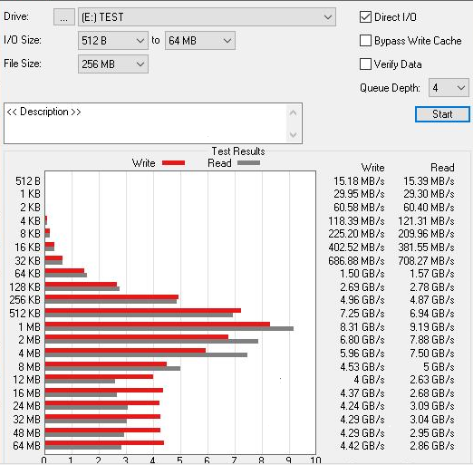

ext4 (qcow2)

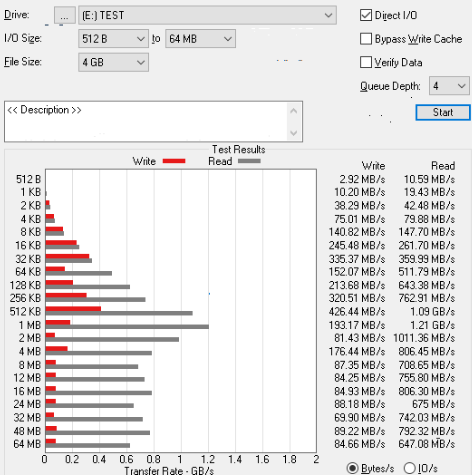

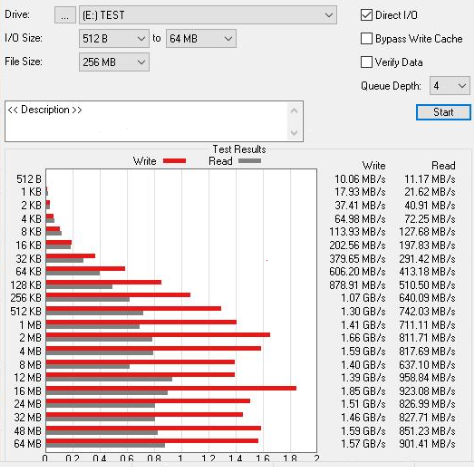

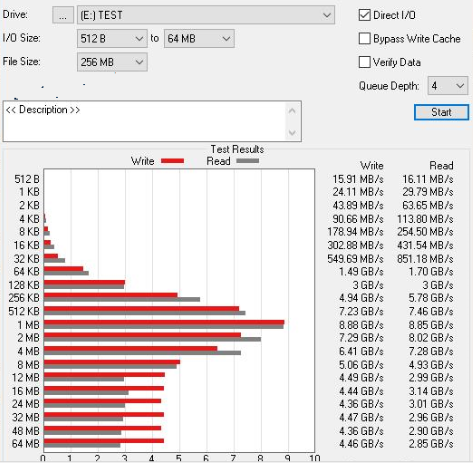

XFS (qcow2)

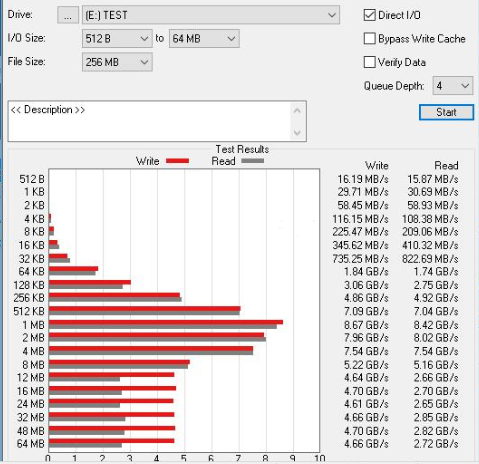

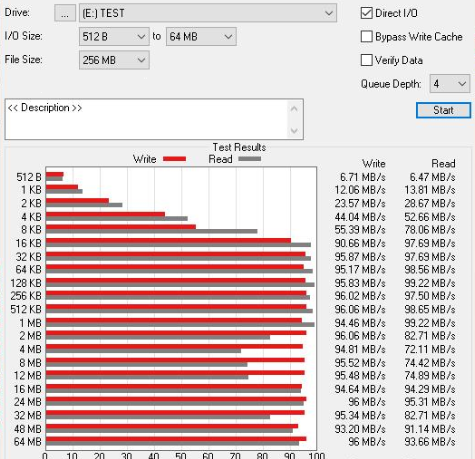

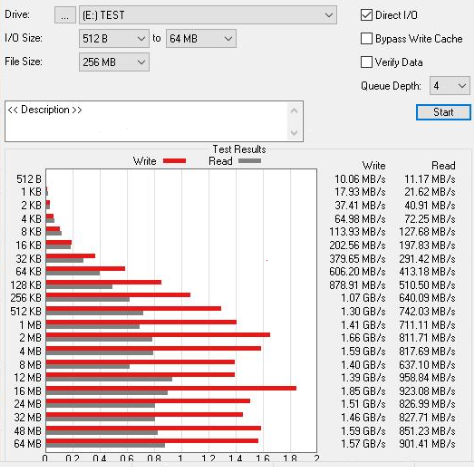

BTRFS (qcow2)

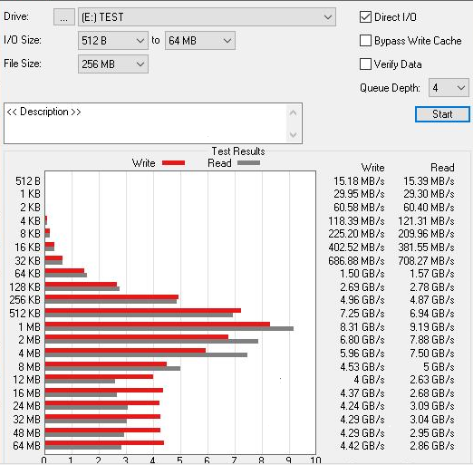

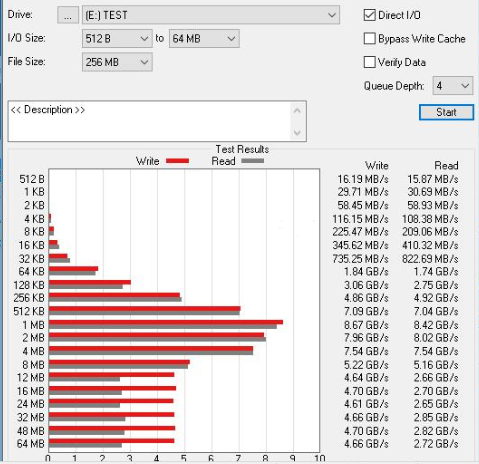

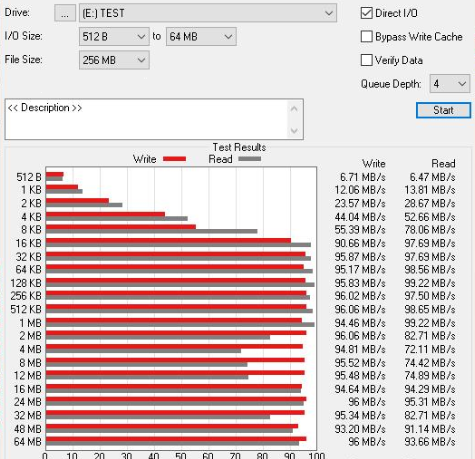

ZFS (pool, no compression)

LVM (not thin)

I don't understand how ext4, XFS and Btrfs reached 8 GB/s on this hard drive.

Maybe it's cache magic?

And why did ZFS and LVM perform so poorly compared to ext4, XFS and Btrfs?

What am I doing wrong, and which filesystem gives the best performance?
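For scale, here is a rough back-of-the-envelope check of what the drive's link can carry at all. It is only a sketch: it assumes SATA's 8b/10b line encoding, ignores protocol overhead (so the real ceiling is somewhat lower), and the names in it are made up for illustration.

```python
# Rough sanity check: can a SATA 3.0 Gb/s link deliver ~8 GB/s?
# Assumption: SATA uses 8b/10b encoding, so every 10 line bits carry 8 data bits.
# Protocol overhead is ignored, so this is an upper bound, not a real-world figure.

LINK_GBIT_PER_S = 3.0          # line rate from the drive spec (SATA 3.0 Gb/s)
ENCODING_EFFICIENCY = 8 / 10   # 8b/10b line code


def sata_ceiling_mb_per_s(line_rate_gbit: float) -> float:
    """Upper bound on payload throughput in MB/s (1 MB = 10**6 bytes)."""
    data_bits_per_s = line_rate_gbit * 1e9 * ENCODING_EFFICIENCY
    return data_bits_per_s / 8 / 1e6


ceiling = sata_ceiling_mb_per_s(LINK_GBIT_PER_S)
observed_mb_per_s = 8 * 1000  # the ~8 GB/s ATTO reported, read as decimal units

print(f"SATA 3.0 Gb/s ceiling: {ceiling:.0f} MB/s")
print(f"Observed / ceiling: {observed_mb_per_s / ceiling:.1f}x")
```

This prints a ceiling of 300 MB/s and a ratio of about 26.7x, so the reported figure cannot be coming straight from the drive over its SATA link.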

Thanks in advance!
