Hello,

I've been a 'browser' here for a few years and have used Proxmox for several, but I just set up a new server and have run into an issue I've never seen before.

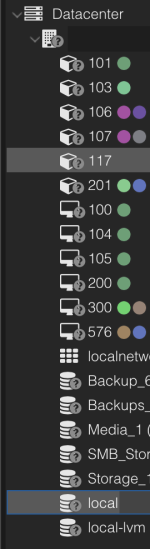

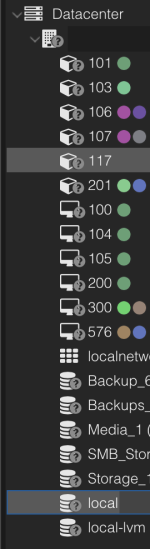

As per the title, not only the VMs but all the drives are marked as unknown (the VM numbers are wonky because I'm moving VMs from another node, using SMB as a backup destination/restore source).

I've tried a few things, including this, and then this (in German, but the image is similar), which suggested checking `systemctl status pvestatd.service`. That service is down.

I found out how to view the logs (though obviously they were printed with the status), and here they are:

Code:

Sep 12 16:53:17 prox2 systemd[1]: Starting pvestatd.service - PVE Status Daemon...

Sep 12 16:53:18 prox2 pvestatd[1865]: ipcc_send_rec[1] failed: Connection refused

Sep 12 16:53:18 prox2 pvestatd[1865]: ipcc_send_rec[2] failed: Connection refused

Sep 12 16:53:18 prox2 pvestatd[1865]: ipcc_send_rec[3] failed: Connection refused

Sep 12 16:53:18 prox2 pvestatd[1865]: ipcc_send_rec[1] failed: Connection refused

Sep 12 16:53:18 prox2 pvestatd[1865]: Unable to load access control list: Connection refused

Sep 12 16:53:18 prox2 pvestatd[1865]: ipcc_send_rec[2] failed: Connection refused

Sep 12 16:53:18 prox2 pvestatd[1865]: ipcc_send_rec[3] failed: Connection refused

Sep 12 16:53:18 prox2 systemd[1]: pvestatd.service: Control process exited, code=exited, status=111/n/a

Sep 12 16:53:18 prox2 systemd[1]: pvestatd.service: Failed with result 'exit-code'.

Sep 12 16:53:18 prox2 systemd[1]: Failed to start pvestatd.service - PVE Status Daemon.

I'm not in a node but tried `systemctl restart pve-cluster` and then `systemctl restart pvestatd`; a full reboot didn't help either.

I'm not sure where to go from here. If anyone can advise, I'd be most grateful.

Thank you.

I actually found my own solution, but I'll post this anyway.

Running `journalctl -u pve-cluster -b -n 50` found:

Code:

Sep 12 16:53:17 prox2 pmxcfs[1760]: [main] crit: Unable to resolve node name 'prox2' to a non-loopback IP address - missing entry in >

Sep 12 16:53:17 prox2 systemd[1]: pve-cluster.service: Control process exited, code=exited, status=255/EXCEPTION

Sep 12 16:53:17 prox2 systemd[1]: pve-cluster.service: Failed with result 'exit-code'.

Sep 12 16:53:17 prox2 systemd[1]: Failed to start pve-cluster.service - The Proxmox VE cluster filesystem.

......

Sep 12 16:53:23 prox2 pmxcfs[1880]: [main] notice: resolved node name 'prox2' to '192.168.68.6' for default node IP address

I updated the hosts file to include a .local domain rather than the .home domain I was using, and also updated my AdGuard (on a different machine) by removing a 'Custom filtering rule'. The rule wasn't even for the IP of the node, but it may have been causing the issue, as it was `192.168.68.6 my.home`. The node IP is .5, but the node was using a subdomain, e.g. prox.my.home, and I think it was affected by the custom rule.

Soon after I removed it and restarted `pvestatd`, everything came back (though I'm not sure I needed to restart pvestatd).

Suffice to say, while things still worked, apparently the (local) DNS can cause issues...
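For anyone landing here with the same error: the pmxcfs message in the log is about the node name not resolving to a non-loopback address. Below is a rough sketch of how that could be checked from the shell before restarting anything. It assumes `getent` and `awk` are available, and `is_loopback` and `check_node_name` are helper names I made up for illustration, not Proxmox tools.

```shell
#!/bin/sh
# Sketch only: check whether a name resolves to a non-loopback address.
# is_loopback and check_node_name are hypothetical helpers, not part of Proxmox.

# Succeeds (exit 0) if the given address is an IPv4 or IPv6 loopback address.
is_loopback() {
    case "$1" in
        127.*|::1) return 0 ;;
        *) return 1 ;;
    esac
}

# Resolves the given name via the system resolver order (hosts file, DNS, ...)
# and reports whether the first address returned is usable.
check_node_name() {
    name="$1"
    addr=$(getent hosts "$name" | awk '{ print $1; exit }')
    if [ -z "$addr" ]; then
        echo "$name: does not resolve"
        return 1
    fi
    if is_loopback "$addr"; then
        echo "$name: resolves only to loopback ($addr)"
        return 1
    fi
    echo "$name: resolves to $addr"
}
```

Calling `check_node_name "$(hostname)"` on the node should print the address the system resolver returns for the node name. If that is a loopback address, nothing at all, or an address that doesn't belong to the node, the hosts file or the local DNS is the place to look.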
