Hi Fiona,

I have a positive update: it seems this issue is not specific to Proxmox. Our CU vendor, who supplied the instructions for setting up this virtual machine, has observed similar behaviour on VMware as well. Digging further, the issue appears after the last of the following steps is executed.

1. Install the real-time kernel on the VM and verify that the running kernel is `5.14.0-162.12.1.rt21.175.el9_1.x86_64`.

2. `systemctl disable firewalld --now`

3. `sudo setenforce 0`

4. `sudo sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config`

5. `sudo sed -i 's/blacklist/#blacklist/' /etc/modprobe.d/sctp*`

6. Edit `/etc/tuned/realtime-virtual-host-variables.conf` and set `isolated_cores=2-15`.

7. `tuned-adm profile realtime-virtual-host`

8. Edit `/etc/default/grub` and set:

   `GRUB_CMDLINE_LINUX="crashkernel=1G-4G:192M,4G-64G:256M,64G-:512M rd.lvm.lv=rl/root processor.max_cstate=1 intel_idle.max_cstate=0 intel_pstate=disable idle=poll default_hugepagesz=1G hugepagesz=1G hugepages=1 intel_iommu=on iommu=pt selinux=0 enforcing=0 nmi_watchdog=0 audit=0 mce=off"`

9. Add the following two entries to `/etc/default/grub`:

   - `GRUB_CMDLINE_LINUX_DEFAULT="${GRUB_CMDLINE_LINUX_DEFAULT:+$GRUB_CMDLINE_LINUX_DEFAULT}\$tuned_params"`
   - `GRUB_INITRD_OVERLAY="${GRUB_INITRD_OVERLAY:+$GRUB_INITRD_OVERLAY }\$tuned_initrd"`

10. `grub2-mkconfig -o /boot/grub2/grub.cfg`

11. `reboot`

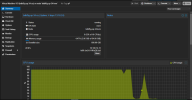

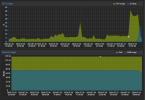

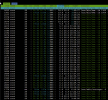

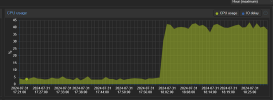

After this final reboot, both Proxmox and VMware show the same behaviour: the VM's CPU utilization is reported as 100%.
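To confirm which of the settings from the steps above are actually in effect on the boot that shows 100% utilization, a small check along these lines could be run inside the VM. This is only a sketch: `missing_params` is a hypothetical helper name, and the expected kernel version and parameter list are copied from steps 1 and 8.

```shell
#!/usr/bin/env bash
# Sketch: after the final reboot, check whether the RT kernel and the
# GRUB parameters from step 8 are actually active.
# EXPECTED_* values are copied from the steps; missing_params is hypothetical.

EXPECTED_KERNEL="5.14.0-162.12.1.rt21.175.el9_1.x86_64"
EXPECTED_PARAMS="processor.max_cstate=1 intel_idle.max_cstate=0 intel_pstate=disable idle=poll default_hugepagesz=1G hugepagesz=1G hugepages=1 intel_iommu=on iommu=pt selinux=0 enforcing=0 nmi_watchdog=0 audit=0 mce=off"

# Print each expected parameter that does not appear as a whole,
# space-separated token in the given command line
# (defaults to the running kernel's /proc/cmdline).
missing_params() {
    local cmdline=" ${1:-$(cat /proc/cmdline)} "
    local p
    for p in $EXPECTED_PARAMS; do
        case "$cmdline" in
            *" $p "*) ;;        # present
            *) echo "$p" ;;     # missing
        esac
    done
}

if [ "${BASH_SOURCE[0]}" = "$0" ]; then
    echo "running kernel: $(uname -r) (expected $EXPECTED_KERNEL)"
    echo "expected parameters missing from /proc/cmdline:"
    missing_params
    echo "isolated CPUs: $(cat /sys/devices/system/cpu/isolated 2>/dev/null)"
    echo "tuned: $(tuned-adm active 2>/dev/null || echo 'tuned-adm not available')"
end_marker=1
fi
```

An empty "missing" list means every parameter from step 8 made it onto the running kernel's command line.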

The vendor is currently trying to figure out why this happens. If you spot anything unusual in the commands above (especially for a VM), please let me know; I would be really grateful.
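One setting that may be worth ruling out first, given that this is a VM, is `idle=poll` from step 8. It makes the kernel's idle loop busy-poll instead of halting the CPU, so an otherwise idle guest vCPU never yields to the hypervisor and can be reported as fully busy by Proxmox or VMware. This is a hypothesis, not a confirmed cause. A minimal way to test it, assuming a Linux guest with `/proc/stat`, is to sample the guest's own CPU counters and compare them with what the hypervisor reports: if the guest sees mostly idle time while the host shows 100%, that pattern would be consistent with polling idle. `busy_pct` and `SAMPLE_SECONDS` are hypothetical names used only for this sketch.

```shell
#!/usr/bin/env bash
# Sketch: compare the guest's own view of CPU load with the hypervisor's.
# busy_pct and SAMPLE_SECONDS are hypothetical, not part of any tool.

# Given two aggregate "cpu ..." lines from /proc/stat, print the integer
# percentage of non-idle time between them. Fields used: user nice system
# idle iowait irq softirq steal (guest/guest_nice are already counted in
# user/nice, so they are skipped).
busy_pct() {
    awk -v a="$1" -v b="$2" 'BEGIN {
        n = split(a, x); split(b, y)
        total = 0
        for (i = 2; i <= 9 && i <= n; i++) total += y[i] - x[i]
        idle = (y[5] - x[5]) + (y[6] - x[6])   # idle + iowait
        if (total <= 0) { print 0; exit }
        printf "%d\n", 100 * (total - idle) / total
    }'
}

if [ "${BASH_SOURCE[0]}" = "$0" ]; then
    if grep -qw 'idle=poll' /proc/cmdline; then
        echo "idle=poll is active on this boot"
    fi
    s1=$(grep '^cpu ' /proc/stat)
    sleep "${SAMPLE_SECONDS:-5}"
    s2=$(grep '^cpu ' /proc/stat)
    echo "guest-reported CPU busy over ${SAMPLE_SECONDS:-5}s: $(busy_pct "$s1" "$s2")%"
fi
```

If the guest-reported figure stays low while Proxmox or VMware shows 100%, a follow-up test would be one boot without `idle=poll` to see whether the host-side reading changes.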

Will keep you posted.

Regards,

Vikrant
