Hi,

Please try the latest available version. Just to make sure: did you reboot the VM via the button in the Web UI? A reboot from inside the guest is not enough to pick up the newly installed version.

Your issue also looks different. The original issue in this thread is only about the IO thread(s) looping at 100% CPU. Do you really have this many disks with an IO thread attached? Otherwise, those are likely the vCPU threads.
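
To tell IO threads and vCPU threads apart, a rough sketch like the following could help. It sums the CPU usage per thread type from `ps` output. The helper name `summarize_threads` is made up for illustration, and the thread-name patterns (`CPU <n>/KVM` for vCPU threads, `IO ...` for IO threads) as well as the PID file path in the usage comment are assumptions about how QEMU names its threads on a Proxmox VE host, so please verify them against what `top -H` shows on your system.

```shell
#!/bin/sh
# Hypothetical helper (not part of Proxmox VE): sum CPU usage per thread type.
# Reads lines of "<pcpu> <thread name>" on stdin.
# Assumption: vCPU threads are named "CPU <n>/KVM" and IO threads start with "IO ".
summarize_threads() {
    awk '{
        pcpu = $1
        $1 = ""                      # drop the first field, keep the thread name
        name = $0
        sub(/^ +/, "", name)         # strip the leading separator left behind
        if (name ~ /^CPU [0-9]+\/KVM/) kind = "vcpu"
        else if (name ~ /^IO /)        kind = "iothread"
        else                           kind = "other"
        total[kind] += pcpu
    } END {
        printf "vcpu=%.1f iothread=%.1f other=%.1f\n",
               total["vcpu"], total["iothread"], total["other"]
    }'
}

# Usage on the host for VM 102 (PID file path is an assumption):
# ps -T -p "$(cat /var/run/qemu-server/102.pid)" -o pcpu=,comm= | summarize_threads
```

If nearly all of the usage ends up in the `vcpu` bucket, the load comes from the vCPU threads rather than from an IO thread.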

Please share the output of

pveversion -v

and

qm config 102

after upgrading and testing with the latest version. Another thing you might want to check is whether you have the latest BIOS updates and CPU microcode installed:

https://pve.proxmox.com/pve-docs/chapter-sysadmin.html#sysadmin_firmware_cpu

Hi Fiona,

I have updated the BIOS to the latest release and installed the CPU microcode following the link you shared. I have also updated PVE to the latest version and rebooted after all this using the buttons in the Proxmox GUI.

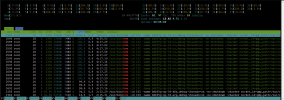

The issue is that when I look at my VM in the Proxmox GUI, it shows 100% CPU usage, but when I log in to the VM, the CPU usage inside the VM is less than 1%. I'm attaching a fresh screenshot showing both CPU usage values captured at the same time.

Output of given commands:

root@bdbf5g-sp-34-kvm:~# pveversion -v

proxmox-ve: 8.1.0 (running kernel: 6.5.13-1-pve)

pve-manager: 8.1.4 (running version: 8.1.4/ec5affc9e41f1d79)

proxmox-kernel-helper: 8.1.0

proxmox-kernel-6.5.13-1-pve-signed: 6.5.13-1

proxmox-kernel-6.5: 6.5.13-1

proxmox-kernel-6.5.11-8-pve-signed: 6.5.11-8

ceph-fuse: 17.2.7-pve2

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown2: 3.2.0-1+pmx8

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-4

libknet1: 1.28-pve1

libproxmox-acme-perl: 1.5.0

libproxmox-backup-qemu0: 1.4.1

libproxmox-rs-perl: 0.3.3

libpve-access-control: 8.1.2

libpve-apiclient-perl: 3.3.1

libpve-common-perl: 8.1.1

libpve-guest-common-perl: 5.0.6

libpve-http-server-perl: 5.0.5

libpve-network-perl: 0.9.5

libpve-rs-perl: 0.8.8

libpve-storage-perl: 8.1.0

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 5.0.2-4

lxcfs: 5.0.3-pve4

novnc-pve: 1.4.0-3

proxmox-backup-client: 3.1.4-1

proxmox-backup-file-restore: 3.1.4-1

proxmox-kernel-helper: 8.1.0

proxmox-mail-forward: 0.2.3

proxmox-mini-journalreader: 1.4.0

proxmox-offline-mirror-helper: 0.6.5

proxmox-widget-toolkit: 4.1.4

pve-cluster: 8.0.5

pve-container: 5.0.8

pve-docs: 8.1.4

pve-edk2-firmware: 4.2023.08-4

pve-firewall: 5.0.3

pve-firmware: 3.9-2

pve-ha-manager: 4.0.3

pve-i18n: 3.2.1

pve-qemu-kvm: 8.1.5-3

pve-xtermjs: 5.3.0-3

qemu-server: 8.0.10

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.2.2-pve2

root@bdbf5g-sp-34-kvm:~# qm config 102

agent: 1

balloon: 0

boot: order=scsi0;ide2;net0

cores: 16

cpu: host

ide2: local:iso/Rocky-9.1-x86_64-minimal.iso,media=cdrom,size=1555264K

memory: 131072

meta: creation-qemu=8.1.5,ctime=1710449085

name: bdbf5g-sp-34-cu

net0: virtio=BC:24:11:21:C2:B3,bridge=vmbr0,firewall=1

net1: virtio=BC:24:11:2D:6D

7,bridge=vmbr1,firewall=1

net2: virtio=BC:24:11

2:B7:27,bridge=vmbr2,firewall=1

net3: virtio=BC:24:11:9C:21:35,bridge=vmbr3,firewall=1

numa: 0

onboot: 1

ostype: l26

scsi0: local-zfs:vm-102-disk-0,iothread=1,size=600G

scsihw: virtio-scsi-single

smbios1: uuid=fe3f4063-1d86-4213-9764-e4481b5e4d1a

sockets: 1

vmgenid: 7478bb4d-0160-448e-a223-3057491ac322

root@bdbf5g-sp-34-kvm:~#