Well, that's strange if you can already access to evpn from lan ^_^.

Why? I mean, wasn't that the whole purpose of setting up EVPN with my MikroTik, using an additional BGP controller from Proxmox to the MikroTik? If not, then what were we doing here the whole time?

Do you remember the chart?

[attachment: 1666898334487.png]

It shows that my IP, 192.168.1.1, needs to reach a custom network created in SDN using EVPN and the MikroTik (RouterOS 6, which has no EVPN support; we talked about that too), and for that I needed the extra BGP controller.

So, to answer your next question:

Is your mikrotik doing evpn ? Maybe it's acting as exit node, and forward traffic between your evpn network and lan ?

No, because it can't (RouterOS 6, remember?), hence the additional config layer:

10.0.1.30 is the MikroTik

10.0.1.1 is the Proxmox host

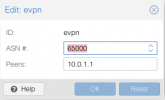

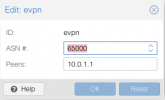

and on the Proxmox side it is listed as a peer on the EVPN controller, so basically the type-5 route is announced by Proxmox:

Code:

root@pve:~# vtysh -c "sh ip bgp l2vpn evpn" |grep "\[5"

EVPN type-5 prefix: [5]:[EthTag]:[IPlen]:[IP]

*> [5]:[0]:[0]:[0.0.0.0]

*> [5]:[0]:[0]:[::] 10.0.1.1(pve) 32768 i

and, as you can see, it is.
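For anyone who wants to script this check instead of reading the grep output by eye, here is a minimal Python sketch. It assumes the line layout shown above (`*>` marks the best path, and the route key follows the `[5]:[EthTag]:[IPlen]:[IP]` header); the function names are hypothetical and not part of FRR or Proxmox.

```python
import re

# Matches an EVPN type-5 route key such as [5]:[0]:[0]:[0.0.0.0]
# (layout taken from the header line: [5]:[EthTag]:[IPlen]:[IP]).
TYPE5 = re.compile(r"\[5\]:\[(\d+)\]:\[(\d+)\]:\[([0-9A-Fa-f:.]+)\]")


def type5_prefixes(vtysh_output):
    """Return (ip, prefix_len) for every best-path ('*>') type-5 route."""
    prefixes = []
    for line in vtysh_output.splitlines():
        line = line.strip()
        if not line.startswith("*>"):
            continue  # skip the header line and anything that is not a best path
        match = TYPE5.search(line)
        if match:
            prefixes.append((match.group(3), int(match.group(2))))
    return prefixes


def announces_default_route(vtysh_output):
    """True if an IPv4 or IPv6 default route (length 0) is among the type-5 routes."""
    return any(
        plen == 0 and ip in ("0.0.0.0", "::")
        for ip, plen in type5_prefixes(vtysh_output)
    )
```

The input would be the text printed by `vtysh -c "sh ip bgp l2vpn evpn"` on the Proxmox host, for example captured to a file and read back in.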

I think DNS should work. As proof, take a look:

Ping from a VM on the overlay network, 10.0.101.1 => 10.0.1.1, works:

Code:

ansible@srv-app-1:~$ ping -c 4 10.0.1.1

PING 10.0.1.1 (10.0.1.1) 56(84) bytes of data.

64 bytes from 10.0.1.1: icmp_seq=1 ttl=64 time=0.146 ms

64 bytes from 10.0.1.1: icmp_seq=2 ttl=64 time=0.224 ms

64 bytes from 10.0.1.1: icmp_seq=3 ttl=64 time=0.135 ms

64 bytes from 10.0.1.1: icmp_seq=4 ttl=64 time=0.174 ms

--- 10.0.1.1 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3049ms

rtt min/avg/max/mdev = 0.135/0.169/0.224/0.034 ms

But there is an issue with services: when I try to reach one on a specific port on Proxmox, the connection is refused (or, for DNS, times out).

For example, from my laptop to the DNS server that runs on the Proxmox host:

Code:

❯ host unifi.sonic 10.0.1.1

Using domain server:

Name: 10.0.1.1

Address: 10.0.1.1#53

Aliases:

unifi.sonic is an alias for rasp-poz-1.hw.sonic.

rasp-poz-1.hw.sonic has address 10.255.0.20

That returns a proper answer.

Now from a VM on the 10.0.101.0/24 network, which is the EVPN-type SDN network:

Code:

ansible@srv-app-1:~$ host unifi.sonic 10.0.1.1

;; connection timed out; no servers could be reached

SSH to Proxmox from the VM doesn't work either:

Code:

ansible@srv-app-1:~$ ssh 10.0.1.1

ssh: connect to host 10.0.1.1 port 22: Connection refused

So it looks like Proxmox isn't allowing the VM to use its services.
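Note that the two failures above are not the same: the DNS query timed out, while SSH was refused. A timeout usually means nothing came back at all (a silent drop, or a reply that got lost on the way), whereas "refused" means something actively answered with a reset or a reject. To tell the cases apart per port, a small probe like the following Python sketch could be run from the VM. The function name is hypothetical, and it only tests TCP, so for DNS it assumes the server also listens on TCP port 53.

```python
import socket


def probe_tcp(host, port, timeout=3.0):
    """Classify a TCP connection attempt as 'open', 'refused', 'timeout' or 'error: ...'."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"  # three-way handshake completed
    except ConnectionRefusedError:
        return "refused"  # a reset or reject came back
    except socket.timeout:
        return "timeout"  # no answer within the timeout
    except OSError as exc:
        return f"error: {exc}"  # e.g. no route to host
```

Calling `probe_tcp("10.0.1.1", 22)` and `probe_tcp("10.0.1.1", 53)` from the VM, and then the same from the laptop, would show whether the behaviour depends on the source network.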

But the VM can, for example, reach the UniFi controller that runs on my Raspberry Pi on a different VLAN, 10.255.0.0/24:

Code:

ansible@srv-app-1:~$ curl -kIs https://10.255.0.20:8443

HTTP/1.1 302

Location: /manage

Transfer-Encoding: chunked

Date: Wed, 04 Jan 2023 19:25:19 GMT

There are a lot of entries in iptables -S, including a specific chain called PVEFW-Reject:

Code:

Chain PVEFW-Reject (0 references)

target prot opt source destination

PVEFW-DropBroadcast all -- anywhere anywhere

ACCEPT icmp -- anywhere anywhere icmp fragmentation-needed

ACCEPT icmp -- anywhere anywhere icmp time-exceeded

DROP all -- anywhere anywhere ctstate INVALID

PVEFW-reject udp -- anywhere anywhere multiport dports 135,445

PVEFW-reject udp -- anywhere anywhere udp dpts:netbios-ns:139

PVEFW-reject udp -- anywhere anywhere udp spt:netbios-ns dpts:1024:65535

PVEFW-reject tcp -- anywhere anywhere multiport dports epmap,netbios-ssn,microsoft-ds

DROP udp -- anywhere anywhere udp dpt:1900

DROP tcp -- anywhere anywhere tcp flags:!FIN,SYN,RST,ACK/SYN

DROP udp -- anywhere anywhere udp spt:domain

all -- anywhere anywhere /* PVESIG:h3DyALVslgH5hutETfixGP08w7c */

There is a DROP for UDP packets with source port 53 (spt:domain).
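Two details in this listing may be worth a second look before blaming it for the DNS timeout. The header says `(0 references)`, which in `iptables -L` output is the number of rules that jump to this chain, so a chain with zero references is not traversed. And the `domain` rule matches `spt` (source port), not `dpt` (destination port). A minimal Python sketch that pulls both facts out of a pasted listing, assuming a single user-defined chain in the `-L` format shown above (the function name is hypothetical):

```python
import re

CHAIN_HEADER = re.compile(r"^Chain (\S+) \((\d+) references\)")
PORT_MATCH = re.compile(r"\b(spt|dpt):(\S+)")  # single-port matches only


def summarize_chain(listing):
    """Return (chain_name, reference_count, port_rules) for one `iptables -L` chain.

    port_rules is a list of (target, protocol, direction, port), where
    direction is 'spt' (source port) or 'dpt' (destination port).
    """
    name, refs, rules = None, None, []
    for line in listing.splitlines():
        header = CHAIN_HEADER.match(line.strip())
        if header:
            name, refs = header.group(1), int(header.group(2))
            continue
        fields = line.split()
        port = PORT_MATCH.search(line)
        if port and len(fields) >= 2:
            rules.append((fields[0], fields[1], port.group(1), port.group(2)))
    return name, refs, rules
```

If the reference count is 0, the rules in that chain are unlikely to explain the behaviour, and the chains that are actually reachable from INPUT would be the next place to look.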

And by the way:

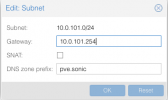

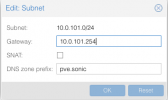

There is an issue with the SNAT option. After checking the SNAT option here, then unchecking it again, and of course applying the change with a network restart in between, SNAT is still active even though it is no longer checked:

Code:

root@pve:~# iptables -L -t nat

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

SNAT all -- 10.0.101.0/24 anywhere to:10.0.1.1

root@pve:~#
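To compare the state before and after toggling the option without reading the whole table each time, a short Python sketch like this could list the SNAT rules found in the output above. It assumes the `iptables -L -t nat` column layout shown here (target, prot, opt, source, destination, then `to:<address>`); the function name is hypothetical.

```python
def snat_rules(nat_listing):
    """Return (source, translated_address) for each SNAT rule in an `iptables -L -t nat` listing."""
    rules = []
    for line in nat_listing.splitlines():
        fields = line.split()
        # Rule lines look like: SNAT all -- 10.0.101.0/24 anywhere to:10.0.1.1
        if len(fields) >= 6 and fields[0] == "SNAT":
            targets = [f for f in fields[5:] if f.startswith("to:")]
            if targets:
                rules.append((fields[3], targets[0][len("to:"):]))
    return rules
```

An empty list after unchecking the option and reloading the network would mean the rule was removed; a remaining `10.0.101.0/24` entry would confirm the leftover rule described above.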