Hello! Using VXLAN, should I see a process listening on *:4789? I don't see any on my nodes.

I can't see a process, however there is a socket on udp/4789:

root@pve01:~# ss -tulpn | grep 4789

udp UNCONN 0 0 0.0.0.0:4789 0.0.0.0:*
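For what it's worth, an empty process column is expected here: VXLAN encapsulation is done by the Linux kernel's vxlan driver, so the UDP socket belongs to the kernel rather than to a userspace daemon. Below is a minimal sketch of a check, assuming `ss` output in the format shown above. The function name `vxlan_socket_owner` is made up for this example, and the "kernel" label is only inferred from the missing `users:(...)` column, which can also be missing when `ss` is run without root.

```shell
# Read `ss -ulpn` style output on stdin and report sockets bound to a port.
# $1 is the port (defaults to 4789, the IANA-assigned VXLAN port).
# "kernel" here only means "ss listed no owning process" (an inference).
vxlan_socket_owner() {
    awk -v port="${1:-4789}" '
        {
            for (i = 1; i <= NF; i++) {
                # The first addr:port field on a line is the local address.
                if ($i ~ /:[0-9*]+$/) {
                    if ($i ~ (":" port "$")) {
                        owner = ($0 ~ /users:\(/) ? "userspace" : "kernel"
                        print $i, owner
                    }
                    break
                }
            }
        }'
}
```

Usage on a node would be `ss -ulpn | vxlan_socket_owner`. To see which interface uses the port, `ip -d link show type vxlan` lists the VXLAN devices together with their `dstport`.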


vrfvx_vm is the VRF of the zone, with its own routing table (ip route show vrf vrfvx_vm).

OK, I see: packets leave on pve01 and the answer arrives on pve02, where it is routed to pve01 via vrfvx_vm, which does not have any routes.

sysctl -w net.ipv4.conf.all.rp_filter=0 made the packets flow; the default is 2 (loose source validation).

So, it's working with net.ipv4.conf.all.rp_filter=0?


What are the values of the other net.ipv4.conf.*.rp_filter settings? On my server they are 0 by default, only all=2 (but I think that the other values override it).

What is your kernel version?


I have two upstream BGP routers (with ECMP), so it is always possible for a packet to take an asymmetric path. But this shouldn't matter, as I am not using the firewall; as I understand it, the only thing that matters for rp_filter is the routing table.

Kernel 6.2, but also same issue with the standard kernel (5.19?).

Individual interfaces have rp_filter=0, but all has 2 as a default in PVE.

I can set it to 0 for all and to 2 for individual interfaces, except for the uplink interfaces which I leave on 0.

Edit: yes, it is working with net.ipv4.conf.all.rp_filter=0
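On the question of which value wins: the kernel's ip-sysctl documentation states that the maximum of conf/all/rp_filter and conf/<interface>/rp_filter is used for source validation on that interface. The following is a small sketch of that rule, not anything shipped with PVE; the function names `effective_rp_filter` and `show_rp_filter` are hypothetical, and the directory argument exists only so the logic can be tried against a copy of the tree.

```shell
# Effective rp_filter for one interface: the kernel uses the larger of
# conf.all.rp_filter ($1) and conf.<iface>.rp_filter ($2).
effective_rp_filter() {
    if [ "$1" -gt "$2" ]; then echo "$1"; else echo "$2"; fi
}

# Print "<iface> <effective value>" for every interface.
# $1 is the conf directory (defaults to the live /proc tree).
show_rp_filter() (
    dir=${1:-/proc/sys/net/ipv4/conf}
    all=$(cat "$dir/all/rp_filter")
    for f in "$dir"/*/rp_filter; do
        iface=${f%/rp_filter}
        iface=${iface##*/}
        # "all" and "default" are not real interfaces, skip them.
        case $iface in all|default) continue ;; esac
        echo "$iface $(effective_rp_filter "$all" "$(cat "$f")")"
    done
)
```

With all=2 and an interface at 0, the effective value on that interface is 2, which matches what was observed in this thread. With all=0, each interface's own value applies, so the uplinks can stay at 0 while other interfaces are set to 2.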

I looked in my archive: I made a note about this some years ago in an early pve-doc VXLAN draft, but not in the official SDN doc.

https://lists.proxmox.com/pipermail/pve-devel/2019-September/038893.html

I think it's a security measure in EVPN routing between different VRFs, even if you don't use the firewall.

I'll add the note to the official SDN doc.

Thanks for the report!

@spirit and what about my question that is slowly dying in the depths of the abyss?

What was your question? (I'm a bit lost in the thread exchanges) ^_^

(BTW, the doc has been updated for rp_filter)

It was hard to miss, but you did it => #555

OK, sorry, I think I was on holiday.

so, metallb is announcing

10.0.50.1/32 ----> 10.0.10.1 to your mikrotik

10.0.10.1/32 gw <exit-node physical ip> or full subnet 10.0.10.0/24 gw <exit-node physical ip>

if your subnet 10.0.10.0/24 is already configured on the vnet,

you need to announce this subnet to your mikrotik (create an extra bgp controller for each exit-node, and add your mikrotik ip)

Then, more difficult: on the exit-node, you need a route to reach 10.0.50.1...

we need a route like: 10.0.50.1/32 ----> 10.0.10.1


@spirit

Er, no? 10.0.10.1 is a VM in a VNet on the SDN on Proxmox (10.0.1.1). Sorry, I don't know how to explain that any more simply.

[ (metallb 10.0.50.1/32) VM (10.0.10.1) ] => Mikrotik 10.0.1.30

But I already have that. BGP, remember? This was already done.

View attachment 48607

The above advertisement 10.0.1.30 <= (10.0.10.0/24) => 10.0.1.1

and I can reach 10.0.10.1 where k3s stands.

So OK, this is irrelevant; I thought it was visible on the screenshots that I provided. I don't know, maybe look closely, or I can correct something if it's not visible already.

So if I understand correctly that by exit-node you mean the Proxmox host (10.0.1.1), then the routes look like this:

Code:
konrad@pve:~$ ip r
default via 10.0.1.30 dev bond0.11 proto kernel onlink
10.0.1.0/27 dev bond0.11 proto kernel scope link src 10.0.1.1
10.0.10.0/24 nhid 25 dev vmpoz1 proto bgp metric 20
10.0.50.0/24 dev vmpoz1 scope link
192.168.0.0/16 nhid 62 via 10.0.1.30 dev bond0.11 proto bgp metric 20

there is already 10.0.50.0/24

and as you see 10.0.10.0/24 and 10.0.50.0/24 are on the same dev.

and on 10.0.10.1 I can reach 10.0.50.1 because it is a Node (in Kubernetes terminology)

Code:
konrad@srv-app-1:~$ ip a |grep inet
inet 127.0.0.1/8 scope host lo
inet 10.0.10.1/24 brd 10.0.10.255 scope global ens18
inet 10.0.21.0/32 scope global flannel.1
inet 10.0.21.1/24 brd 10.0.21.255 scope global cni0
konrad@srv-app-1:~$ curl -Is 10.0.50.1
HTTP/1.1 404 Not Found
Server: nginx/1.23.3
Date: Wed, 29 Mar 2023 11:23:17 GMT
Content-Type: text/html
Content-Length: 153
Connection: keep-alive

I think there is some issue between the Proxmox host (not a VM on Proxmox), which is 10.0.1.1, and 10.0.10.1, which Proxmox can't reach. The question is: should it?

What I have done is add an additional network layer on top of the SDN, which is the K3s overlay in the VM. And I want to reach the custom network 10.0.50.0/24, which I thought I had already achieved by pushing it to the Mikrotik using BGP:

Code:
[recover@Mike[1]] > ip route pr
Flags: X - disabled, A - active, D - dynamic, C - connect, S - static, r - rip, b - bgp, o - ospf, m - mme, B - blackhole, U - unreachable, P - prohibit
# DST-ADDRESS PREF-SRC GATEWAY DISTANCE
0 ADS 0.0.0.0/0 109.173.130.129 1
1 X S ;;; WAN1
0.0.0.0/0 85.221.204.253 1
2 A S 10.0.0.0/24 10.255.253.2 1
3 ADC 10.0.1.0/27 10.0.1.30 SRV_11 0
4 ADb 10.0.10.0/24 10.0.1.1 200
5 ADb 10.0.50.1/32 10.0.10.1 200 <= here

So to summarise: I first needed access to 10.0.10.0/24, a subnet of the VNet, and entry 4 in the routing table above shows that I did that using a BGP controller. Next, from a host in that network (10.0.10.0/24), I run another BGP session to announce the additional network 10.0.50.0/24, but this time the gateway is 10.0.10.1, which is reached via the previous BGP route.

So I'm stuck
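The two-step lookup described in this post (10.0.50.1/32 via 10.0.10.1, which itself is only reachable through 10.0.10.0/24 via 10.0.1.1) can be checked by hand with a small prefix-membership helper. This is only an illustrative sketch: `ip_to_int` and `ip_in_cidr` are hypothetical names, and the addresses are the ones from the routing tables in this thread.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() (
    IFS=.
    set -f
    set -- $1            # split the address on dots into $1..$4
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Succeed if address $1 lies inside prefix $2 (for example 10.0.10.0/24).
ip_in_cidr() (
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    len=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    [ $(( ip & mask )) -eq $(( net & mask )) ]
)

# Gateway of route 5 resolves through route 4 on the Mikrotik:
ip_in_cidr 10.0.10.1 10.0.10.0/24 && echo "10.0.10.1 is covered by 10.0.10.0/24 (via 10.0.1.1)"
# Next hop of route 4 is directly connected:
ip_in_cidr 10.0.1.1 10.0.1.0/27 && echo "10.0.1.1 is on the connected 10.0.1.0/27"
```

So on the Mikrotik side the chain resolves; the remaining question in the thread is whether the exit node itself forwards traffic for 10.0.50.1 on to 10.0.10.1.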

Yes, I really don't know.

As a workaround, I have some customers using a pair of VMs with a simple keepalived VIP + haproxy in front of k8s (with haproxy redirecting to all ingresses),

and the k8s outbound traffic is NATed through the worker VM IP.

@spirit

Hm, but from what I have achieved, it seems like I'm so close to getting this done and working. Maybe it's a firewall rule?

Btw, do you think that upgrading the Mikrotik to v7 with EVPN support will resolve those problems?

OK, when it comes to MetalLB, I managed to deal with it by dropping the 10.0.50.0/24 network and using 10.0.10.128/25 instead, which is part of the 10.0.10.0/24 subnet that already works thanks to the SDN troubleshooting above, and that works.

btw @spirit

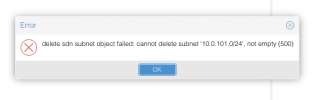

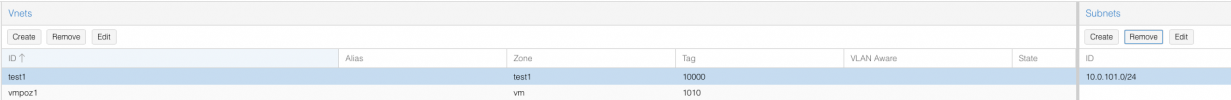

Why can't I remove an unnecessary VNet?

View attachment 49401

I always had that issue when a subnet had a gateway defined, but this time it doesn't have one.

View attachment 49402

# pveversion --verbose

proxmox-ve: 7.4-1 (running kernel: 5.15.104-1-pve)

pve-manager: 7.4-3 (running version: 7.4-3/9002ab8a)

pve-kernel-5.15: 7.4-1

pve-kernel-5.15.104-1-pve: 5.15.104-1

pve-kernel-5.15.102-1-pve: 5.15.102-1

ceph-fuse: 15.2.17-pve1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.4

libproxmox-backup-qemu0: 1.3.1-1

libproxmox-rs-perl: 0.2.1

libpve-access-control: 7.4-2

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.3-4

libpve-guest-common-perl: 4.2-4

libpve-http-server-perl: 4.2-1

libpve-network-perl: 0.7.3

libpve-rs-perl: 0.7.5

libpve-storage-perl: 7.4-2

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.2-2

lxcfs: 5.0.3-pve1

novnc-pve: 1.4.0-1

proxmox-backup-client: 2.4.1-1

proxmox-backup-file-restore: 2.4.1-1

proxmox-kernel-helper: 7.4-1

proxmox-mail-forward: 0.1.1-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.6.5

pve-cluster: 7.3-3

pve-container: 4.4-3

pve-docs: 7.4-2

pve-edk2-firmware: 3.20230228-2

pve-firewall: 4.3-1

pve-firmware: 3.6-4

pve-ha-manager: 3.6.0

pve-i18n: 2.12-1

pve-qemu-kvm: 7.2.0-8

pve-xtermjs: 4.16.0-1

qemu-server: 7.4-3

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+3

vncterm: 1.7-1

zfsutils-linux: 2.1.9-pve1

View attachment 49994

The layout of the SDN zone configuration page appears to be incorrect in the new version. Could someone please review it?

SDN > Zones > Add

I confirm this issue. It's impossible to delete any subnet.

You need to delete the gateway first if a gateway exists in the subnet. (It's on my todo list to fix this.)

That's obvious. But I wasn't able to remove it even without a gateway. There wasn't even a gateway added at all.

Jul 4 22:08:42 pve kernel: [ 1110.090480] vmbr0: port 1(bond0) entered disabled state

Jul 4 22:08:42 pve kernel: [ 1110.187661] device bond0 left promiscuous mode

Jul 4 22:08:42 pve kernel: [ 1110.188462] vmbr0: port 1(bond0) entered disabled state

Jul 4 22:08:42 pve kernel: [ 1110.439809] bond0 (unregistering): (slave enp8s0f2): Releasing backup interface

Jul 4 22:08:42 pve kernel: [ 1110.541858] bond0 (unregistering): (slave enp8s0f3): Removing an active aggregator

Jul 4 22:08:42 pve kernel: [ 1110.542030] bond0 (unregistering): (slave enp8s0f3): Releasing backup interface

Jul 4 22:08:42 pve kernel: [ 1110.662830] bond0 (unregistering): Released all slaves

Jul 4 22:08:44 pve kernel: [ 1112.514522] bond0: (slave enp8s0f2): Enslaving as a backup interface with a down link

Jul 4 22:08:44 pve kernel: [ 1112.569960] bond0: (slave enp8s0f3): Enslaving as a backup interface with a down link

Jul 4 22:09:05 pve networking[27748]: warning: bond0.11: post-up cmd 'sleep 20 && /usr/bin/ip route add default via 10.0.1.30 dev bond0.11 proto kernel onlink' failed: returned 2 (Error: Nexthop device is not up.

Jul 4 22:09:05 pve kernel: [ 1132.886136] vmbr0: port 1(bond0) entered blocking state

Jul 4 22:09:05 pve kernel: [ 1132.886144] vmbr0: port 1(bond0) entered disabled state

Jul 4 22:09:05 pve kernel: [ 1132.886649] device bond0 entered promiscuous mode

Jul 4 22:09:05 pve kernel: [ 1132.891754] bond0: Warning: No 802.3ad response from the link partner for any adapters in the bond

Jul 4 22:09:05 pve kernel: [ 1132.892121] 8021q: adding VLAN 0 to HW filter on device bond0

Jul 4 22:09:05 pve kernel: [ 1132.892767] IPv6: ADDRCONF(NETDEV_CHANGE): bond0.11: link becomes ready

Jul 4 22:09:05 pve kernel: [ 1132.897216] vmbr0: port 1(bond0) entered blocking state

Jul 4 22:09:05 pve kernel: [ 1132.897222] vmbr0: port 1(bond0) entered forwarding state

Jul 4 22:09:05 pve kernel: [ 1132.898653] bond0: (slave enp8s0f2): link status definitely up, 1000 Mbps full duplex

Jul 4 22:09:05 pve kernel: [ 1132.898667] bond0: active interface up!

Jul 4 22:09:05 pve kernel: [ 1132.898865] bond0: (slave enp8s0f3): link status definitely up, 1000 Mbps full duplex

Hello

VXLAN works for me without problems, and so does EVPN, but only if there is no other default gateway, as described below. May I ask for your opinion? I am trying to configure EVPN according to the instructions in the manual, with the difference that I have 2x Proxmox 8 as separate nodes without a cluster. There is 1x VM with RockyLinux 9 on each.

On the VM, I have two networks: eth0 with the default gateway, and eth2, on which EVPN is configured. After restarting both VMs, pings work between them, but if there is no activity for a minute, FRR BGP forgets the MAC/IP addresses. When I ping VM1->VM2, only the MAC from VM1 is added to BGP and the ping does not work; when I then ping VM2->VM1, the MAC from VM2 is also added to BGP and the ping starts working on both VMs. I tried adding the default GW to the eth2 interface, setting "Disable arp-nd suppression", and turning the firewalls off, but the same thing happens. Does EVPN work with such a connection scheme?

Thank you very much

You should enable the option 'advertise subnet' on the zone if you have "silent hosts".
