Hi all,

I have a build using standard PC parts, and one of my VMs is OpenMediaVault NAS OS.

Storage-wise, I currently have:

- 1st NVMe - Proxmox OS.

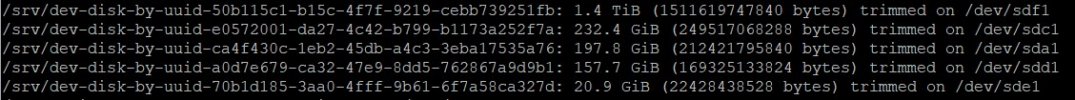

- Passed through to the NAS from mobo SATA ports (using Passthrough Physical Disk to Virtual Machine (VM)):

- 2nd NVMe

- 1st SSD

- 2nd SSD

- 1st HDD

- I've recently read that this kind of passthrough is not a good idea for NVMe drives and SSDs, as TRIM isn't set up automatically. What do I need to do to ensure TRIM is being used? Bear in mind that the only setting I've changed is enabling "SSD emulation".

- Would I be better off not passing through the SSDs and instead adding the disks as Proxmox storage, effectively creating a virtual disk that uses all the space on each individual drive?

- As the information on the SSDs isn't critical, they are not backed up. Does this cause potential issues if the VM is restored?

- In that case the data would be written to a virtual disk rather than directly to the physical disk, so I assume that if the virtual disk isn't backed up using Proxmox, I would lose its data if the VM is restored?

- The cache default is "no cache". As I'm passing through disks, should this be changed to "write through"? Is that only for SSDs, or for HDDs too?

- An HBA might be better; unfortunately, HBAs that support TRIM are more expensive and quite energy hungry. Living in the UK, I would like to keep any increase in power draw to a minimum, so I was thinking of going with a hybrid setup if I can, with the SSDs on the mobo SATA ports and an HBA for the HDDs. That might be the best of both worlds, allowing me to use a cheap, power-sipping HBA!