Hello,

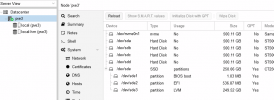

At the moment I have 3 HDDs on a hardware RAID card, configured as RAID 5. This gives me about 260 GB of storage available to Proxmox.

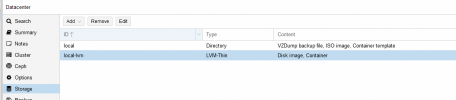

60 GB of this is used for ISOs etc.; the rest is for VM and LXC storage. I am running out of room.

The Proxmox host has some customisation so that I can pass a GPU through and get logs into Grafana in a container.

I'm looking at getting a 2 TB RAID 1 array set up to replace the RAID 5.

How can I move the Proxmox host, all VMs and containers to the new virtual hard drive?

If possible, I don't want to reinstall Proxmox, as I would have to configure the GPU passthrough and logging again, so I'd like to copy the whole system.

I hope you understand what I'm after; I can't word it very well!

Thanks,

Zac.
