Proxmox says that it moves 128 GB, but in reality it only moves the used space. You can see this in the transfer speed, which increases enormously once it reaches the free space.
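
One way to check whether only the used space matters is to compare an image file's apparent size with the space actually allocated for it. This is a minimal sketch, not a Proxmox tool: `apparent_and_allocated` is a hypothetical helper, and it assumes a Linux host where `st_blocks` is reported in 512-byte units. On an NFS mount the allocated figure is whatever the server reports.

```python
import os
import tempfile


def apparent_and_allocated(path: str) -> tuple[int, int]:
    """Return (apparent size, bytes actually allocated on disk) for a file."""
    st = os.stat(path)
    # On Linux, st_blocks counts 512-byte units regardless of st_blksize.
    return st.st_size, st.st_blocks * 512


if __name__ == "__main__":
    # Hypothetical demo: a 64 MiB "disk image" that has never been written to.
    with tempfile.NamedTemporaryFile(delete=False) as f:
        f.truncate(64 * 1024 * 1024)  # extends the file without writing any data
        path = f.name
    try:
        apparent, allocated = apparent_and_allocated(path)
        print(f"apparent={apparent} bytes, allocated={allocated} bytes")
    finally:
        os.unlink(path)
```

On a filesystem that supports sparse files, the allocated figure for an untouched image should be far below the apparent size. A large gap means there is little real data to move.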

That's interesting. I installed netdata on Proxmox and monitored it. While watching a migration from one NFS share to the other, I saw that all of the free space on the virtual drive was still read, bit by bit, from my NFS share, even though it was empty.
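
Whether a copy can skip free space depends on whether the reader can find out where the holes are. As a minimal sketch (assuming Linux and Python 3.9+; `data_extents` is a hypothetical helper, not part of Proxmox or netdata), `lseek` with `SEEK_DATA`/`SEEK_HOLE` lists the byte ranges the filesystem reports as data. A sparse-aware reader only needs to read those ranges. When a filesystem cannot report holes, Linux treats the whole file as a single data extent, so a reader relying on this would read every byte. Whether that applies to this particular NFS share is an assumption, not something the netdata graphs establish.

```python
import errno
import os
import tempfile


def data_extents(path: str) -> list[tuple[int, int]]:
    """List (start, end) byte ranges the filesystem reports as data.

    If the filesystem cannot report holes, Linux falls back to
    reporting the whole file as one data extent.
    """
    extents = []
    fd = os.open(path, os.O_RDONLY)
    try:
        size = os.fstat(fd).st_size
        offset = 0
        while offset < size:
            try:
                start = os.lseek(fd, offset, os.SEEK_DATA)
            except OSError as e:
                if e.errno == errno.ENXIO:  # no more data after offset
                    break
                raise
            end = os.lseek(fd, start, os.SEEK_HOLE)  # next hole (or EOF)
            extents.append((start, end))
            offset = end
    finally:
        os.close(fd)
    return extents


if __name__ == "__main__":
    # Hypothetical demo: 16 MiB file with a single 4 KiB block written at 8 MiB.
    with tempfile.NamedTemporaryFile(delete=False) as f:
        f.truncate(16 * 1024 * 1024)
        f.seek(8 * 1024 * 1024)
        f.write(b"x" * 4096)
        path = f.name
    try:
        for start, end in data_extents(path):
            print(f"data {start}-{end} ({end - start} bytes)")
    finally:
        os.unlink(path)
```

On a hole-aware filesystem the demo should report one small extent around 8 MiB; on one without hole reporting it reports the full 16 MiB.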

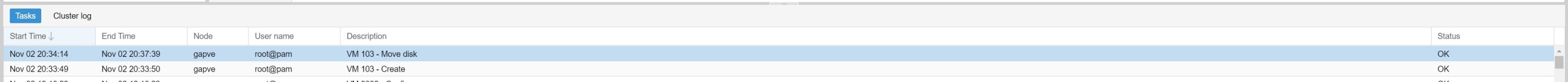

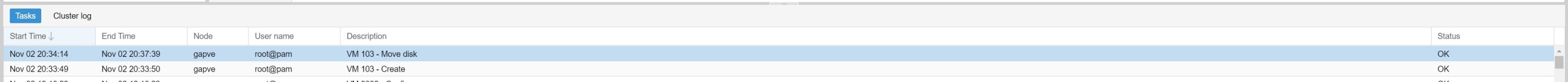

Here is a test I just ran that copied a disk from the NFS share to my local storage array:

- Created a brand-new VM with a 64 GB virtual disk (never booted it).
- Migrated its disk from the NFS share it was created on to a local disk array.

What should have migrated instantly (the disk is empty) took minutes over a link faster than a gigabit. See the attached screenshot.
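
As a rough sanity check on "minutes", here is a back-of-the-envelope sketch that assumes every byte of the 64 GiB virtual disk crosses the link. `transfer_seconds` is a hypothetical helper; the figures assume exactly 1 Gbit/s and ignore protocol overhead, so a faster link would finish sooner.

```python
GIB = 1024 ** 3  # bytes in one GiB, matching the "64.0 GiB" in the task log


def transfer_seconds(size_bytes: int, link_bps: float, efficiency: float = 1.0) -> float:
    """Seconds to move size_bytes over a link running at link_bps * efficiency."""
    return size_bytes * 8 / (link_bps * efficiency)


if __name__ == "__main__":
    full = transfer_seconds(64 * GIB, 1e9)  # every byte of the virtual disk
    print(f"full 64 GiB at 1 Gbit/s: {full:.0f} s (~{full / 60:.1f} min)")
```

This prints `full 64 GiB at 1 Gbit/s: 550 s (~9.2 min)`. Reading the whole virtual size therefore lands in the "minutes" range, whereas copying only allocated data from an empty image would be close to instantaneous.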

*Screenshot (Proxmox Virtual Environment 7.0-13, Virtual Machine 103 on node 'gapve'): disk scsi0, target storage nvme, format Raw disk image (raw). Task log:*

```
create full clone of drive scsi0 (freenas:103/vm-103-disk-0.qcow2)
WARNING: You have not turned on protection against thin pools running out of space.
WARNING: Set activation/thin_pool_autoextend_threshold below 100 to trigger automatic extension of thin pools before they get full.
Logical volume "vm-103-disk-0" created.
WARNING: Sum of all thin volume sizes (576.61 GiB) exceeds the size of thin pool nvme/nvme and the size of whole volume group (238.47 GiB).
transferred 0.0 B of 64.0 GiB (0.00%)
transferred 655.4 MiB of 64.0 GiB (1.00%)
transferred 1.3 GiB of 64.0 GiB (2.00%)
transferred 1.9 GiB of 64.0 GiB (3.00%)
transferred 2.6 GiB of 64.0 GiB (4.00%)
transferred 3.2 GiB of 64.0 GiB (5.00%)
transferred 3.8 GiB of 64.0 GiB (6.01%)
transferred 4.5 GiB of 64.0 GiB (7.01%)
transferred 5.1 GiB of 64.0 GiB (8.01%)
transferred 5.8 GiB of 64.0 GiB (9.01%)
transferred 6.4 GiB of 64.0 GiB (10.01%)
transferred 7.0 GiB of 64.0 GiB (11.01%)
transferred 7.7 GiB of 64.0 GiB (12.01%)
transferred 8.3 GiB of 64.0 GiB (13.01%)
transferred 9.0 GiB of 64.0 GiB (14.01%)
transferred 9.6 GiB of 64.0 GiB (15.01%)
transferred 10.3 GiB of 64.0 GiB (16.02%)
transferred 10.9 GiB of 64.0 GiB (17.02%)
transferred 11.5 GiB of 64.0 GiB (18.02%)
transferred 12.2 GiB of 64.0 GiB (19.02%)
transferred 12.8 GiB of 64.0 GiB (20.02%)
transferred 13.5 GiB of 64.0 GiB (21.02%)
transferred 14.1 GiB of 64.0 GiB (22.02%)
transferred 14.7 GiB of 64.0 GiB (23.02%)
transferred 15.4 GiB of 64.0 GiB (24.02%)
transferred 16.0 GiB of 64.0 GiB (25.02%)
transferred 16.7 GiB of 64.0 GiB (26.03%)
transferred 17.3 GiB of 64.0 GiB (27.03%)
transferred 17.9 GiB of 64.0 GiB (28.03%)
transferred 18.6 GiB of 64.0 GiB (29.03%)
transferred 19.2 GiB of 64.0 GiB (30.03%)
transferred 19.9 GiB of 64.0 GiB (31.03%)
transferred 20.5 GiB of 64.0 GiB (32.03%)
transferred 21.1 GiB of 64.0 GiB (33.03%)
transferred 21.8 GiB of 64.0 GiB (34.03%)
transferred 22.4 GiB of 64.0 GiB (35.03%)
transferred 23.1 GiB of 64.0 GiB (36.04%)
transferred 23.7 GiB of 64.0 GiB (37.04%)
transferred 24.3 GiB of 64.0 GiB (38.04%)
transferred 25.0 GiB of 64.0 GiB (39.04%)
transferred 25.6 GiB of 64.0 GiB (40.04%)
transferred 26.3 GiB of 64.0 GiB (41.04%)
transferred 26.9 GiB of 64.0 GiB (42.04%)
transferred 27.5 GiB of 64.0 GiB (43.04%)
transferred 28.2 GiB of 64.0 GiB (44.04%)
transferred 28.8 GiB of 64.0 GiB (45.04%)
transferred 29.5 GiB of 64.0 GiB (46.04%)
transferred 30.1 GiB of 64.0 GiB (47.05%)
transferred 30.8 GiB of 64.0 GiB (48.05%)
transferred 31.4 GiB of 64.0 GiB (49.05%)
transferred 32.0 GiB of 64.0 GiB (50.05%)
transferred 32.7 GiB of 64.0 GiB (51.05%)
transferred 33.3 GiB of 64.0 GiB (52.05%)
transferred 34.0 GiB of 64.0 GiB (53.05%)
transferred 34.6 GiB of 64.0 GiB (54.05%)
transferred 35.2 GiB of 64.0 GiB (55.05%)
transferred 35.9 GiB of 64.0 GiB (56.05%)
transferred 36.5 GiB of 64.0 GiB (57.06%)
transferred 37.2 GiB of 64.0 GiB (58.06%)
transferred 37.8 GiB of 64.0 GiB (59.06%)
transferred 38.4 GiB of 64.0 GiB (60.06%)
transferred 39.1 GiB of 64.0 GiB (61.06%)
transferred 39.7 GiB of 64.0 GiB (62.06%)
transferred 40.4 GiB of 64.0 GiB (63.06%)
transferred 41.0 GiB of 64.0 GiB (64.06%)
transferred 41.6 GiB of 64.0 GiB (65.06%)
transferred 42.3 GiB of 64.0 GiB (66.06%)
transferred 42.9 GiB of 64.0 GiB (67.07%)
transferred 43.6 GiB of 64.0 GiB (68.07%)
transferred 44.2 GiB of 64.0 GiB (69.07%)
transferred 44.8 GiB of 64.0 GiB (70.07%)
transferred 45.5 GiB of 64.0 GiB (71.07%)
transferred 46.1 GiB of 64.0 GiB (72.07%)
transferred 46.8 GiB of 64.0 GiB (73.07%)
transferred 47.4 GiB of 64.0 GiB (74.07%)
transferred 48.0 GiB of 64.0 GiB (75.07%)
transferred 48.7 GiB of 64.0 GiB (76.07%)
transferred 49.3 GiB of 64.0 GiB (77.08%)
transferred 50.0 GiB of 64.0 GiB (78.08%)
transferred 50.6 GiB of 64.0 GiB (79.08%)
transferred 51.3 GiB of 64.0 GiB (80.08%)
transferred 51.9 GiB of 64.0 GiB (81.08%)
transferred 52.5 GiB of 64.0 GiB (82.08%)
transferred 53.2 GiB of 64.0 GiB (83.08%)
transferred 53.8 GiB of 64.0 GiB (84.08%)
transferred 54.5 GiB of 64.0 GiB (85.08%)
transferred 55.1 GiB of 64.0 GiB (86.08%)
transferred 55.7 GiB of 64.0 GiB (87.08%)
transferred 56.4 GiB of 64.0 GiB (88.09%)
transferred 57.0 GiB of 64.0 GiB (89.09%)
transferred 57.7 GiB of 64.0 GiB (90.09%)
transferred 58.3 GiB of 64.0 GiB (91.09%)
transferred 58.9 GiB of 64.0 GiB (92.09%)
transferred 59.6 GiB of 64.0 GiB (93.09%)
transferred 60.2 GiB of 64.0 GiB (94.09%)
transferred 60.9 GiB of 64.0 GiB (95.09%)
transferred 61.5 GiB of 64.0 GiB (96.09%)
transferred 62.1 GiB of 64.0 GiB (97.09%)
transferred 62.8 GiB of 64.0 GiB (98.10%)
transferred 63.4 GiB of 64.0 GiB (99.10%)
transferred 64.0 GiB of 64.0 GiB (100.00%)
transferred 64.0 GiB of 64.0 GiB (100.00%)
TASK OK
```