Low ZFS read performance Disk->Tape

- Thread starter Lephisto



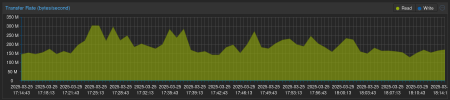

//update: This looks a whole lot better. Seems to sustain the LTO drive speed except for a very few dips. Thank you!

regards.

nice, great to hear

as for the slowdowns:

AFAICS from the logs you posted and what i observed there are 3 types of slowdowns that are expected now:

* when the tape has to reverse directions (this has to be done since for one track the tape has to reverse the direction a few times)

* when we sync/flush/commit (this should now happen only once every 128GiB)

* when the storage slows down, e.g. in your case i think that happens sometimes when the chunks are very small and the disk array can't keep up with that many IO requests (i also saw a correlation here between lower speeds and higher chunk# in the logs)
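To get a feel for how rare the sync/flush/commit slowdown should be, here is a rough back-of-the-envelope sketch. It only assumes the 128GiB interval mentioned above; the rates passed in are illustrative (100 MB/s is the figure quoted for this setup, 300 MB/s is just a faster example), and the function name is made up for this sketch.

```shell
#!/bin/sh
# Rough estimate of the time between sync/flush/commit points at a given
# sustained write rate. 128 GiB is the interval mentioned in the thread;
# the rates used below are illustrative, not measurements.
COMMIT_INTERVAL_BYTES=$((128 * 1024 * 1024 * 1024))

# commit_interval_seconds RATE_MBS
# RATE_MBS: sustained rate in decimal megabytes per second.
# Prints the interval in whole seconds (integer division, rounded down).
commit_interval_seconds() {
    rate_bytes=$(( $1 * 1000 * 1000 ))
    echo $(( COMMIT_INTERVAL_BYTES / rate_bytes ))
}

commit_interval_seconds 100   # about 23 minutes between commits
commit_interval_seconds 300   # under 8 minutes between commits
```

So even at higher drive speeds the commit pause should only show up every few minutes, which fits the "very few dips" in the graph.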

Just note that in the final version of the first patch (with the threads) the name of the datastore property will change, so you either have to delete it again before upgrading to a version with the patch applied, or manually edit the config after upgrading, since the gui will produce an error for an unknown option

I was wondering all the time how LTO is handling constant buffer underruns. Will it just pause and wait until the buffer is filled or will the constant tape speed just be tapered down?

afaik that depends on the individual hardware.

old (before LTO) drives had to do a full stop rewind and restart, which was not healthy, google "shoe-shining", "Back-Hitching" and tape drives.

i think LTO had DRM "data rate matching" from the start.

modern LTO drives can slow down, afaik IBM drives have something like 7 speeds at which they can write to the tape.

HP has afaik a patent on "infinite" speeds for their drives.

(each vendor has different speeds for their drives)

and i think some even support (can be configured) writing zeros to the tape in order to keep it running, at the cost of wasted space.

so in short, on modern LTO drives buffer underruns should not happen that often and are not as harmful as in the past.

check the tech specs for your quantum drive, chances are that with your ~100MB/s you never had a buffer underrun.
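As a toy illustration of the speed matching idea described above: the drive picks the fastest of its discrete speeds that the host can still feed, and only underruns if the host is slower than the slowest step. The speed steps and the function name below are made-up placeholders, not the specs of any IBM, HP or Quantum drive.

```shell
#!/bin/sh
# Toy model of data rate matching. The speed steps are hypothetical
# placeholders (ascending, in MB/s), NOT taken from any real drive spec.
DRIVE_SPEEDS_MBS="40 60 80 100 120 140 160"

# pick_tape_speed HOST_RATE_MBS
# Prints the fastest step that does not exceed the host rate, or
# "underrun" if the host is slower than the slowest step.
pick_tape_speed() {
    best="underrun"
    for speed in $DRIVE_SPEEDS_MBS; do
        if [ "$speed" -le "$1" ]; then
            best="$speed"
        fi
    done
    echo "$best"
}

pick_tape_speed 100   # host delivers ~100 MB/s
pick_tape_speed 30    # host slower than the slowest step
```

With real hardware the steps (and whether an underrun means a stop or padding) come from the vendor's tech specs, so this is only meant to show why ~100MB/s can be fine on one drive and a problem on another.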

Is there any ETA when this will make it into a release?

one part is already applied and should already be in proxmox-backup-server 3.2.3-1

(this fix: https://lists.proxmox.com/pipermail/pbs-devel/2024-May/009192.html)

but the multithreading was held up because of some concerns regarding how we want to decide e.g. the number of threads (to not overload the host etc..)

i hope i can pick that part up soon again

Thank you for the great work! Seems really promising. This might fix the issues with tape backup that I have also encountered (although with much older hardware).

Any eta yet for the multithreading improvement patch?

sorry for the late answer, no, not yet. after some discussion, simply upping the thread count is probably not the way to go here, otherwise it becomes too easy to overload the pbs (storage and cpu wise), but we're still looking into that and hopefully will come up with a better fix

Okay, thanks. Is there a bugzilla ticket for this?

Hi, we're struggling with the same slow disk->tape writing speed, so I wanted to ask whether there is already an expected release date for the fix/patch. Just offering the option to use multiple threads (selectable by the user) seems to be perfectly reasonable to me, as settings always need to be configured with some care...

Anyway, it's great to know that the issue has been identified and addressed. Keep up the great work


You can build the packages yourself, which is a bit clumsy but worked for me. I could send you the .deb files if you trust me, but I would also propose that Proxmox integrate this upstream.

Thanks for the offer

Dunno if this helps anybody else but my old spinning rust was happy with changing these settings:

Seems like keeping stuff a while longer in the ARC is the way to go.

Code:

echo 12000 > /sys/module/zfs/parameters/zfs_arc_min_prefetch_ms

echo 10000 > /sys/module/zfs/parameters/zfs_arc_min_prescient_prefetch_ms
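If you want to try these values, it can help to note the previous setting before overwriting it, so you can go back. A minimal sketch, assuming the two parameter names and values from the post above; the helper name `set_zfs_param` and the `ZFS_PARAM_DIR` override are made up for this example.

```shell
#!/bin/sh
# Directory holding the ZFS module parameters. Overridable (hypothetical
# variable for this sketch) so the helper can be tried on a scratch dir.
ZFS_PARAM_DIR="${ZFS_PARAM_DIR:-/sys/module/zfs/parameters}"

# set_zfs_param NAME VALUE
# Prints "NAME: old -> new", then writes the new value.
# Returns 1 if the parameter file is missing or not writable.
set_zfs_param() {
    param_file="$ZFS_PARAM_DIR/$1"
    if [ ! -w "$param_file" ]; then
        echo "cannot write $param_file" >&2
        return 1
    fi
    echo "$1: $(cat "$param_file") -> $2"
    echo "$2" > "$param_file"
}

# Usage (as root), with the values from the post above:
# set_zfs_param zfs_arc_min_prefetch_ms 12000
# set_zfs_param zfs_arc_min_prescient_prefetch_ms 10000
```

Values written under /sys do not survive a reboot. The usual way to keep them is an `options zfs ...` line in a file under /etc/modprobe.d/, but test the runtime change first to see whether it helps your pool at all.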

i pinged the latest patch recently, here is a link to the mail thread: https://lore.proxmox.com/pbs-devel/20250221150631.3791658-1-d.csapak@proxmox.com/

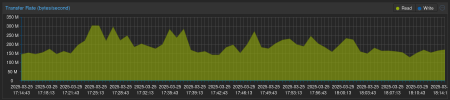

I tried the latest patch (https://forum.proxmox.com/threads/problems-compiling-pbs-v3-3-2.161721/) against 3.3.4. Mixed results.

Somehow i don't seem to be able to sustain speeds.

It's the only task running.

Is there something significantly different?

//edit ping @dcsapak
