@_gabriel exactly as @RoCE-geek said.

Hardware: 2x Xeon 6230R; both VMs used the same config, listed below.

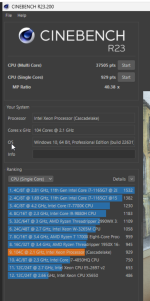

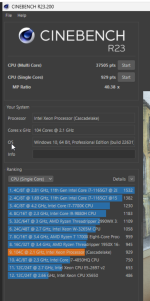

Win11 23H2 is only marginally faster on all cores (within the margin of error), but the single-core speedup is 18%, which roughly reflects the max boost frequency reached during the single-core test run.

The Xeon 6230R was able to boost up to 3.75 GHz under Win11 23H2 vs. 3.1 GHz under Win11 25H2.
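To put numbers on "roughly reflects": a quick back-of-the-envelope check comparing the clock ratio to the measured speedup. This is only a sketch using the figures quoted above; the variable names are mine, and it assumes single-core performance scales more or less linearly with clock, which is a simplification.

```python
# Peak clocks observed during the single-core run (GHz), from the post above.
boost_23h2 = 3.75
boost_25h2 = 3.1

# Relative clock advantage of 23H2 over 25H2, in percent.
clock_ratio = boost_23h2 / boost_25h2
clock_gain_pct = (clock_ratio - 1) * 100

# Measured single-core speedup from the benchmark, in percent.
observed_speedup_pct = 18.0

print(f"clock advantage:              {clock_gain_pct:.1f}%")
print(f"observed single-core speedup: {observed_speedup_pct:.1f}%")
```

The clock advantage works out to about 21%, so the 18% benchmark gap is in the same ballpark and is plausibly explained by the boost difference alone.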

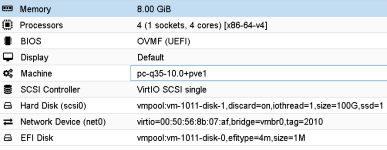

Code:

agent: 1

bios: ovmf

boot: order=scsi0;ide2;ide0;net0

cores: 52

cpu: Cascadelake-Server-v5,flags=+md-clear;+pcid;+spec-ctrl;+pdpe1gb;+hv-tlbflush;+hv-evmcs

efidisk0: linstor_nvme_1:pm-33087c22_114,efitype=4m,ms-cert=2023,pre-enrolled-keys=1,size=3080K

hotplug: 0

ide0: ISO-1:iso/virtio-win-0.1.285.iso,media=cdrom,size=771138K

ide2: ISO-1:iso/Win11_23H2_English_x64.iso,media=cdrom,size=6548134K

machine: pc-q35-10.1

memory: 64000

meta: creation-qemu=10.1.2,ctime=1764535929

name: win11-23h2

net0: virtio=BC:24:11:E4:D1:51,bridge=vmbr0,firewall=1

numa: 1

ostype: win11

scsi0: linstor_nvme_1:pm-a0c6d8aa_114,discard=on,iothread=1,size=159383560K,ssd=1

scsihw: virtio-scsi-single

smbios1: uuid=xxxxxxxxxxxxxxxxxxxxxxxxxxxx

sockets: 2

tpmstate0: linstor_nvme_1:pm-29d19dc3_114,size=4M,version=v2.0

vga: virtio
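For anyone reading the config: a small sketch of how the `cores` and `sockets` lines translate into the vCPU count the guest sees. It assumes `cores` is per socket (as the Proxmox docs describe it) and that each 6230R has 26 cores / 52 threads per Intel's spec; `parse_config` is just a hypothetical helper for illustration, not a Proxmox tool.

```python
# Relevant lines copied from the VM config above.
config_text = """\
cores: 52
sockets: 2
numa: 1
"""


def parse_config(text):
    """Split 'key: value' lines into a dict (hypothetical helper)."""
    cfg = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, value = line.split(":", 1)
        cfg[key.strip()] = value.strip()
    return cfg


cfg = parse_config(config_text)

# Assumption: 'cores' is counted per socket, so total vCPUs = cores * sockets.
vcpus = int(cfg["cores"]) * int(cfg["sockets"])

# Assumption: 2 physical CPUs x 26 cores x 2 threads (Hyper-Threading).
host_threads = 2 * 26 * 2

print(f"guest vCPUs:  {vcpus}")
print(f"host threads: {host_threads}")
```

Under those assumptions the VM is given as many vCPUs as the host has hardware threads, with the same two-socket layout and `numa: 1` exposing it to the guest.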