I'm just sharing my perf stats, whether or not they turn out to be useful.

It's hard for me to tell whether I'm "affected" (by the increased idle load or other perf problems).

I have no other WS2025 VM stats to compare against, and I can't simply test this on Intel.

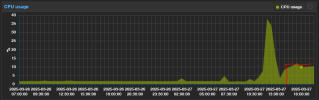

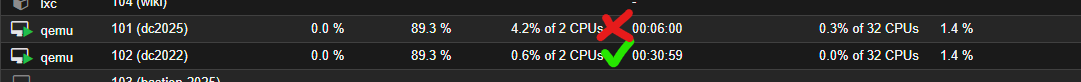

Testing setup: Proxmox 8.4.0 (running kernel: 6.8.12-15-pve), qemu-server 8.4.3, and four WS2025 VMs with the CPU type set to "EPYC-Milan-v2". The BIOS uses the "high performance" profile, and the host runs with scaling_governor=performance. The guest power plan is "High performance". With these VM and system settings, idle host-side CPU load is under 2% for the four WS2025 VMs, running on a 16C EPYC3 processor (16C/32vCPUs, 3.2GHz base clock).
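
For anyone who wants to confirm the host-side governor before comparing numbers, here is a minimal sketch. It assumes the standard Linux cpufreq sysfs layout (`/sys/devices/system/cpu/cpuN/cpufreq/scaling_governor`); the function name `governor_counts` is just an illustrative helper, not something from Proxmox.

```python
import glob
import os
from collections import Counter


def governor_counts(cpu_root="/sys/devices/system/cpu"):
    """Count how many CPUs use each cpufreq scaling governor.

    Assumes the usual sysfs layout; returns an empty Counter if the
    cpufreq files are not present (e.g. inside some containers).
    """
    pattern = os.path.join(cpu_root, "cpu[0-9]*", "cpufreq", "scaling_governor")
    counts = Counter()
    for path in glob.glob(pattern):
        with open(path) as f:
            counts[f.read().strip()] += 1
    return counts


if __name__ == "__main__":
    # Print one line per governor found on this host.
    for name, n in sorted(governor_counts().items()):
        print(f"{name}: {n} CPUs")
```

If every CPU reports `performance`, the host matches the setup described above.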

VM details: 4 VMs running, all WS2025 (6+6+8+8 = 28 vCPUs occupied, out of 32), all in an (almost) idle state. Technically, three of these VMs are "real" VMs, but there was no significant load on them at that moment. For that reason I also waited a while to catch the lowest possible values. In other (idle) measurements the MSR/HLT ratio was more often around 60/40 (with 16,000-18,000 MSR samples).

By switching VMs on and off, I found experimentally that each running (but idle) WS2025 vCPU accounts for around 550-600 MSR events here. What you see below is the total for 28 vCPUs across four WS2025 VMs (running, but all nearly idle):

| VM-EXIT | Samples | Samples% | Time% | Min Time | Max Time | Avg time |
|---|---|---|---|---|---|---|
| msr | 15323 | 68.74% | 32.99% | 1.84us | 47349.95us | 413.92us ( +- 5.23% ) |
| hlt | 6648 | 29.82% | 66.41% | 1.47us | 49426.35us | 1920.90us ( +- 0.94% ) |
| interrupt | 160 | 0.72% | 0.07% | 1.63us | 13357.33us | 88.83us ( +- 93.94% ) |
| write_cr8 | 61 | 0.27% | 0.04% | 0.97us | 7741.68us | 128.75us ( +- 98.55% ) |
| vintr | 33 | 0.15% | 0.02% | 1.29us | 4028.20us | 123.92us ( +- 98.45% ) |
| hypercall | 27 | 0.12% | 0.00% | 2.56us | 15.92us | 5.12us ( +- 10.49% ) |
| io | 20 | 0.09% | 0.28% | 6.13us | 23578.13us | 2675.70us ( +- 58.43% ) |
| npf | 20 | 0.09% | 0.19% | 4.59us | 12490.88us | 1788.86us ( +- 54.34% ) |
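
As a rough cross-check of the per-vCPU figure, the sample counts above can be divided by the number of running vCPUs. This is only a sketch: the numbers are copied by hand from the output above, and it assumes the exits are spread evenly across all 28 vCPUs, which this measurement does not actually prove.

```python
# Sample counts copied from the perf output above (one capture, all VMs idle).
samples = {
    "msr": 15323,
    "hlt": 6648,
    "interrupt": 160,
    "write_cr8": 61,
    "vintr": 33,
    "hypercall": 27,
    "io": 20,
    "npf": 20,
}

# vCPUs of the four running WS2025 VMs.
vcpus = 6 + 6 + 8 + 8

total = sum(samples.values())

# Average MSR exits per vCPU, assuming an even spread across vCPUs.
msr_per_vcpu = samples["msr"] / vcpus

# Share of all exits by type, to compare with the Samples% column.
msr_share = 100 * samples["msr"] / total
hlt_share = 100 * samples["hlt"] / total

print(f"vCPUs: {vcpus}, total exits: {total}")
print(f"MSR exits per vCPU: {msr_per_vcpu:.2f}")
print(f"MSR/HLT share: {msr_share:.2f}% / {hlt_share:.2f}%")
```

For this capture that works out to roughly 547 MSR exits per vCPU, at the low end of the 550-600 range, which fits with it being the lowest reading I could catch.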