We have a "lab" Ceph object storage cluster running on a 4-node multinode server, with the following components in each node:

Per Node:

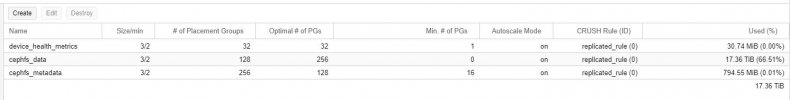

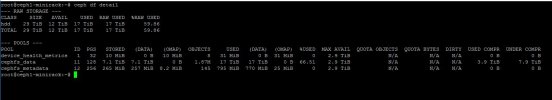

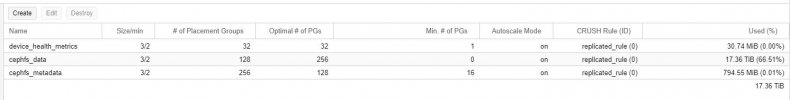

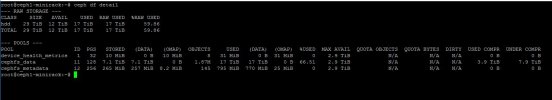

The Ceph cluster thus consists of 4 nodes x 2 OSDs (4 TB each) = 8 OSDs in total, with 32 TB gross capacity.
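For reference, the capacity arithmetic written out as a small shell sketch. The replication factor is not stated in this post; `replica_size=3` below is only an assumed value for illustration, so the usable figure is hypothetical.

```shell
#!/bin/sh
# Gross capacity from the figures given in the post.
nodes=4
osds_per_node=2
tb_per_osd=4

osd_count=$((nodes * osds_per_node))
gross_tb=$((osd_count * tb_per_osd))
echo "OSDs: $osd_count, gross: ${gross_tb} TB"

# ASSUMPTION: replicated pool with size=3 (not stated in the post).
replica_size=3
usable_tb=$(awk -v g="$gross_tb" -v r="$replica_size" 'BEGIN { printf "%.1f", g / r }')
echo "Usable at size=$replica_size (before compression and overhead): ~${usable_tb} TB"
```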

The cephfs_data pool uses LZ4 compression in aggressive mode.
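A minimal sketch of how the compression settings can be read back from the cluster. The helper name `show_pool_compression` is made up for this post; the pool name is the one mentioned above, and the function needs the `ceph` CLI and a reachable cluster.

```shell
#!/bin/sh
# Print the compression settings of a pool (defaults to cephfs_data).
# show_pool_compression is a hypothetical helper name, not a Ceph command.
show_pool_compression() {
    pool="${1:-cephfs_data}"
    if ! command -v ceph >/dev/null 2>&1; then
        echo "ceph CLI not found" >&2
        return 1
    fi
    ceph osd pool get "$pool" compression_algorithm
    ceph osd pool get "$pool" compression_mode
}
```

Calling `show_pool_compression cephfs_data` on one of the nodes should report the algorithm and mode that are set on the pool.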

The Ceph storage is accessed exclusively through a Debian 11 based "Samba-to-Ceph" VM running on a remote Proxmox node. The VM mounts CephFS at /ceph-data and shares it via Samba (as an Active Directory domain member) as file storage for an archive system.

root@samba-to-ceph:~# tail -n 6 /etc/fstab

### ### ###

#// Mini-Rack Ceph Object Storage

172.16.100.11:6789,172.16.100.12:6789,172.16.100.13:6789,172.16.100.14:6789:/ /ceph-data ceph name=admin,secret="SECRET",noatime,acl,_netdev 0 2

### ### ###

# EOF

root@samba-to-ceph:~#

Up to about 60% pool occupancy, SMB access performed well, averaging 120-130 MB/s when copying from a Windows VM client (via the Samba-to-Ceph VM).

However, overall Ceph performance seems to have plummeted after the last scrub!

!!! Write performance is now only around 200-300 Kbit/s !!!
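To put the two figures side by side, here is a quick conversion sketch. It assumes the slow rate really is in kilobits per second as written, and uses decimal units (1 MB = 1000 KB); the function names are just for illustration.

```shell
#!/bin/sh
# Convert a rate in Kbit/s to KB/s (8 bits per byte).
kbit_to_kbyte() {
    awk -v k="$1" 'BEGIN { printf "%.1f", k / 8 }'
}

# Slowdown factor between a rate in MB/s and a rate in Kbit/s.
slowdown() {
    awk -v mb="$1" -v kbit="$2" 'BEGIN { printf "%.0f", (mb * 1000) / (kbit / 8) }'
}

echo "200 Kbit/s = $(kbit_to_kbyte 200) KB/s"
echo "300 Kbit/s = $(kbit_to_kbyte 300) KB/s"
echo "slowdown: $(slowdown 120 300)x to $(slowdown 130 200)x"
```

Under those assumptions the write rate dropped by a factor of several thousand.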

RAM on the nodes is completely in use (approx. 50% of it is page cache).

Swap usage also keeps growing.
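A rough sketch of how these two numbers can be tracked on each node. `mem_summary` is a hypothetical helper; it only reads the standard `MemTotal`, `Cached`, `SwapTotal` and `SwapFree` fields from `/proc/meminfo` (or from a saved copy passed as the first argument).

```shell
#!/bin/sh
# Summarise page cache and swap usage from a meminfo-style file.
# mem_summary is a hypothetical helper name used for this post.
mem_summary() {
    file="${1:-/proc/meminfo}"
    awk '
        $1 == "MemTotal:"  { total = $2 }
        $1 == "Cached:"    { cached = $2 }
        $1 == "SwapTotal:" { stotal = $2 }
        $1 == "SwapFree:"  { sfree = $2 }
        END {
            # Share of RAM currently used as page cache.
            if (total > 0)
                printf "cached_pct=%.1f\n", 100 * cached / total
            # Share of swap in use; n/a when no swap is configured.
            if (stotal > 0)
                printf "swap_used_pct=%.1f\n", 100 * (stotal - sfree) / stotal
            else
                print "swap_used_pct=n/a"
        }
    ' "$file"
}
```

Running `mem_summary` periodically (for example from cron) would show whether the cache share and swap usage climb before the slowdown starts.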

... after much debugging ...

!!! Dropping the page cache and resetting swap on the Ceph nodes restored normal performance !!!

root@ceph1-minirack:~#

root@ceph1-minirack:~# sync; echo 1 > /proc/sys/vm/drop_caches

root@ceph1-minirack:~#

root@ceph1-minirack:~# swapoff -a

root@ceph1-minirack:~#

root@ceph1-minirack:~# swapon /dev/zram0 -p 10

root@ceph1-minirack:~#

root@ceph1-minirack:~# free

total used free shared buff/cache available

Mem: 24656724 11893748 12400936 71968 362040 12346804

Swap: 1048572 0 1048572

root@ceph1-minirack:~#
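The manual steps above can be wrapped in a small script so they are easy to repeat on every node. This is only a sketch of the same workaround, not a permanent fix; `run` and `reset_cache_and_swap` are hypothetical helper names, and the zram device and priority are the ones from the session above. It must be run as root, and `DRY_RUN=1` only prints the commands.

```shell
#!/bin/sh
# Execute a command line, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        sh -c "$*"
    fi
}

# Repeat the manual workaround: flush dirty pages, drop the page cache,
# then empty swap and re-enable it on the zram device.
reset_cache_and_swap() {
    zram_dev="${1:-/dev/zram0}"
    run "sync"
    run "echo 1 > /proc/sys/vm/drop_caches"   # 1 = drop page cache only
    run "swapoff -a"
    run "swapon $zram_dev -p 10"
}
```

For example, `DRY_RUN=1 reset_cache_and_swap` lists the four commands without touching the system.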

What is a permanent solution to this problem?

Which parameters need to be adjusted: in the Linux kernel, in Proxmox, or in Ceph itself?

Node details (per node):

- PVE Manager Version pve-manager/7.1-7/df5740ad

- Kernel Version Linux 5.13.19-2-pve #1 SMP PVE 5.13.19-4 (Mon, 29 Nov 2021 12:10:09 +0100)

- 24 x Intel(R) Xeon(R) CPU X5675 @ 3.07GHz (2 Sockets)

- RAM usage 48.34% (11.37 GiB of 23.51 GiB)

- SWAP usage 0.00% (0 B of 1024.00 MiB) - ZRAM

- / HD space 0.07% (2.58 GiB of 3.51 TiB) - ZFS (Root)

- SATA - 2x 4 TB Ceph OSDs

- Ceph Cluster Network - 1 Gbit - 9000 MTU

- Proxmox Cluster / Ceph Mon. - 1 Gbit - 1500 MTU
