Hey guys,

I'm an IT veteran but a Proxmox & Linux noob.

I'm configuring a set of Proxmox 6.3-2 servers for use in a relatively complex web hosting environment and I just wanted to get some confirmation that the way I'm configuring the networking is correct.

The network will use a bridged model, with public IPs assigned to the main (physical) firewall and ports forwarded to VMs on internal IPs as appropriate.

There will be VLANs in use on the network, including with the VMs.

There will be 3 clustered Proxmox servers.

Basically the IP network environment is:

10.0.10.x/24 - Public (Connectivity to the Internet via the main (physical) firewall)

10.0.20.x/24 - Management (Internal LAN / Backups, etc.)

10.0.30.x/24 - Cluster (Proxmox Cluster Traffic)

10.0.40.x/24 - Storage (Dedicated Host IP Connected Storage)
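
As a quick sanity check on the addressing plan above, here is a minimal Python sketch that confirms the four subnets don't overlap and reports which network a given host address belongs to. The dictionary labels, the helper names `find_overlaps` and `network_for`, and the sample address `10.0.30.11` are illustrative assumptions only, not part of the actual setup.

```python
import ipaddress
from itertools import combinations

# Subnet plan as listed above; the keys are illustrative labels only.
PLAN = {
    "public": "10.0.10.0/24",
    "management": "10.0.20.0/24",
    "cluster": "10.0.30.0/24",
    "storage": "10.0.40.0/24",
}


def find_overlaps(plan):
    """Return pairs of network labels whose address ranges overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in plan.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]


def network_for(address, plan):
    """Return the label of the network containing address, or None."""
    ip = ipaddress.ip_address(address)
    for name, cidr in plan.items():
        if ip in ipaddress.ip_network(cidr):
            return name
    return None


if __name__ == "__main__":
    # 10.0.30.11 is a hypothetical host address used only for illustration.
    print("Overlapping networks:", find_overlaps(PLAN))
    print("10.0.30.11 belongs to:", network_for("10.0.30.11", PLAN))
```

This only checks the addressing itself; it says nothing about whether the bonds, bridges or VLANs are configured correctly on the hosts.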

The existing physical switching setup is fully redundant with failover between switches.

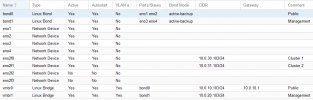

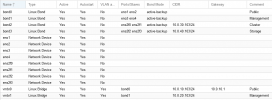

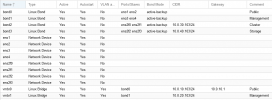

Based on this I have created bonded NIC pairs for all 4 IP subnets and bridges for both the Public and Management networks as follows:

So, how'd I do?

Anyone see any problems / mistakes?

I want to get this right before I configure clustering!

Any feedback greatly appreciated!

Thanks!
