We're currently testing ZFS replication in order to use it on our production servers.

I made a test cluster of 3 nested Proxmox VMs:

at1, at2 and at3. Each node has a single 16GB drive with a ZFS pool.I created a VM and a container (referred to as "VMs" from now on) on

at1, enabled replication to at2 and at3. And configured HA so that at1 is the preferred node for the VMs.Then I simulate node failure by shutting down

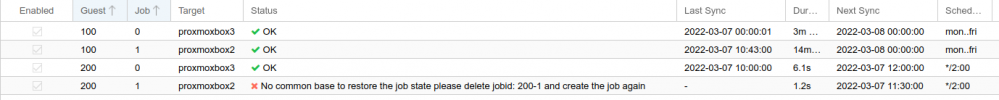

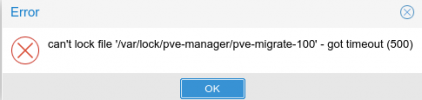

at1. The VMs migrate to at2, as expected. Replication also changes to at1 and at3, as expected. But often, about 1/2 of times, the replication job for at3 fails with the following error log:

Code:

2022-02-01 06:05:00 100-1: start replication job

2022-02-01 06:05:00 100-1: guest => VM 100, running => 90283

2022-02-01 06:05:00 100-1: volumes => zfs:vm-100-disk-0

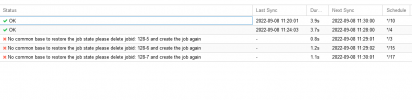

2022-02-01 06:05:02 100-1: end replication job with error: No common base to restore the job state

please delete jobid: 100-1 and create the job again

The only way to recover from it is to remove the volumes from at3 and run the job again, but that's obviously not practical for big volumes. Deleting and re-creating the job, as the error suggests, doesn't help.

And for some reason it only happens for at3; at2 is always OK. Interestingly, the same doesn't seem to happen to at2 when I change the HA priority from at1→at2→at3 to at1→at3→at2, but maybe I just didn't test it for long enough.

Any ideas on what's causing the issue would be great!
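
For anyone who wants to reproduce the check: the wording of the error ("No common base") suggests that the new source node and at3 no longer share a replication snapshot for the volume. Below is a rough Python sketch of how that could be verified by comparing the snapshot lists of two nodes. The helper names are made up for illustration, the dataset path `rpool/data/vm-100-disk-0` is an assumption (the log only shows the storage ID `zfs`), and the sample snapshot names are invented to show the shape of the comparison, not copied from the cluster.

```python
import subprocess


def snapshot_suffixes(zfs_list_output: str) -> list[str]:
    """Return the part after '@' for each line of `zfs list -H -o name` output."""
    suffixes = []
    for line in zfs_list_output.splitlines():
        name = line.strip()
        if "@" in name:
            suffixes.append(name.split("@", 1)[1])
    return suffixes


def common_snapshots(source_output: str, target_output: str) -> list[str]:
    """Snapshots present on both sides, in the order they appear on the source."""
    on_target = set(snapshot_suffixes(target_output))
    return [s for s in snapshot_suffixes(source_output) if s in on_target]


def list_snapshots(host: str, dataset: str) -> str:
    """Run `zfs list` on a node over ssh; host and dataset are placeholders."""
    cmd = ["ssh", host, "zfs", "list", "-H", "-r", "-t", "snapshot",
           "-o", "name", "-s", "creation", dataset]
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout


if __name__ == "__main__":
    # Invented sample listings (assumed naming scheme), one per node.
    at2 = "rpool/data/vm-100-disk-0@__replicate_100-1_1643695500__\n"
    at3 = "rpool/data/vm-100-disk-0@__replicate_100-0_1643695200__\n"
    # An empty result would mean the two nodes share no snapshot to send from.
    print(common_snapshots(at2, at3))
```

On a real cluster the two strings would come from something like `list_snapshots("at2", dataset)` and `list_snapshots("at3", dataset)`, run right after the failover and before the failing job starts. If the result is empty at that point, an incremental send to at3 has nothing to start from, which would match the error.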