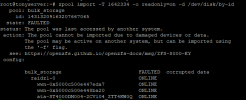

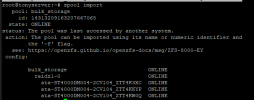

I know this is a pretty common question, but I haven't found a situation quite like mine. To cut it short: I had a ZFS pool on my home server, running Proxmox on bare metal, and wanted to move it to an OpenMediaVault (OMV) installation. That went fine, but then I removed the ZFS storage from Proxmox, not realizing I had put the OS drive of the OMV installation on that same ZFS pool. I then attempted to export the pool, created a whole new OMV installation, and am now unable to reimport it. The pool itself seems fine and healthy, and all disks show as online; it just refuses to import. Since this is my home setup and I haven't got the money for a proper secondary backup, this was all I had of my Plex collection. Any advice would be greatly appreciated.

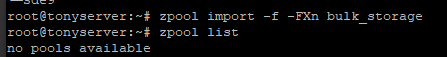

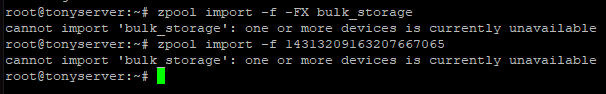

My current plan is to import the pool read-only (if that works), copy the data to an external drive I have, and then recreate the pool. I'd prefer not to have to do that, but I understand I've screwed myself as it is. I attempted to import it read-only, and this is what came up.
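For reference, here is roughly what that read-only import and copy would look like, written as a small Python sketch that only builds and prints the commands by default. It's an assumption-laden sketch, not a tested recovery procedure: the pool name `tank`, the paths `/mnt/recovery` and `/mnt/external`, and the helper function names are hypothetical placeholders, not my real setup. It uses `zpool import -o readonly=on` (with `-R` for an alternate mount root and optional `-f` to force) and `rsync -aHAX` for the copy.

```python
import shlex
import subprocess
from typing import List


def readonly_import_cmd(pool: str, altroot: str = "/mnt/recovery", force: bool = False) -> List[str]:
    # Hypothetical helper: builds a `zpool import` that never writes to the pool.
    # -o readonly=on : import the pool read-only
    # -R altroot     : mount datasets under altroot instead of their usual mountpoints
    # -f (optional)  : force import if the pool looks like it is in use by another system
    cmd = ["zpool", "import", "-o", "readonly=on", "-R", altroot]
    if force:
        cmd.append("-f")
    cmd.append(pool)
    return cmd


def copy_cmd(src: str, dest: str) -> List[str]:
    # Hypothetical helper: rsync in archive mode, keeping hard links (-H), ACLs (-A)
    # and extended attributes (-X). The trailing slash on src copies its *contents*.
    return ["rsync", "-aHAX", "--info=progress2", src.rstrip("/") + "/", dest]


def run(cmd: List[str], dry_run: bool = True) -> None:
    # Always print the exact command; only execute it when dry_run is False.
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Placeholder names: replace "tank" and the paths with the real pool and mounts.
    run(readonly_import_cmd("tank"))
    run(copy_cmd("/mnt/recovery/tank", "/mnt/external/tank-backup"))
```

Keeping it as a dry run means the commands can be checked by eye before anything touches the disks.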