Hello,


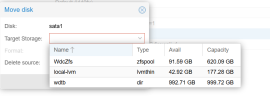

I have a very peculiar case of which I did not find any solution elswhere. I have a Proxmox VE 8.2.4 running on my homelab server. I have a 256 SSD as my boot drive and one 620GB HDD for some of my data drive. The HDD is configured as a zfs pool (ID:

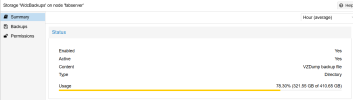

WdcZfs) with the entire 620GB for its size. Image:

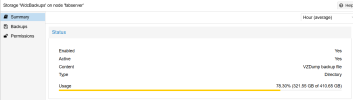

I have mounted the ZFS pool at /mnt/WdcZfs and imported it into Proxmox under the name WdcBackups.

But, as you may have already spotted, the mounted directory only shows a size of 410GB, compared to the 620GB of my ZFS pool. I have tried unmounting and remounting it, with no luck. Until a couple of weeks ago it was working fine with the full 620GB, but recently it suddenly shrank to 410GB. The command outputs are given below for reference.
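A quick sanity check of the figures in the outputs below, written as a small shell sketch. It rests on one assumption: that for a ZFS dataset, df reports Size as that dataset's own REFER plus AVAIL, so space held by child zvols (the vm-* disks) is not part of it. The function names are hypothetical helpers, and the values are copied by hand from the `df -h` and `zfs list` outputs (in GiB).

```bash
#!/usr/bin/env bash
# Sanity check of the WdcZfs numbers posted below (values in GiB,
# copied by hand from `df -h` and `zfs list`).
# Assumption: for a ZFS dataset, df reports Size = the dataset's own REFER
# plus AVAIL, so space held by child zvols (the VM disks) is not included.

# Hypothetical helper: expected df "Size" from a dataset's REFER and AVAIL.
expected_df_size() {
  awk -v r="$1" -v a="$2" 'BEGIN { printf "%.0f\n", r + a }'
}

# Hypothetical helper: convert binary GiB (what `df -h` prints as "G")
# to decimal GB.
gib_to_gb() {
  awk -v g="$1" 'BEGIN { printf "%.0f\n", g * 1073741824 / 1000000000 }'
}

# Hypothetical helper: total USED of the child datasets/zvols.
sum_children() {
  printf '%s\n' "$@" | awk '{ s += $1 } END { printf "%.0f\n", s }'
}

expected_df_size 299 83.0           # REFER + AVAIL of WdcZfs, prints 382 (df shows 383G; inputs are rounded)
gib_to_gb 383                       # df's 383G in decimal units, prints 411
sum_children 130 0.003 65.0 0.006   # USED of the four vm-* zvols (3M/6M taken as ~0.003/0.006 GiB), prints 195
```

If that assumption holds, the roughly 411GB result lines up with the ~410GB shown for the mount, and the 195G held by the VM disks (matching `usedbychildren`) counts towards the pool's USED of 495G but not towards the df Size of /mnt/WdcZfs. On the host itself, the live byte values can be read with `zfs get -Hp -o value referenced,available WdcZfs`, and `zfs list -o space -r WdcZfs` breaks USED down per dataset into USEDDS, USEDREFRESERV and USEDCHILD.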

#mount:

```text
sysfs on /sys type sysfs (rw,nosuid,nodev,noexec,relatime)
proc on /proc type proc (rw,relatime)
udev on /dev type devtmpfs (rw,nosuid,relatime,size=16346088k,nr_inodes=4086522,mode=755,inode64)
devpts on /dev/pts type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=000)
tmpfs on /run type tmpfs (rw,nosuid,nodev,noexec,relatime,size=3275880k,mode=755,inode64)
/dev/mapper/pve-root on / type ext4 (rw,relatime,errors=remount-ro)
securityfs on /sys/kernel/security type securityfs (rw,nosuid,nodev,noexec,relatime)
tmpfs on /dev/shm type tmpfs (rw,nosuid,nodev,inode64)
tmpfs on /run/lock type tmpfs (rw,nosuid,nodev,noexec,relatime,size=5120k,inode64)
cgroup2 on /sys/fs/cgroup type cgroup2 (rw,nosuid,nodev,noexec,relatime)
pstore on /sys/fs/pstore type pstore (rw,nosuid,nodev,noexec,relatime)
efivarfs on /sys/firmware/efi/efivars type efivarfs (rw,nosuid,nodev,noexec,relatime)
bpf on /sys/fs/bpf type bpf (rw,nosuid,nodev,noexec,relatime,mode=700)
systemd-1 on /proc/sys/fs/binfmt_misc type autofs (rw,relatime,fd=30,pgrp=1,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=6390)
mqueue on /dev/mqueue type mqueue (rw,nosuid,nodev,noexec,relatime)
hugetlbfs on /dev/hugepages type hugetlbfs (rw,relatime,pagesize=2M)
debugfs on /sys/kernel/debug type debugfs (rw,nosuid,nodev,noexec,relatime)
tracefs on /sys/kernel/tracing type tracefs (rw,nosuid,nodev,noexec,relatime)
fusectl on /sys/fs/fuse/connections type fusectl (rw,nosuid,nodev,noexec,relatime)
configfs on /sys/kernel/config type configfs (rw,nosuid,nodev,noexec,relatime)
ramfs on /run/credentials/systemd-sysusers.service type ramfs (ro,nosuid,nodev,noexec,relatime,mode=700)
ramfs on /run/credentials/systemd-tmpfiles-setup-dev.service type ramfs (ro,nosuid,nodev,noexec,relatime,mode=700)
/dev/sdb2 on /boot/efi type vfat (rw,relatime,fmask=0022,dmask=0022,codepage=437,iocharset=iso8859-1,shortname=mixed,errors=remount-ro)
ramfs on /run/credentials/systemd-sysctl.service type ramfs (ro,nosuid,nodev,noexec,relatime,mode=700)
/dev/sdc1 on /mnt/pve/wdtb type xfs (rw,relatime,attr2,inode64,logbufs=8,logbsize=32k,noquota)
ramfs on /run/credentials/systemd-tmpfiles-setup.service type ramfs (ro,nosuid,nodev,noexec,relatime,mode=700)
binfmt_misc on /proc/sys/fs/binfmt_misc type binfmt_misc (rw,nosuid,nodev,noexec,relatime)
sunrpc on /run/rpc_pipefs type rpc_pipefs (rw,relatime)
lxcfs on /var/lib/lxcfs type fuse.lxcfs (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)
/dev/fuse on /etc/pve type fuse (rw,nosuid,nodev,relatime,user_id=0,group_id=0,default_permissions,allow_other)
overlay on /var/lib/docker/overlay2/55b6361f85416a93cd686ddedd9eb9e74da30fe2d0aa8aff72832feef36fa86d/merged type overlay (rw,relatime,lowerdir=/var/lib/docker/overlay2/l/LKTT3D73OTH2OKLJJFCENKGRW5:/var/lib/docker/overlay2/l/AQ326LCWGYDID2S6MWNW6UOWXJ:/var/lib/docker/overlay2/l/IJBYZ3WNHMGDUU4WSG2YGUQUJN:/var/lib/docker/overlay2/l/4B2WXY7UL3EAPVKMGSB3XEVANK:/var/lib/docker/overlay2/l/RR5A3KKFN4QLTGBXZBHRW6OI7Q:/var/lib/docker/overlay2/l/4NRNMFJCEAZRU6PQGGR5XQVXWR:/var/lib/docker/overlay2/l/NDPVSQRXRGOC6AH54UENYGTPB7:/var/lib/docker/overlay2/l/Z5H56NBJJ3LTNJGWSDHMQHMWP4:/var/lib/docker/overlay2/l/ZN65KGQNUTJ4YVGWBK324V6KPB:/var/lib/docker/overlay2/l/Y4UGLCSEKLZ2YBQJWLWFMWHX7A,upperdir=/var/lib/docker/overlay2/55b6361f85416a93cd686ddedd9eb9e74da30fe2d0aa8aff72832feef36fa86d/diff,workdir=/var/lib/docker/overlay2/55b6361f85416a93cd686ddedd9eb9e74da30fe2d0aa8aff72832feef36fa86d/work,nouserxattr)
nsfs on /run/docker/netns/f7fb68638292 type nsfs (rw)
tmpfs on /run/user/0 type tmpfs (rw,nosuid,nodev,relatime,size=3275876k,nr_inodes=818969,mode=700,inode64)
WdcZfs on /mnt/WdcZfs type zfs (rw,relatime,xattr,noacl,casesensitive)
```

# df -h

```text
Filesystem            Size  Used Avail Use% Mounted on
udev                   16G     0   16G   0% /dev
tmpfs                 3.2G  1.4M  3.2G   1% /run
/dev/mapper/pve-root   44G   31G   12G  74% /
tmpfs                  16G   34M   16G   1% /dev/shm
tmpfs                 5.0M     0  5.0M   0% /run/lock
efivarfs              128K   64K   60K  52% /sys/firmware/efi/efivars
/dev/sdb2            1022M   12M 1011M   2% /boot/efi
/dev/sdc1             932G  6.6G  925G   1% /mnt/pve/wdtb
/dev/fuse             128M   24K  128M   1% /etc/pve
overlay                44G   31G   12G  74% /var/lib/docker/overlay2/55b6361f85416a93cd686ddedd9eb9e74da30fe2d0aa8aff72832feef36fa86d/merged
tmpfs                 3.2G     0  3.2G   0% /run/user/0
WdcZfs                383G  300G   83G  79% /mnt/WdcZfs
```

# zfs list

```text
NAME                  USED AVAIL REFER  MOUNTPOINT
WdcZfs                495G 83.0G  299G  /mnt/WdcZfs
WdcZfs/vm-101-disk-0  130G  167G 45.6G  -
WdcZfs/vm-104-disk-0    3M 83.0G   92K  -
WdcZfs/vm-104-disk-1 65.0G  139G 9.13G  -
WdcZfs/vm-104-disk-2    6M 83.0G   64K  -
```

# zpool list

```text
NAME     SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
WdcZfs   596G   354G   242G        -         -     0%    59%  1.00x    ONLINE  -
```

# zpool status

```text
  pool: WdcZfs
 state: ONLINE
  scan: scrub repaired 0B in 01:51:35 with 0 errors on Sun Aug 11 02:15:36 2024
config:

        NAME                      STATE     READ WRITE CKSUM
        WdcZfs                    ONLINE       0     0     0
          wwn-0x50014ee657db94a2  ONLINE       0     0     0

errors: No known data errors
```

# zfs get all WdcZfs

```text
NAME    PROPERTY              VALUE                  SOURCE
WdcZfs  type                  filesystem             -
WdcZfs  creation              Fri Feb 16 19:56 2024  -
WdcZfs  used                  495G                   -
WdcZfs  available             83.0G                  -
WdcZfs  referenced            299G                   -
WdcZfs  compressratio         1.02x                  -
WdcZfs  mounted               yes                    -
WdcZfs  quota                 none                   default
WdcZfs  reservation           none                   default
WdcZfs  recordsize            128K                   default
WdcZfs  mountpoint            /mnt/WdcZfs            local
WdcZfs  sharenfs              off                    default
WdcZfs  checksum              on                     default
WdcZfs  compression           on                     local
WdcZfs  atime                 on                     default
WdcZfs  devices               on                     default
WdcZfs  exec                  on                     default
WdcZfs  setuid                on                     default
WdcZfs  readonly              off                    default
WdcZfs  zoned                 off                    default
WdcZfs  snapdir               hidden                 default
WdcZfs  aclmode               discard                default
WdcZfs  aclinherit            restricted             default
WdcZfs  createtxg             1                      -
WdcZfs  canmount              on                     default
WdcZfs  xattr                 on                     default
WdcZfs  copies                1                      default
WdcZfs  version               5                      -
WdcZfs  utf8only              off                    -
WdcZfs  normalization         none                   -
WdcZfs  casesensitivity       sensitive              -
WdcZfs  vscan                 off                    default
WdcZfs  nbmand                off                    default
WdcZfs  sharesmb              off                    default
WdcZfs  refquota              none                   default
WdcZfs  refreservation        none                   default
WdcZfs  guid                  15889226908735833203   -
WdcZfs  primarycache          all                    default
WdcZfs  secondarycache        all                    default
WdcZfs  usedbysnapshots       0B                     -
WdcZfs  usedbydataset         299G                   -
WdcZfs  usedbychildren        195G                   -
WdcZfs  usedbyrefreservation  0B                     -
WdcZfs  logbias               latency                default
WdcZfs  objsetid              54                     -
WdcZfs  dedup                 off                    default
WdcZfs  mlslabel              none                   default
WdcZfs  sync                  standard               default
WdcZfs  dnodesize             legacy                 default
WdcZfs  refcompressratio      1.00x                  -
WdcZfs  written               299G                   -
WdcZfs  logicalused           362G                   -
WdcZfs  logicalreferenced     299G                   -
WdcZfs  volmode               default                default
WdcZfs  filesystem_limit      none                   default
WdcZfs  snapshot_limit        none                   default
WdcZfs  filesystem_count      none                   default
WdcZfs  snapshot_count        none                   default
WdcZfs  snapdev               hidden                 default
WdcZfs  acltype               off                    default
WdcZfs  context               none                   default
WdcZfs  fscontext             none                   default
WdcZfs  defcontext            none                   default
WdcZfs  rootcontext           none                   default
WdcZfs  relatime              on                     default
WdcZfs  redundant_metadata    all                    default
WdcZfs  overlay               on                     default
WdcZfs  encryption            off                    default
WdcZfs  keylocation           none                   default
WdcZfs  keyformat             none                   default
WdcZfs  pbkdf2iters           0                      default
WdcZfs  special_small_blocks  0                      default
WdcZfs  prefetch              all                    default
```

Thanks in advance for all the help!