Hi,

I am running a pfSense 2.4 (FreeBSD-based) virtual machine on KVM, and Proxmox reports a different RAM usage than the VM itself.

Proxmox shows more than 90% RAM usage (~15 GB of 16 GB):

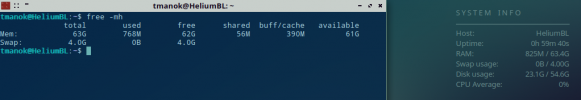

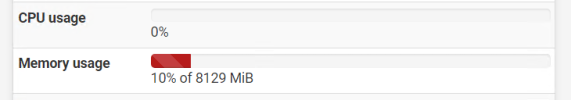

but both pfSense and FreeBSD report only about 2% usage.

However, the virtual machine is throwing "Cannot allocate memory" errors, so I suspect there is a problem with how Proxmox allocates memory to the FreeBSD guest.
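A minimal Python sketch, assuming the Proxmox VM config format used in the 301.conf posted below: `key: value` lines, `#` comment lines, and `[SECTION]` headers for pending changes and snapshots. It parses a short excerpt of that file to show which section each memory-related setting lives in, and puts the two reported usage figures side by side. `parse_sections` and `usage_percent` are hypothetical helpers for illustration, and the 15 GB and 2% figures are just the values quoted above, treated as GiB.

```python
# Sketch only. Assumption: Proxmox VM configs are "key: value" lines, with
# "[SECTION]" headers for pending changes / snapshots and "#" comments.
# The excerpt is copied from the 301.conf shown in this post.
CONFIG_EXCERPT = """\
#Firewall primario (master)
memory: 16384
name: fw1
[PENDING]
balloon: 0
[Before_Upgrade]
#Before upgrade to 2.4.1
memory: 16384
"""


def parse_sections(text):
    """Split a config into {section: {key: value}}; top-level keys go to 'current'."""
    sections = {"current": {}}
    name = "current"
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment/description lines
        if line.startswith("[") and line.endswith("]"):
            name = line[1:-1]  # e.g. "PENDING" or a snapshot name
            sections.setdefault(name, {})
            continue
        # Split on the first colon only, so values like MAC addresses survive.
        key, _, value = line.partition(":")
        sections[name][key.strip()] = value.strip()
    return sections


def usage_percent(used_gib, total_gib):
    """Percentage of total_gib that used_gib represents."""
    return 100.0 * used_gib / total_gib


sections = parse_sections(CONFIG_EXCERPT)
total_gib = int(sections["current"]["memory"]) / 1024  # "memory" is in MiB
print("configured RAM:", total_gib, "GiB")
print("balloon (current):", sections["current"].get("balloon", "not set"))
print("balloon (pending):", sections["PENDING"].get("balloon", "not set"))
print(f"host view: {usage_percent(15, total_gib):.1f}% used")  # ~15 GB reported by Proxmox
print(f"guest view: {0.02 * total_gib:.2f} GiB used")  # 2% reported inside pfSense
```

Run as-is, it shows that `balloon: 0` appears only under `[PENDING]`, which (assuming Proxmox's usual handling of pending changes) means it is a queued change rather than a setting of the running VM.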
My PVE version:

```
root@node03:/# pveversion -v
proxmox-ve: 5.1-38 (running kernel: 4.13.13-5-pve)
pve-manager: 5.1-43 (running version: 5.1-43/bdb08029)
pve-kernel-4.13.4-1-pve: 4.13.4-26
pve-kernel-4.13.13-2-pve: 4.13.13-33
pve-kernel-4.13.13-5-pve: 4.13.13-38
pve-kernel-4.13.13-3-pve: 4.13.13-34
libpve-http-server-perl: 2.0-8
lvm2: 2.02.168-pve6
corosync: 2.4.2-pve3
libqb0: 1.0.1-1
pve-cluster: 5.0-19
qemu-server: 5.0-20
pve-firmware: 2.0-3
libpve-common-perl: 5.0-25
libpve-guest-common-perl: 2.0-14
libpve-access-control: 5.0-7
libpve-storage-perl: 5.0-17
pve-libspice-server1: 0.12.8-3
vncterm: 1.5-3
pve-docs: 5.1-16
pve-qemu-kvm: 2.9.1-6
pve-container: 2.0-18
pve-firewall: 3.0-5
pve-ha-manager: 2.0-4
ksm-control-daemon: 1.2-2
glusterfs-client: 3.8.8-1
lxc-pve: 2.1.1-2
lxcfs: 2.0.8-1
criu: 2.11.1-1~bpo90
novnc-pve: 0.6-4
smartmontools: 6.5+svn4324-1
zfsutils-linux: 0.7.4-pve2~bpo9
```

My VM configuration:

```
root@node03:/# cat /etc/pve/qemu-server/301.conf
#Firewall primario (master)
bootdisk: virtio0
cores: 2
cpu: qemu64
memory: 16384
name: fw1
net0: virtio=AE:07:1B:36:63:36,bridge=vmbr0
net1: virtio=1E:DC:6A:BF:15:74,bridge=vmbr1,tag=11
net2: virtio=FA:2D:27:2B:02:1C,bridge=vmbr1,tag=12
net3: virtio=9E:09:23:ED:37:08,bridge=vmbr1,tag=14
net4: virtio=76:8B:4E:AF:43:1A,bridge=vmbr1,tag=235
net5: virtio=46:BE:88:AD:6F:91,bridge=vmbr1,tag=1988
net6: virtio=AA:4C:A9:70:8C:63,bridge=vmbr1,tag=3297
net7: virtio=5A:90:45:0B:AF:CB,bridge=vmbr1,tag=13
numa: 0
onboot: 1
ostype: other
parent: Before_Upgrade
smbios1: uuid=a0a1af13-55ad-43a9-afa5-770c106f530b
sockets: 1
virtio0: local-lvm:vm-301-disk-1,size=32G

[PENDING]
balloon: 0

[Before_Upgrade]
#Before upgrade to 2.4.1
bootdisk: virtio0
cores: 2
cpu: qemu64
machine: pc-i440fx-2.9
memory: 16384
name: fw1
net0: virtio=AE:07:1B:36:63:36,bridge=vmbr0
net1: virtio=1E:DC:6A:BF:15:74,bridge=vmbr1,tag=11
net2: virtio=FA:2D:27:2B:02:1C,bridge=vmbr1,tag=12
net3: virtio=9E:09:23:ED:37:08,bridge=vmbr1,tag=14
net4: virtio=76:8B:4E:AF:43:1A,bridge=vmbr1,tag=235
net5: virtio=46:BE:88:AD:6F:91,bridge=vmbr1,tag=1988
net6: virtio=AA:4C:A9:70:8C:63,bridge=vmbr1,tag=3297
net7: virtio=5A:90:45:0B:AF:CB,bridge=vmbr1,tag=13
numa: 0
onboot: 1
ostype: other
smbios1: uuid=a0a1af13-55ad-43a9-afa5-770c106f530b
snaptime: 1518724974
sockets: 1
virtio0: local-lvm:vm-301-disk-1,size=32G
vmstate: local-lvm:vm-301-state-Before_Upgrade
```

What's wrong?

Could you help me please?

Thank you very much!