i have this bizarre situation where using ZFS on a Dell R730xd makes disk performance so bad the system is completely useless. i have spent most of an entire week trying to troubleshoot it in every manner possible. it simply. doesn't. budge.

system topology:

Dell R730xd

it has two 2.5" sata ports in the back connected directly to the motherboard

12x 3.5" SAS drives on the front, connected to the system through the backplane. i've tried both a PERC in HBA mode and an actual Dell HBA

so firstly, no matter which of these setups i use, both windows and proxmox on EXT4 will perfectly saturate the SATA bus in the back, pegging out at 450+ MB/s on the metal and about 250 MB/s in a virtual machine. (that's for writes. reads in a vm are also 450 MB/s.)

as soon as i switch to ZFS, things completely fall apart. i have tried an ashift of 9, 12, and 13. i have tried turning compression off and on. i have tried an ARC size from 64MB to 16GB. i have tried zfs_dirty_data_max / zfs_dirty_data_max_max values from 0 to 32GB. absolutely NOTHING helps.
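for anyone trying to reproduce this, here's a rough sketch of how the tunables above could be dumped so the exact values can be posted alongside benchmark numbers. it assumes OpenZFS on Linux, where module parameters show up under `/sys/module/zfs/parameters`. the function names are made up for illustration, and the ashift helper just spells out that ashift is a power-of-two exponent.

```python
from pathlib import Path

# Standard location of OpenZFS module parameters on Linux (assumption: ZFS module is loaded).
ZFS_PARAM_DIR = Path("/sys/module/zfs/parameters")

# The tunables mentioned in the post.
TUNABLES = ("zfs_arc_max", "zfs_dirty_data_max", "zfs_dirty_data_max_max")


def read_zfs_tunables(param_dir=ZFS_PARAM_DIR, names=TUNABLES):
    """Return {name: integer value}, with None for any file that is missing or unreadable."""
    values = {}
    for name in names:
        try:
            values[name] = int((Path(param_dir) / name).read_text().strip())
        except (OSError, ValueError):
            values[name] = None
    return values


def ashift_to_sector_bytes(ashift):
    """ashift is a power-of-two exponent: 9 -> 512, 12 -> 4096, 13 -> 8192."""
    return 1 << ashift


if __name__ == "__main__":
    for name, value in read_zfs_tunables().items():
        print(f"{name} = {value}")
    for ashift in (9, 12, 13):
        print(f"ashift={ashift} -> {ashift_to_sector_bytes(ashift)}-byte sectors")
```

the values are in bytes, so they're easy to compare against the sizes listed above.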

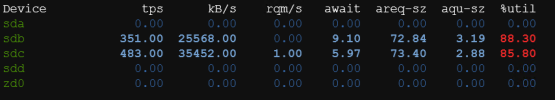

symptoms: using zfs, the 2.5" sata drives will completely peg out at about 60 MB/s, and the 12k SAS drives on the front backplane will peg out at 5 MB/s (5,000 KB/s). this is on sequential 1M writes using 20 threads.
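the post doesn't say which benchmark tool produced these numbers, so as an assumption, here's a minimal sketch of an equivalent workload expressed as an fio command (sequential writes, 1M blocks, 20 jobs). the target directory `/tank/fio-test` and the 4G per-job size are hypothetical placeholders. `--end_fsync=1` is included so the time spent flushing buffered data counts toward the result instead of being hidden by RAM.

```python
import shlex


def build_fio_seq_write(target_dir, threads=20, block_size="1M", size_per_job="4G"):
    """Build an fio argument list for a sequential-write test like the one described."""
    return [
        "fio",
        "--name=seqwrite",
        f"--directory={target_dir}",   # hypothetical dataset mountpoint
        "--rw=write",                  # sequential writes
        f"--bs={block_size}",          # 1M blocks, as in the post
        f"--numjobs={threads}",        # 20 parallel jobs, as in the post
        f"--size={size_per_job}",      # per-job file size (assumption)
        "--ioengine=psync",
        "--end_fsync=1",               # fsync at the end so flush time is measured
        "--group_reporting",           # one combined result instead of 20
    ]


if __name__ == "__main__":
    # Print the command rather than running it, so it can be reviewed first.
    print(shlex.join(build_fio_seq_write("/tank/fio-test")))
```

running the same command on the EXT4 install and on the ZFS install would at least make the two sets of numbers directly comparable.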

with a large zfs_dirty_data_max you can see the RAM just eating those disk performance tests, and they will shoot up to 16-17 GB/s (16,000-17,000 MB/s). as soon as the dirty data pool gets full though, the vm will essentially lock up as ZFS slowly writes the now massive pool of dirty data to the ssd's at 60 MB/s. after a bunch of minutes, the vm will come to and disk utilization will finally move off 100%.
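the "bunch of minutes" lines up with simple arithmetic. a quick sketch, assuming the dirty data buffer fills completely, that "GB" for the buffer means GiB, and that the drives sustain the 60 MB/s seen above:

```python
GIB = 1024**3   # buffer sizes treated as binary gigabytes (assumption)
MB = 1000**2    # throughput in decimal megabytes per second


def drain_seconds(dirty_bytes, flush_bytes_per_sec):
    """Time needed to write out a full dirty-data buffer at a steady flush rate."""
    return dirty_bytes / flush_bytes_per_sec


if __name__ == "__main__":
    for dirty_gib in (4, 16, 32):
        secs = drain_seconds(dirty_gib * GIB, 60 * MB)
        print(f"{dirty_gib} GiB dirty at 60 MB/s -> {secs / 60:.1f} min")
```

at the 32GB setting that works out to roughly nine and a half minutes of flushing, so the lockup is the buffer draining at disk speed, not a separate problem.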

what the heck is this. it has eaten most of a week for me. and people are installing ZFS on much worse systems than an r730xd with 256gb of ram and 2x xeon e5-2678 v3 CPUs.

also, i'm using proxmox ve 8.2 for these test installs.
