Hi, for a few days now I've been having some pretty troublesome problems with my PBS (Proxmox Backup Server).

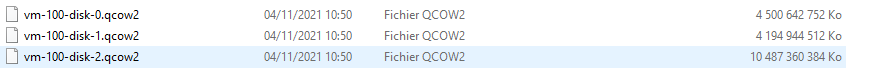


I increased the size of my datastore from 4 TB to 10 TB, and since then a lot of my verify tasks have been failing.

Here is one of the many failed task logs I'm receiving:

```
2021-11-03T02:13:50+01:00: Automatically verifying newly added snapshot
2021-11-03T02:13:50+01:00: verify Filer3:ct/102/2021-11-03T01:00:02Z
2021-11-03T02:13:50+01:00: check pct.conf.blob
2021-11-03T02:13:50+01:00: check root.pxar.didx
2021-11-03T02:14:25+01:00: can't verify chunk, load failed - store 'Filer3', unable to load chunk '02afb848a1352d195663d5f05ddf22dfe7acb8e0a80eab18fbdb1d89c8547c2e' - unable to parse raw blob - wrong magic
2021-11-03T02:14:25+01:00: corrupted chunk renamed to "/mnt/Filer3/.chunks/02af/02afb848a1352d195663d5f05ddf22dfe7acb8e0a80eab18fbdb1d89c8547c2e.0.bad"
2021-11-03T02:25:47+01:00: can't verify chunk, load failed - store 'Filer3', unable to load chunk '6cc08edc6c11418eefdd0b7ddd78c80dba12137889b9ec9d23c4b6a96e5ddf22' - Data blob has wrong CRC checksum.
2021-11-03T02:25:47+01:00: corrupted chunk renamed to "/mnt/Filer3/.chunks/6cc0/6cc08edc6c11418eefdd0b7ddd78c80dba12137889b9ec9d23c4b6a96e5ddf22.0.bad"
2021-11-03T02:34:21+01:00: can't verify chunk, load failed - store 'Filer3', unable to load chunk 'bcca078640b1a93c2b2ec1b2d86f225d259ffa06d0ad4b584f011a6580d447be' - Data blob has wrong CRC checksum.
2021-11-03T02:34:21+01:00: corrupted chunk renamed to "/mnt/Filer3/.chunks/bcca/bcca078640b1a93c2b2ec1b2d86f225d259ffa06d0ad4b584f011a6580d447be.0.bad"
2021-11-03T02:41:32+01:00: verified 48621.34/85962.92 MiB in 1662.36 seconds, speed 29.25/51.71 MiB/s (3 errors)
2021-11-03T02:41:32+01:00: verify Filer3:ct/102/2021-11-03T01:00:02Z/root.pxar.didx failed: chunks could not be verified
2021-11-03T02:41:32+01:00: check catalog.pcat1.didx
2021-11-03T02:41:33+01:00: verified 0.40/1.17 MiB in 0.15 seconds, speed 2.62/7.61 MiB/s (0 errors)
2021-11-03T02:41:33+01:00: TASK ERROR: verification failed - please check the log for details
```

It's a pretty serious problem for me, as it's blocking me from restoring certain VMs.
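The verify log above shows every chunk that fails to load being renamed in place, from `.chunks/<first four hex digits>/<digest>` to the same name with a `.0.bad` suffix. As a rough way to see how many chunks have been affected so far, here is a minimal Python sketch that lists those renamed files. It assumes the datastore root is `/mnt/Filer3` (as in the log) and that renamed chunks are always named `<digest>.<number>.bad`; that naming is inferred from the log lines, not from the PBS documentation, and `parse_bad_chunk_name` / `find_bad_chunks` are hypothetical helpers, not part of any Proxmox tool.

```python
from __future__ import annotations

import re
from pathlib import Path

# Assumed naming, inferred from the verify log:
# "<64 hex digest>.<counter>.bad", e.g. "02afb848...c8547c2e.0.bad"
BAD_CHUNK_RE = re.compile(r"^([0-9a-f]{64})\.(\d+)\.bad$")


def parse_bad_chunk_name(name: str) -> str | None:
    """Return the digest if `name` looks like a renamed corrupt chunk, else None."""
    match = BAD_CHUNK_RE.match(name)
    return match.group(1) if match else None


def find_bad_chunks(datastore: str) -> list[tuple[str, Path]]:
    """List (digest, path) for every renamed corrupt chunk under <datastore>/.chunks."""
    found = []
    chunk_root = Path(datastore) / ".chunks"
    # Chunks are grouped in prefix directories named after the first
    # four hex digits of the digest, e.g. .chunks/02af/02afb848...
    for prefix_dir in sorted(chunk_root.iterdir()):
        if not prefix_dir.is_dir():
            continue
        for entry in sorted(prefix_dir.iterdir()):
            digest = parse_bad_chunk_name(entry.name)
            if digest is not None:
                found.append((digest, entry))
    return found
```

On the PBS host this would be called as `find_bad_chunks("/mnt/Filer3")`; since it lists every prefix directory of the chunk store, it may take a while on a large datastore.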

```
Wiping dos signature on /dev/serv6-nvme/vm-1014-disk-0.
Logical volume "vm-1014-disk-0" created.
new volume ID is 'serv6-nvme:vm-1014-disk-0'
restore proxmox backup image: /usr/bin/pbs-restore --repository root@pam@10.10.0.25:Filer3 vm/1014/2021-11-03T08:15:02Z drive-ide0.img.fidx /dev/serv6-nvme/vm-1014-disk-0 --verbose --format raw
connecting to repository 'root@pam@10.10.0.25:Filer3'
open block backend for target '/dev/serv6-nvme/vm-1014-disk-0'
starting to restore snapshot 'vm/1014/2021-11-03T08:15:02Z'
download and verify backup index
progress 1% (read 603979776 bytes, zeroes = 15% (92274688 bytes), duration 17 sec)
progress 2% (read 1203765248 bytes, zeroes = 7% (92274688 bytes), duration 32 sec)
progress 3% (read 1807745024 bytes, zeroes = 5% (92274688 bytes), duration 48 sec)
progress 4% (read 2407530496 bytes, zeroes = 3% (92274688 bytes), duration 64 sec)
progress 5% (read 3007315968 bytes, zeroes = 3% (92274688 bytes), duration 76 sec)
progress 6% (read 3611295744 bytes, zeroes = 2% (92274688 bytes), duration 92 sec)
progress 7% (read 4211081216 bytes, zeroes = 2% (92274688 bytes), duration 105 sec)
progress 8% (read 4810866688 bytes, zeroes = 1% (92274688 bytes), duration 119 sec)
progress 9% (read 5414846464 bytes, zeroes = 1% (92274688 bytes), duration 138 sec)
progress 10% (read 6014631936 bytes, zeroes = 1% (92274688 bytes), duration 154 sec)
progress 11% (read 6614417408 bytes, zeroes = 1% (92274688 bytes), duration 170 sec)
progress 12% (read 7218397184 bytes, zeroes = 1% (92274688 bytes), duration 185 sec)
progress 13% (read 7818182656 bytes, zeroes = 1% (92274688 bytes), duration 200 sec)
progress 14% (read 8422162432 bytes, zeroes = 1% (92274688 bytes), duration 215 sec)
progress 15% (read 9021947904 bytes, zeroes = 1% (92274688 bytes), duration 226 sec)
progress 16% (read 9621733376 bytes, zeroes = 0% (92274688 bytes), duration 241 sec)
progress 17% (read 10225713152 bytes, zeroes = 0% (92274688 bytes), duration 257 sec)
progress 18% (read 10825498624 bytes, zeroes = 0% (92274688 bytes), duration 269 sec)
progress 19% (read 11425284096 bytes, zeroes = 3% (419430400 bytes), duration 275 sec)
progress 20% (read 12029263872 bytes, zeroes = 4% (549453824 bytes), duration 288 sec)
progress 21% (read 12629049344 bytes, zeroes = 4% (557842432 bytes), duration 303 sec)
progress 22% (read 13228834816 bytes, zeroes = 4% (566231040 bytes), duration 319 sec)
progress 23% (read 13832814592 bytes, zeroes = 4% (566231040 bytes), duration 336 sec)
progress 24% (read 14432600064 bytes, zeroes = 3% (570425344 bytes), duration 350 sec)
progress 25% (read 15032385536 bytes, zeroes = 3% (583008256 bytes), duration 366 sec)
progress 26% (read 15636365312 bytes, zeroes = 3% (583008256 bytes), duration 384 sec)
progress 27% (read 16236150784 bytes, zeroes = 3% (583008256 bytes), duration 400 sec)
progress 28% (read 16840130560 bytes, zeroes = 3% (583008256 bytes), duration 421 sec)
progress 29% (read 17439916032 bytes, zeroes = 3% (583008256 bytes), duration 437 sec)
progress 30% (read 18039701504 bytes, zeroes = 3% (583008256 bytes), duration 450 sec)
restore failed: reading file "/mnt/Filer3/.chunks/5cff/5cfffe3f1cac7c79282ed244bbb1791a687a0e70b3f9be8523d80a9fb37cc850" failed: No such file or directory (os error 2)
Logical volume "vm-1014-disk-0" successfully removed
temporary volume 'serv6-nvme:vm-1014-disk-0' sucessfuly removed
error before or during data restore, some or all disks were not completely restored. VM 1014 state is NOT cleaned up.
TASK ERROR: command '/usr/bin/pbs-restore --repository root@pam@10.10.0.25:Filer3 vm/1014/2021-11-03T08:15:02Z drive-ide0.img.fidx /dev/serv6-nvme/vm-1014-disk-0 --verbose --format raw' failed: exit code 25
```

I'm kind of lost, and I haven't found many threads related to this problem.
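The restore fails because the chunk file named in the error no longer exists (os error 2), while the verify log shows corrupted chunks being renamed with a `.0.bad` suffix. One way to check whether a missing chunk is one that a verify job renamed is the small Python sketch below. It assumes the `.chunks/<first four hex digits>/<digest>` layout visible in both logs and the `<digest>.<number>.bad` naming seen in the verify log; `chunk_path` and `chunk_status` are hypothetical helpers for illustration, not part of `pbs-restore` or any other Proxmox tool.

```python
from __future__ import annotations

from pathlib import Path

HEX_DIGITS = set("0123456789abcdef")


def chunk_path(datastore: str, digest: str) -> Path:
    """Map a chunk digest to its file, using the layout seen in the logs:
    <datastore>/.chunks/<first 4 hex digits>/<digest> (an assumption)."""
    if len(digest) != 64 or not set(digest) <= HEX_DIGITS:
        raise ValueError(f"expected a 64-character lowercase hex digest, got {digest!r}")
    return Path(datastore) / ".chunks" / digest[:4] / digest


def chunk_status(datastore: str, digest: str) -> str:
    """Say whether a chunk is present, only exists as a renamed '.N.bad' copy, or is gone."""
    path = chunk_path(datastore, digest)
    if path.is_file():
        return "present"
    # Renamed copies such as '<digest>.0.bad' (naming inferred from the verify log)
    renamed = sorted(p.name for p in path.parent.glob(f"{digest}.*.bad"))
    if renamed:
        return "renamed: " + ", ".join(renamed)
    return "missing"
```

For the chunk in the restore error, `chunk_status("/mnt/Filer3", "5cfffe3f1cac7c79282ed244bbb1791a687a0e70b3f9be8523d80a9fb37cc850")` would report which of the three cases applies on the PBS host.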

Thank you in advance.