I am hoping I provide enough info off the bat to give a good idea of what is going on. But I am a little lost and just have a lot of questions I guess. I will also do my best to update with what has been answered, and link or say what the answer/solution was.

Note: Node 3 is a little different as we were troubleshooting another issue and it was suggested by support folk.

1) We see speeds of only 350MB/s during backups. While currently there is about 5TB of VM storage used, it only takes about 10 minutes to backup what it needs. We would like to increase this if possible as that would theoretically increase the restore speed as well. [Sounds like this is due to the storage configuration in PBS. We will investigate this later.]

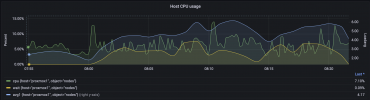

2) The main problem, is that during a backup, VMs start to suffer. Not very responsive, web servers not loading sites as fast, databases are slow, etc.

The setup:

We have 4 HP DL360p Gen9 servers: 3 PVE nodes and a PBS. Each node has 2 SSDs in a ZFS mirror; the PBS has 2 SSDs in a mirror for boot and 8 2.4TB SAS drives in RAID-Z2. Shared storage for the nodes is a Jetstor with 7 1.92TB SAS SSDs in RAID 10 with a hot spare. Each node and the PBS uses 1 of its 2 possible 10Gb connections to a switch for all traffic (I know it is suggested to separate the networks, we are looking into it). The Cisco switch has 16 10Gb ports, 8 of which are wirespeed (where we are plugged in), while the other 8 are 2:1 oversubscribed. The Jetstor has 4x 10Gb links, but is only using one currently (we are looking to increase this).

Node 1:

proxmox-ve: 7.3-1 (running kernel: 5.15.83-1-pve)

pve-manager: 7.3-4 (running version: 7.3-4/d69b70d4)

pve-kernel-helper: 7.3-3

pve-kernel-5.15: 7.3-1

pve-kernel-5.13: 7.1-9

pve-kernel-5.15.83-1-pve: 5.15.83-1

pve-kernel-5.15.74-1-pve: 5.15.74-1

pve-kernel-5.15.64-1-pve: 5.15.64-1

pve-kernel-5.13.19-6-pve: 5.13.19-15

pve-kernel-5.13.19-2-pve: 5.13.19-4

ceph-fuse: 15.2.15-pve1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.3

libproxmox-backup-qemu0: 1.3.1-1

libpve-access-control: 7.3-1

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.3-2

libpve-guest-common-perl: 4.2-3

libpve-http-server-perl: 4.1-5

libpve-storage-perl: 7.3-2

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.0-3

lxcfs: 4.0.12-pve1

novnc-pve: 1.3.0-3

proxmox-backup-client: 2.3.2-1

proxmox-backup-file-restore: 2.3.2-1

proxmox-mini-journalreader: 1.3-1

proxmox-offline-mirror-helper: 0.5.0-1

proxmox-widget-toolkit: 3.5.3

pve-cluster: 7.3-2

pve-container: 4.4-2

pve-docs: 7.3-1

pve-edk2-firmware: 3.20220526-1

pve-firewall: 4.2-7

pve-firmware: 3.6-3

pve-ha-manager: 3.5.1

pve-i18n: 2.8-2

pve-qemu-kvm: 7.1.0-4

pve-xtermjs: 4.16.0-1

qemu-server: 7.3-3

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+2

vncterm: 1.7-1

zfsutils-linux: 2.1.9-pve1

Node 2:

proxmox-ve: 7.3-1 (running kernel: 5.15.83-1-pve)

pve-manager: 7.3-4 (running version: 7.3-4/d69b70d4)

pve-kernel-helper: 7.3-3

pve-kernel-5.15: 7.3-1

pve-kernel-5.13: 7.1-9

pve-kernel-5.15.83-1-pve: 5.15.83-1

pve-kernel-5.15.74-1-pve: 5.15.74-1

pve-kernel-5.13.19-6-pve: 5.13.19-15

pve-kernel-5.13.19-2-pve: 5.13.19-4

ceph-fuse: 15.2.15-pve1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.3

libproxmox-backup-qemu0: 1.3.1-1

libpve-access-control: 7.3-1

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.3-2

libpve-guest-common-perl: 4.2-3

libpve-http-server-perl: 4.1-5

libpve-storage-perl: 7.3-2

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.0-3

lxcfs: 4.0.12-pve1

novnc-pve: 1.3.0-3

proxmox-backup-client: 2.3.2-1

proxmox-backup-file-restore: 2.3.2-1

proxmox-mini-journalreader: 1.3-1

proxmox-offline-mirror-helper: 0.5.0-1

proxmox-widget-toolkit: 3.5.3

pve-cluster: 7.3-2

pve-container: 4.4-2

pve-docs: 7.3-1

pve-edk2-firmware: 3.20220526-1

pve-firewall: 4.2-7

pve-firmware: 3.6-3

pve-ha-manager: 3.5.1

pve-i18n: 2.8-2

pve-qemu-kvm: 7.1.0-4

pve-xtermjs: 4.16.0-1

qemu-server: 7.3-3

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+2

vncterm: 1.7-1

zfsutils-linux: 2.1.9-pve1

Node 3:

proxmox-ve: 7.3-1 (running kernel: 5.19.17-2-pve)

pve-manager: 7.3-4 (running version: 7.3-4/d69b70d4)

pve-kernel-helper: 7.3-3

pve-kernel-5.15: 7.3-1

pve-kernel-5.19: 7.2-15

pve-kernel-5.13: 7.1-9

pve-kernel-5.19.17-2-pve: 5.19.17-2

pve-kernel-5.19.17-1-pve: 5.19.17-1

pve-kernel-5.15.83-1-pve: 5.15.83-1

pve-kernel-5.13.19-6-pve: 5.13.19-15

pve-kernel-5.13.19-2-pve: 5.13.19-4

ceph-fuse: 15.2.15-pve1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.3

libproxmox-backup-qemu0: 1.3.1-1

libpve-access-control: 7.3-1

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.3-2

libpve-guest-common-perl: 4.2-3

libpve-http-server-perl: 4.1-5

libpve-storage-perl: 7.3-2

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.0-3

lxcfs: 4.0.12-pve1

novnc-pve: 1.3.0-3

proxmox-backup-client: 2.3.2-1

proxmox-backup-file-restore: 2.3.2-1

proxmox-mini-journalreader: 1.3-1

proxmox-offline-mirror-helper: 0.5.0-1

proxmox-widget-toolkit: 3.5.3

pve-cluster: 7.3-2

pve-container: 4.4-2

pve-docs: 7.3-1

pve-edk2-firmware: 3.20220526-1

pve-firewall: 4.2-7

pve-firmware: 3.6-3

pve-ha-manager: 3.5.1

pve-i18n: 2.8-2

pve-qemu-kvm: 7.1.0-4

pve-xtermjs: 4.16.0-1

qemu-server: 7.3-3

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+2

vncterm: 1.7-1

zfsutils-linux: 2.1.9-pve1

PBS:

proxmox-backup: 2.3-1 (running kernel: 5.15.83-1-pve)

proxmox-backup-server: 2.3.2-1 (running version: 2.3.2)

pve-kernel-helper: 7.3-3

pve-kernel-5.15: 7.3-1

pve-kernel-5.13: 7.1-9

pve-kernel-5.15.83-1-pve: 5.15.83-1

pve-kernel-5.15.74-1-pve: 5.15.74-1

pve-kernel-5.13.19-6-pve: 5.13.19-15

pve-kernel-5.13.19-1-pve: 5.13.19-3

ifupdown2: 3.1.0-1+pmx3

libjs-extjs: 7.0.0-1

proxmox-backup-docs: 2.3.2-1

proxmox-backup-client: 2.3.2-1

proxmox-mini-journalreader: 1.2-1

proxmox-offline-mirror-helper: 0.5.0-1

proxmox-widget-toolkit: 3.5.3

pve-xtermjs: 4.16.0-1

smartmontools: 7.2-pve3

zfsutils-linux: 2.1.9-pve1

The problems:

There are 2 main problems I would say, but they may be related, I'm not sure...

1) We see speeds of only 350MB/s during backups. While currently there is about 5TB of VM storage used, it only takes about 10 minutes to back up what it needs. We would like to increase this if possible, as that would theoretically increase the restore speed as well. [Sounds like this is due to the storage configuration in PBS. We will investigate this later.]

2) The main problem is that during a backup, VMs start to suffer: not very responsive, web servers not loading sites as fast, databases slow, etc.
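To put the numbers from problem 1 in perspective, here is a rough back-of-the-envelope sketch. It assumes decimal units (1 TB = 1,000,000 MB), that the whole 10-minute run reads at the observed 350MB/s (so the per-run figure is an upper bound), and that a full restore would run at the same rate as a backup, which is only a guess on my part.

```python
# Rough arithmetic for the figures quoted in the post.
# Assumptions (not measured): decimal units, restore rate == backup rate.

BACKUP_RATE_MB_S = 350   # observed backup throughput
BACKUP_MINUTES = 10      # observed duration of one backup run
VM_STORAGE_TB = 5        # VM storage currently in use
LINK_GBIT_S = 10         # one 10Gb link per host


def data_moved_gb(rate_mb_s: float, minutes: float) -> float:
    """Upper bound on data read in one run, in GB."""
    return rate_mb_s * minutes * 60 / 1000


def hours_to_transfer(size_tb: float, rate_mb_s: float) -> float:
    """Hours needed to move size_tb at a constant rate_mb_s."""
    return size_tb * 1_000_000 / rate_mb_s / 3600


def link_rate_mb_s(gbit_s: float) -> float:
    """Raw line rate of a link in MB/s, ignoring protocol overhead."""
    return gbit_s * 1000 / 8


per_run_gb = data_moved_gb(BACKUP_RATE_MB_S, BACKUP_MINUTES)
full_restore_h = hours_to_transfer(VM_STORAGE_TB, BACKUP_RATE_MB_S)
link_share = BACKUP_RATE_MB_S / link_rate_mb_s(LINK_GBIT_S)

print(f"Data read per backup run: at most {per_run_gb:.0f} GB")
print(f"Full 5TB transfer at 350MB/s: about {full_restore_h:.1f} h")
print(f"Share of one 10Gb link in use: {link_share:.0%}")
```

So the nightly run only touches a small part of the 5TB, while a full restore at the same rate would take hours, and 350MB/s is well under what a single 10Gb link can carry.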

What I have tried/done so far:

- I tried changing the MTU to 4000, and 9000 (as those are the only 2 jumbo frame sizes supported by the Jetstor), and things went to crap. <75MB/s on backups now, seemed to be recreating a bunch of bitmaps, instead of 10 minutes it was taking over an hour. I did make sure I changed all nodes, PBS, Jetstor, and switch to the higher MTU.

- Also per Proxmox support suggestion, I changed machines over to VirtIO SCSI single from the LSI option I had.

- Checked that I have SR-IOV enabled, per this post. I don't see anything in the BIOS specifically for I/OAT however.
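For the MTU attempt, one check that may be worth repeating is whether full-size frames actually pass end to end without fragmentation. The sketch below only builds the Linux `ping` command for that: `-M do` forbids fragmentation and `-s` sets the ICMP payload size, which has to be the MTU minus the 20-byte IPv4 header and 8-byte ICMP header. The hostname is a placeholder, and IPv4 without header options is assumed.

```python
# Build a "don't fragment" ping command for a given MTU.
# Assumptions: IPv4 without options, Linux iputils ping,
# "pbs.example.lan" is a made-up hostname.

IPV4_HEADER_BYTES = 20
ICMP_HEADER_BYTES = 8


def icmp_payload_for_mtu(mtu: int) -> int:
    """Largest ICMP payload that fits in one unfragmented packet."""
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


def ping_command(host: str, mtu: int, count: int = 3) -> str:
    """Command line that fails if any hop cannot pass the given MTU."""
    payload = icmp_payload_for_mtu(mtu)
    return f"ping -M do -s {payload} -c {count} {host}"


for mtu in (4000, 9000):
    print(ping_command("pbs.example.lan", mtu))
```

If the command reports that the message is too long, or gets no replies, for a size that should fit, then some hop (node bridge, switch port, Jetstor, or PBS) is still on a smaller MTU.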

What I will do/try:

- Try using iothread on the VMs that have the most i/o (Postgres and FileMaker server).

- I can play around with compression and see if anything can be gained there (zstd, lzo, gzip, pigz). Currently the compression setting shows zstd, but it is grayed out and I did not change it manually. The default says it is 0, so I'm not sure which is actually in effect.

Future considerations:

- Changing the network topology to what is recommended.

- Adding some SSD/NVMe cache to PBS in front of the spinning drives.

- Changing node 1 and 2 to UEFI boot.

Questions I have:

- [Answered] Would it be worth separating the backups into multiple jobs to parallelize it?

- [Answered] What is the read/write relative to on a backup? Is it reading from PBS to know what needs to be written?

- [Answered] Is backing up single threaded?

- [Answered] If we separate the corosync network, would it be worth changing the migration method to insecure, like here?

- With dual Xeon E5-2690 v3 in each PVE, and dual Xeon E5-2670 v3 in PBS, are we CPU bottlenecked?

- If so, would a second attempt at upping the MTU be worth it? My understanding is that more data is moved for the same number of packets handled.

- If the backup server can only do 350MB/s, but migrations can saturate the full 10Gb between nodes, what might be causing the slowness while doing backups?

- Has anyone had luck changing the queue size on the NICs, like here?

- How about changing the MTU, like here, or here?
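To make the MTU questions above a bit more concrete, here is a small sketch of what a larger MTU changes on paper at the observed 350MB/s. It assumes plain IPv4 + TCP headers without options (40 bytes) and standard Ethernet framing overhead (38 bytes per frame counting preamble, header, FCS, and inter-frame gap). NIC offloads such as TSO/GRO already cut per-packet CPU cost, so the real-world gain may be smaller than these numbers suggest.

```python
# Packet rate and wire efficiency for different MTUs.
# Assumptions: IPv4 + TCP without options, untagged Ethernet frames.

IP_TCP_HEADER_BYTES = 40    # 20 bytes IPv4 + 20 bytes TCP
ETH_OVERHEAD_BYTES = 38     # preamble 8 + header 14 + FCS 4 + gap 12


def tcp_payload(mtu: int) -> int:
    """TCP payload bytes carried per full-size packet."""
    return mtu - IP_TCP_HEADER_BYTES


def wire_efficiency(mtu: int) -> float:
    """Fraction of bytes on the wire that are TCP payload."""
    return tcp_payload(mtu) / (mtu + ETH_OVERHEAD_BYTES)


def packets_per_second(rate_mb_s: float, mtu: int) -> float:
    """Full-size packets per second needed to carry rate_mb_s of payload."""
    return rate_mb_s * 1_000_000 / tcp_payload(mtu)


for mtu in (1500, 4000, 9000):
    pps = packets_per_second(350, mtu)
    eff = wire_efficiency(mtu)
    print(f"MTU {mtu}: {pps:,.0f} packets/s, {eff:.1%} wire efficiency")
```

Going from 1500 to 9000 cuts the packet count by roughly a factor of six, but only raises wire efficiency by a few percent, so it would mostly matter if per-packet CPU handling is the actual bottleneck.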
