Redhat VirtIO developers would like to coordinate with Proxmox devs re: "[vioscsi] Reset to device ... system unresponsive"

- Thread starter: ylluminate

- Tags: virtio, virtio disk, virtio scsi


Thanks @RoCE-geek.

@benyamin, I'm sorry, but it's still buggy.

VM102, the only VM on its PVE node:

- Drivers back to 0.1.240

- SCSI Single

- Both disks (scsi0 + scsi1) on aio=threads, iothread=1, ssd=1

- EFI disk unchanged (as there are no such options for it)

It hung on the first run, during the initialization phase of the Write for Q32T16.

The picture is from recent tests (SCSI Basic + Native), but the behavior is now the same with the "threads/single/iothread" setup: https://i.postimg.cc/JnR8yCQH/VM102-SCSI-Basic-Native.png

Could you retry with a Q35 + SeaBIOS machine to rule out the EFI disk as a confounding factor?

@RoCE-geek, you might recall in the other thread I mentioned this:

"It is worth mentioning that there was a demonstrable performance difference when using Machine and BIOS setting combinations. This was especially evident when using the OVMF UEFI BIOS. The Q35 Machine type was also better performing than the i440fx. So in the end I used the Q35 running SeaBIOS.

I also disabled memory ballooning (balloon=0) and set the CPU to host."

Also, just above that I discussed setting the PhysicalBreaks registry entry. This is because the driver is unable to determine what this value should be. Setting HKLM\System\CurrentControlSet\Services\vioscsi\Parameters\Device : PhysicalBreaks = 0x20 (32) is a good catch-all for most environments.

I note I'm still using the 0.1.248 driver package.

Thank you for your willingness to explore this further.
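A minimal sketch of applying the suggested combination (Q35 + SeaBIOS, ballooning off, host CPU type) from the PVE host shell. The hypothetical `qm_test_settings` helper only prints the `qm set` command so it can be reviewed first, and VMID 104 is just an example. Note that a Windows guest installed under OVMF (UEFI) will generally not boot once an existing VM is switched to SeaBIOS, so a fresh VM is the practical way to test this; the PhysicalBreaks value is set inside the guest and is not covered here.

```shell
#!/bin/sh
# qm_test_settings VMID  (hypothetical helper)
# Prints, but does not run, a qm command applying Q35 + SeaBIOS,
# no memory ballooning, and the host CPU type to the given VM.
qm_test_settings() {
    printf 'qm set %s --machine q35 --bios seabios --balloon 0 --cpu host\n' "$1"
}

# Example: review the output, then run it on the PVE node.
qm_test_settings 104
```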

Hi @benyamin, I did all you've asked.

Technically it's a new VM (VM104), the only one running:

- SeaBIOS, so no EFI disk

- Balloon=0, CPU=host

- The same Q35-8.1

- SCSI Single

- Both disks (scsi0 + scsi1) on aio=threads, iothread=1, ssd=1

- Created key "Device" under "Computer\HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\vioscsi\Parameters"

- Created value "Device\PhysicalBreaks" as DWORD with "0x20"

Unfortunately, the same buggy behavior...
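To confirm that a VM really runs with the host-side settings listed above before a test, one could check its config on the PVE node. A minimal sketch, assuming the usual `key: value` layout of `/etc/pve/qemu-server/<vmid>.conf`; `check_vm_conf` is a hypothetical helper. It reads only the current config (parsing stops at the first `[snapshot]` section) and does not check the guest-side registry value.

```shell
#!/bin/sh
# check_vm_conf FILE  (hypothetical helper)
# Compares a Proxmox VE VM config with the test setup described above and
# prints one line per mismatch. Returns 0 if everything matches, 1 otherwise.
check_vm_conf() {
    [ -r "$1" ] || { echo "cannot read $1"; return 2; }
    problems=$(awk '
        /^\[/ { exit }                       # ignore snapshot sections
        /^scsihw: / { scsihw = $2 }
        /^balloon: / { balloon = $2 }
        /^cpu: / { cpu = $2 }
        /^bios: / { bios = $2 }
        /^scsi[0-9]+: / {                    # scsi0, scsi1, ... disk lines
            disk = $1; sub(/:$/, "", disk)
            if ($2 !~ /(^|,)aio=threads(,|$)/) print disk ": aio is not threads"
            if ($2 !~ /(^|,)iothread=1(,|$)/) print disk ": iothread is not 1"
        }
        END {
            if (scsihw != "virtio-scsi-single") print "scsihw is not virtio-scsi-single"
            if (balloon != "0") print "ballooning is not disabled"
            if (cpu !~ /^host(,|$)/) print "cpu type is not host"
            # no bios line means the default, SeaBIOS
            if (bios != "" && bios != "seabios") print "bios is not seabios"
        }
    ' "$1")
    if [ -n "$problems" ]; then
        printf '%s\n' "$problems"
        return 1
    fi
    return 0
}

# Example on the PVE node:
# check_vm_conf /etc/pve/qemu-server/104.conf && echo "config matches"
```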

Thanks for giving it a go.

I just gave it another try myself using both the reproducer mentioned in GitHub issue #756 and also with CrystalDiskMark 8.0.5 with your parameters. I was unable to trigger the issue. I will try again later on different hardware and different disk subsystems, but I suspect I will not be able to reproduce the problem.

As an aside, I thought the following PVE CPU usage graph was interesting. For context, the Y-axis max is 40%. The first I/O blip is the VM booting, the CPU blip is the diskspd test, and the second I/O blip is CrystalDiskMark.

Don't forget to set the CDM Settings to "NVMe SSD mode" and the Profile to "Peak Performance".

Here, the VM hangs, crashes, or reboots. There isn't always a Windows event, but during the hang the error is present in journalctl:

`journalctl | grep "kvm: virtio: zero sized buffers are not allowed"`

Workload type: CrystalDiskMark is used here not as a benchmark, but only as a disk stress tool.

For this use case it's quite sufficient, but some important settings are required:

- Use "NVMe SSD" mode (Settings menu) for a higher random load (up to Q32T16)

- For all tests, use disk D only (i.e. scsi1, the "data disk")

- Use the default 5 repetitions (usually sufficient)

- Use a test file size of 8-32 GB. Start with 8 GB; a larger file does not increase the probability of hitting the issue


Hi @_gabriel, exactly, NVMe SSD mode is crucial for the Q32T16 benchmark.

As for the Peak Performance profile, it's just a quicker test, running only SEQ 1MB Q8T1 R/W and RND 4KB Q32T16 R/W.

It also shows IOPS and latency in the bottom lines, but these numbers are always available if you use "File - Save (Text)".

So yes, it can be used as a quicker variant, but I generally use the "Default" profile, and in a highly sensitive environment it's usually enough to run just the Q32T16 R/W test (the left-hand button labeled for each R/W combination). I've also found that running the whole test (i.e. Default) at least once can be a good "warm-up".

It's also worth mentioning that the default 5-second interval is very "gentle" on the storage, so it can be shortened.
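Since CrystalDiskMark drives DiskSpd underneath (as noted further down in the thread), the tests named above can be approximated as plain DiskSpd command lines, which makes it easy to re-run just one of them inside the guest. A rough sketch: `cdm_to_diskspd` is hypothetical and the exact flags CrystalDiskMark passes may differ. The 5-second duration follows the default test length mentioned later in the thread, and `-c8G` the 8 GB starting file size suggested above.

```shell
#!/bin/sh
# cdm_to_diskspd MODE BLOCK QUEUE THREADS RW TARGET  (hypothetical helper)
# MODE: SEQ or RND; RW: R (0% writes) or W (100% writes).
# Prints an approximate DiskSpd command line for one CrystalDiskMark test.
cdm_to_diskspd() {
    mode=$1 block=$2 queue=$3 threads=$4 rw=$5 target=$6
    case "$mode" in
        SEQ) pattern=-s ;;   # sequential access
        RND) pattern=-r ;;   # random access
        *) echo "unknown mode: $mode" >&2; return 1 ;;
    esac
    case "$rw" in
        R) writes=0 ;;
        W) writes=100 ;;
        *) echo "unknown R/W: $rw" >&2; return 1 ;;
    esac
    # printf (not echo) so the backslash in a Windows path is kept as-is
    printf 'diskspd.exe -b%s -d5 -Sh -o%s -t%s %s -W0 -w%s -c8G %s\n' \
        "$block" "$queue" "$threads" "$pattern" "$writes" "$target"
}

# The two tests of the "Peak Performance" profile, read then write
for rw in R W; do
    cdm_to_diskspd SEQ 1M 8 1 "$rw" 'D:\cdm_test.dat'
    cdm_to_diskspd RND 4K 32 16 "$rw" 'D:\cdm_test.dat'
done
```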

The reproducer on GitHub doesn't do any writes, so there's less wear on the NAND.

https://github.com/virtio-win/kvm-guest-drivers-windows/issues/756#issuecomment-1649961285

Good to see more eyes on this issue.


@_gabriel and @RoCE-geek, I've run it with both, but so far no issues at all.

I do note I'm running monolithic RAW images on a Directory backend formatted with ext4 on LVM, created with `qemu-img create/convert -S 0` and copied with `cp --sparse=never`.

I noticed CrystalDiskMark just uses diskspd, and the oddity in the graph above when running the GitHub reproducer is due to the image file creation. If I don't delete the file, the run produces I/O delay consistent with CrystalDiskMark runs. The lack of I/O on file creation indicates that zeroes are being discarded. As @davemcl just mentioned, the reproducer doesn't seem to perform any writes (even with `-Sh` / `-Suw`), but this might be due to underlying discards.

I hope to try some other local storage backends in a few hours. I'm also investigating some oddities with driver registry entries at the same time.

I thought I should also ask: if using ZFS, have you set `zfs_arc_min` and `zfs_arc_max` to limit memory pressure? I don't use ZFS, so this might be a factor. I also set `vm.swappiness` to 0 to avoid memory pressure from excessive paging, and added `options kvm ignore_msrs=1 report_ignored_msrs=0` to `/etc/modprobe.d/kvm.conf`.

Well, the mentioned reproducer is the same as the one in CrystalDiskMark, as the underlying tool is the same: DiskSpd (DiskSpd64).
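The RAW images benyamin describes are deliberately fully allocated: with `qemu-img convert`, `-S 0` disables the scan for zeroed regions so the output is not made sparse, and `cp --sparse=never` keeps the copy fully allocated as well. A minimal sketch for checking that an image really ended up non-sparse, by comparing allocated blocks with the apparent size; it assumes GNU coreutils `stat`, and `is_fully_allocated` is a hypothetical helper. Filesystems that compress zeroes (e.g. ZFS or btrfs with compression) can report fewer allocated blocks even for a fully written file.

```shell
#!/bin/sh
# is_fully_allocated FILE  (hypothetical helper)
# Succeeds if the space allocated to FILE is at least its apparent size,
# i.e. the file has no holes. GNU stat: %s = size in bytes,
# %b = allocated blocks, %B = size in bytes of each block counted by %b.
is_fully_allocated() {
    size=$(stat -c %s "$1") || return 2
    blocks=$(stat -c %b "$1") || return 2
    unit=$(stat -c %B "$1") || return 2
    [ $((blocks * unit)) -ge "$size" ]
}

# Example on the host (path is hypothetical):
# is_fully_allocated /var/lib/vz/images/100/vm-100-disk-0.raw && echo "not sparse"
```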


Since it's all about probability, everyone can make adjustments to trigger it more readily. There's no static sweet spot.

But Q32T16 is a good all-rounder. And of course, it's simple to run a custom test in CDM.

And I've had many tests where the Read was OK, but the Write failed.

As for my VM101, running on ZFS, I have the ZFS ARC min/max set to a 32GB limit on the corresponding PVE node.

But honestly, as everyone can see, there's no big difference in incidence between VM101 on ZFS and the other VMs on LVM with HW RAID.
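For anyone wanting to replicate the host-side tweaks benyamin listed (ZFS ARC limits, `vm.swappiness`, and the `kvm` module options), a minimal sketch that writes them as config files under a chosen root, so the result can be previewed in a scratch directory before using `/`. Assumptions: `write_host_tuning` and the `90-swappiness.conf` file name are hypothetical, the ARC values are in bytes, and existing files with the same names are overwritten. The modprobe options take effect when the modules are next loaded (typically after a reboot; with root on ZFS, Proxmox VE also needs `update-initramfs -u -k all`), while `sysctl --system` reloads the sysctl files.

```shell
#!/bin/sh
# write_host_tuning ROOT ARC_MIN_BYTES ARC_MAX_BYTES  (hypothetical helper)
# Writes the host tweaks discussed above below ROOT (use / on a real host).
# Existing files with these names are overwritten.
write_host_tuning() {
    root=$1 arc_min=$2 arc_max=$3
    mkdir -p "$root/etc/modprobe.d" "$root/etc/sysctl.d" || return 1
    # ZFS ARC limits are zfs module parameters, given in bytes
    printf 'options zfs zfs_arc_min=%s zfs_arc_max=%s\n' "$arc_min" "$arc_max" \
        > "$root/etc/modprobe.d/zfs.conf"
    # Make the kernel strongly prefer dropping page cache over swapping
    printf 'vm.swappiness = 0\n' > "$root/etc/sysctl.d/90-swappiness.conf"
    # Ignore guest accesses to unhandled MSRs and don't log them
    printf 'options kvm ignore_msrs=1 report_ignored_msrs=0\n' \
        > "$root/etc/modprobe.d/kvm.conf"
}

# Preview with min and max both at 32 GiB (one reading of the 32GB limit above)
preview=$(mktemp -d)
write_host_tuning "$preview" $((32 * 1024 * 1024 * 1024)) $((32 * 1024 * 1024 * 1024))
cat "$preview/etc/modprobe.d/zfs.conf"
```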

I should mention I've settled on using the following:

`diskspd.exe -b8K -d400 -Sh -o32 -t8 -r -w0 -c3g t:\random.dat`

`diskspd.exe -b64K -d400 -Sh -o32 -t8 -r -w0 -c3g t:\random.dat`

Based on variations by @fweber and Fabien (GreYWolfBW; not sure of his handle here). Running on WinServer2019.
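In DiskSpd, `-o` is the number of outstanding I/Os per thread per target, so each of the two commands above keeps 32 × 8 = 256 requests in flight. A small sketch of how much data that is; `inflight_bytes` is a hypothetical helper, and this is only arithmetic on the command-line parameters, not a claim about what triggers the fault.

```shell
#!/bin/sh
# inflight_bytes BLOCK_BYTES OUTSTANDING THREADS  (hypothetical helper)
# Bytes in flight for a single DiskSpd target: block size multiplied by
# outstanding I/Os per thread (-o) and by the number of threads (-t).
inflight_bytes() {
    echo $(( $1 * $2 * $3 ))
}

inflight_bytes $((8 * 1024)) 32 8    # -b8K  -o32 -t8: 2097152 bytes (2 MiB)
inflight_bytes $((64 * 1024)) 32 8   # -b64K -o32 -t8: 16777216 bytes (16 MiB)
```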

OK, my bad. I had thought `-w0` meant no warm-up, but that's `-W0`. The `-w` parameter sets the percentage of writes, with `-w` and `-w0` being equivalent. I'm trying some `-w20` runs on spindles, and considering sacrificing a small amount of SSD too... So far I haven't been able to reproduce any faults.

OK everyone, I wasn't able to reproduce the problem with the GitHub reproducer or CrystalDiskMark until I crossed 1 GiB/s using SSDs in a JBOD.


I tested single SATA, a MegaRAID RAID50 with CacheCade, and lower-capacity JBOD SSDs at max speed without fault. However, I'm now testing with a single 7.3GiB/s NVMe SSD and can easily reproduce both the `kvm: Desc next is 3` and the `kvm: virtio: zero sized buffers are not allowed` errors.

I've since come up with a reliable DiskSpd-based reproducer using 4KiB blocks that can reproduce the fault on any storage backend:

`diskspd.exe -b4K -d30 -Sh -o32 -t16 -r -W0 -w0 -ag -c400m <target_disk>:\random_4k.dat`

It's based on the CrystalDiskMark random read, but runs longer (30s instead of 5s). The 4KiB block size pushes I/O past the threshold despite low throughput, reliably produces `kvm: virtio: zero sized buffers are not allowed`, and hangs the VM. This one doesn't seem to hang other VMs, but then I'm not leaving it up for long, preferring to attempt a clean shutdown before doing a hard stop.

I wouldn't recommend prolonged use of CrystalDiskMark with 8GiB files, even in read-only mode. It's definitely burning bits away. 8^(

I'm presently testing thread limits for each storage backend, after which I will delve into the driver, including the effect of registry entries. 8^d

More to follow...
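One way to script the per-backend thread-limit testing mentioned above is to generate the 4KiB reproducer for a range of `-t` values and run the commands one at a time, keeping everything else fixed. A minimal sketch: `sweep_threads` and the chosen thread counts are hypothetical; per the flag mix-up noted earlier, `-W0` means no warm-up and `-w0` means 0% writes.

```shell
#!/bin/sh
# sweep_threads TARGET THREADS...  (hypothetical helper)
# Prints one DiskSpd command per thread count, keeping all other parameters
# of the 4KiB reproducer fixed. -W0 = no warm-up, -w0 = 0% writes.
sweep_threads() {
    target=$1
    shift
    for t in "$@"; do
        # printf keeps the backslash in the Windows path intact
        printf 'diskspd.exe -b4K -d30 -Sh -o32 -t%s -r -W0 -w0 -ag -c400m %s\n' "$t" "$target"
    done
}

# Example: commands for 1 to 16 threads, to be run one at a time in the guest
sweep_threads 'D:\random_4k.dat' 1 2 4 8 16
```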

So I wasn't able to find a reliable number of threads for each storage backend, because repeat tests would crash out. The same went for bandwidth throttling, whether in ops/s or MB/s: repeat runs required ever-decreasing values to remain stable. I couldn't see a memory leak or similar in the guest, but it would appear something is clearly amiss in the QEMU subsystem.
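When repeating runs like this, it helps to count how often each of the two QEMU messages seen in this thread shows up on the host. A minimal sketch that reads journal text from standard input; `count_virtio_errors` is hypothetical, and the patterns are simply the message texts quoted in the thread.

```shell
#!/bin/sh
# count_virtio_errors  (hypothetical helper)
# Reads log text on stdin and counts the two QEMU messages reported here.
count_virtio_errors() {
    awk '
        /kvm: virtio: zero sized buffers are not allowed/ { zero++ }
        /kvm: Desc next is 3/ { desc++ }
        END { printf "zero_sized=%d desc_next=%d\n", zero, desc }
    '
}

# Examples on the PVE host:
# journalctl -b | count_virtio_errors
# journalctl --since "1 hour ago" | count_virtio_errors
```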

I mentioned the

Downgrading the vioscsi driver to 100.85.104.20800 from package virtio-win-0.1.208 resolved it for me too.

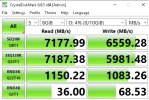

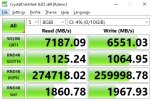

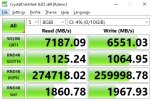

Screens following downgrade for R/W NVMe SSD default and peak performance tests respectively:

@RoCE-geek, thanks for persisting with your hypothesis. I'll have a closer look at the driver in a few hours. I've also asked in the GitHub issue if someone can try the reproducer in RH. Hopefully we can track down where the actual root cause is and get it properly fixed.

I mentioned the

kvm: Desc next is 3 error earlier, and should mention it was produced when running DiskSpd with 64KiB blocks and 16 threads.Downgrading the vioscsi driver to 100.85.104.20800 from package virtio-win-0.1.208 resolved it for me too.

Screens following downgrade for R/W NVMe SSD default and peak performance tests respectively:

@RoCE-geek, thanks for persisting with your hypothesis. I'll have a closer look at the driver in a few hours. I've also asked in the GitHub issue if someone can try the reproducer in RH. Hopefully we can track down where the actual root cause is and get it properly fixed.

I have dropped a reliable reproducer in the GitHub issue:

https://github.com/virtio-win/kvm-guest-drivers-windows/issues/756#issuecomment-2283512999


I have dropped a post re the PhysicalBreaks registry entry tuning:

https://forum.proxmox.com/threads/h...-all-vms-using-virtio-scsi.124298/post-692887


Per my post: https://github.com/virtio-win/kvm-guest-drivers-windows/issues/756#issuecomment-2283768657

EDIT: Probably jumping the gun... I thought the bad driver was released in November 2021, but it was January 2022.

I'm going to step away for a bit... ¯\_( ツ)_/¯


Looks like https://github.com/virtio-win/kvm-guest-drivers-windows/issues/735 is worth looking at...

There have been so many changes in this space that it's hard to untangle them. Hopefully Vadim at RH will have some insights if he can have a look.

It appears peripheral issues have been fixed since virtio-win-0.1.208, but an underlying core element is still producing this problem.

It will be interesting to see if anyone can reproduce the issue on RHEL or another non-Debian platform...


Well everyone, you can see Vadim's (Red Hat) comment here: https://github.com/virtio-win/kvm-guest-drivers-windows/issues/756#issuecomment-2283909521

If, as Vadim suggests, it is "[l]ikely to Nutanix and us (RH) we don't see any problem if the IO transfer exceeds the virtual queue size. Others might have problem with that", I guess the question for the Proxmox / Debian side would be whether or not we can do anything about that...

@fiona and @fweber, would you both be the right staff to ask that question of?
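To make Vadim's point about transfers exceeding the virtual queue size a little more concrete, here is a back-of-the-envelope estimate. It rests on loudly stated assumptions and is not how vioscsi or QEMU actually count: one descriptor per 4 KiB page of data, two extra descriptors for the virtio-scsi request and response headers, page-aligned buffers (an unaligned buffer needs one more), and a queue size of 256 entries (check the VM's real configuration). The virtio specification requires that a driver not create a descriptor chain longer than the device's queue size; limiting segments per request, which the PhysicalBreaks value discussed earlier appears to be for, keeps the estimate small.

```shell
#!/bin/sh
# descriptors_needed TRANSFER_BYTES [PAGE_BYTES]  (hypothetical helper)
# Rough estimate: one descriptor per page-sized data segment (page-aligned
# buffer assumed) plus two for the request and response headers.
descriptors_needed() {
    page=${2:-4096}
    segments=$(( ($1 + page - 1) / page ))   # round up to whole pages
    echo $(( segments + 2 ))
}

queue_size=256   # assumed queue size; check the VM's real configuration
for kib in 4 64 128 1024; do
    n=$(descriptors_needed $((kib * 1024)))
    if [ "$n" -gt "$queue_size" ]; then verdict=exceeds; else verdict="fits in"; fi
    echo "$kib KiB transfer: $n descriptors, $verdict a $queue_size-entry queue"
done
```

Under these assumptions a 128 KiB transfer needs 34 descriptors, while a 1 MiB transfer needs 258 and would not fit in a 256-entry queue.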
