Hello everyone! I'm seeing high IO delay (18% to 30%, sometimes higher) whenever I start a Windows 11 Pro VM on Proxmox VE 7.4-13. I suspect it might be caused by running everything on ZFS RAID 1 storage, but I don't see high IO delay when I run a Linux VM; it only happens when I start the Windows 11 VM. I'm running just one Linux VM and one Windows 11 VM on this hardware, so the load shouldn't be overwhelming anything.
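To get comparable numbers outside the web UI, the IO delay can be sampled from the host shell while the Windows VM boots. This is a minimal sketch that assumes Proxmox's "IO delay" figure roughly tracks the CPU iowait share reported in `/proc/stat`; the function names are hypothetical helpers, not part of any Proxmox tool.

```python
"""Sample the CPU iowait share from /proc/stat (Linux only).

Assumption: Proxmox's "IO delay" graph roughly tracks CPU iowait,
so sampling it around VM start gives comparable numbers.
"""
import os
import time


def parse_cpu_line(line):
    """Return the jiffy counters user..steal from the aggregate 'cpu' line."""
    fields = line.split()
    if fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    # guest/guest_nice are already included in user/nice, so only take 8 fields.
    return [int(v) for v in fields[1:9]]


def iowait_percent(before, after):
    """Percentage of elapsed CPU time spent in iowait between two samples."""
    a = parse_cpu_line(before)
    b = parse_cpu_line(after)
    deltas = [y - x for x, y in zip(a, b)]
    total = sum(deltas)
    if total == 0:
        return 0.0
    return 100.0 * deltas[4] / total  # field index 4 is iowait


def read_cpu_line(path="/proc/stat"):
    # The first line of /proc/stat is the aggregate over all CPUs.
    with open(path) as f:
        return f.readline()


if __name__ == "__main__" and os.path.exists("/proc/stat"):
    prev = read_cpu_line()
    for _ in range(3):
        time.sleep(1)
        cur = read_cpu_line()
        print(f"iowait: {iowait_percent(prev, cur):.1f}%")
        prev = cur
```

Running it once with only the Linux VM up and again while the Windows 11 VM starts would show whether the spike lines up with the VM boot.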

My hardware:

Intel NUC 12 Intel Core i7-1260P 12-Core, 3.4 GHz–4.7 GHz Turbo - NUC12WSHi7

Crucial RAM 64GB Kit (2x32GB) DDR4 3200MHz Laptop Memory - CT2K32G4SFD832A

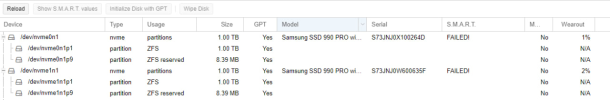

Crucial P2 2TB 3D NAND NVMe PCIe M.2 SSD Up to 2400MB/s - CT2000P2SSD8 (ZFS RAID 1)

Crucial BX500 2TB 3D NAND SATA 2.5-Inch Internal SSD, up to 540MB/s - CT2000BX500SSD1 (ZFS RAID 1)

Could this high IO delay be caused by the different speeds of my two storage devices (NVMe vs. SATA SSD)? If so, is there anything I could change in the ZFS configuration to remedy it, or is there something else I should be looking at?
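One rough way to reason about the mixed-speed question is a back-of-the-envelope model of a two-way mirror. It is a sketch under simplifying assumptions, not ZFS's actual I/O scheduler: every write must land on both members before it completes, while reads can be spread across members. The MB/s values are the vendors' "up to" ratings listed above, which are sequential read figures; sustained writes are usually lower.

```python
"""Back-of-the-envelope model of a two-way mirror with mismatched members.

Simplifying assumptions (not measurements):
- a write completes only after *both* members finish it, so write
  throughput is capped by the slower device;
- reads can be balanced across members, so the optimistic read
  ceiling is the sum of both devices.
"""


def mirror_write_bound(speeds_mb_s):
    # A mirrored write is only done when the slowest member finishes.
    return min(speeds_mb_s)


def mirror_read_bound(speeds_mb_s):
    # Optimistic ceiling: reads perfectly balanced across all members.
    return sum(speeds_mb_s)


drives = {"Crucial P2 (NVMe)": 2400, "Crucial BX500 (SATA)": 540}
speeds = list(drives.values())
print(f"write ceiling ~ {mirror_write_bound(speeds)} MB/s")
print(f"read ceiling  ~ {mirror_read_bound(speeds)} MB/s")
```

Under these assumptions, write-heavy bursts (such as a Windows guest booting and updating) would be paced by the SATA drive rather than the NVMe drive.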