I recently migrated my Windows 10 virtual desktop to Windows 11. However, the system has become very unresponsive and feels really sluggish. There is a lot of delay when clicking or typing.

I have scoured the Proxmox forums and Google, but so far I haven't found a solution. I ran some benchmarks in similar Windows 10 and 11 guests and narrowed it down to the virtual disk speeds. The difference is huge: Windows 11 reaches only about 45-50% of the Windows 10 throughput for sequential I/O, and only about 20-30% for random 4K read/write. I double-checked the configs (pasted below) and I can't see a difference that could explain this. Both virtual drives are stored on a 6-drive RAID-Z2 SSD array with ZFS as the filesystem and 8 GB of ARC.
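To put numbers on that gap, here is a minimal Python sketch that expresses each Windows 11 result as a percentage of the matching Windows 10 result. The throughput figures are copied from the CrystalDiskMark listings in this post; the dictionary layout and the `win11_share` helper are just illustrative names, not part of any tool.

```python
# CrystalDiskMark throughput in MB/s, copied from the result listings in this post.
# Keys are (operation, test); values are (Windows 10, Windows 11).
results = {
    ("read", "SEQ 1MiB Q8T1"): (9729.605, 4430.158),
    ("read", "SEQ 1MiB Q1T1"): (1129.767, 556.173),
    ("read", "RND 4KiB Q32T1"): (129.146, 24.589),
    ("read", "RND 4KiB Q1T1"): (11.247, 2.808),
    ("write", "SEQ 1MiB Q8T1"): (7922.494, 3661.265),
    ("write", "SEQ 1MiB Q1T1"): (925.921, 438.058),
    ("write", "RND 4KiB Q32T1"): (95.687, 22.055),
    ("write", "RND 4KiB Q1T1"): (11.334, 3.353),
}


def win11_share(win10_mbps, win11_mbps):
    """Return Windows 11 throughput as a percentage of Windows 10 throughput."""
    return 100.0 * win11_mbps / win10_mbps


# One percentage per benchmark row.
shares = {key: win11_share(*pair) for key, pair in results.items()}

for (op, test), pct in shares.items():
    print(f"{op:5s} {test:15s} Win11 = {pct:5.1f}% of Win10")
```

With these figures the sequential tests land at roughly 46-49% and the random 4K tests at roughly 19-30%, so the random workloads are hit hardest.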

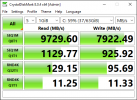

Results Windows 10 (also attached as image)

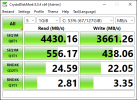

Results Windows 11 (also attached as image)

Results Windows 10 (also attached as image)

Code:

------------------------------------------------------------------------------

CrystalDiskMark 8.0.4 x64 (C) 2007-2021 hiyohiyo

Crystal Dew World: https://crystalmark.info/

------------------------------------------------------------------------------

* MB/s = 1,000,000 bytes/s [SATA/600 = 600,000,000 bytes/s]

* KB = 1000 bytes, KiB = 1024 bytes

[Read]

SEQ 1MiB (Q= 8, T= 1): 9729.605 MB/s [ 9278.9 IOPS] < 763.75 us>

SEQ 1MiB (Q= 1, T= 1): 1129.767 MB/s [ 1077.4 IOPS] < 922.67 us>

RND 4KiB (Q= 32, T= 1): 129.146 MB/s [ 31529.8 IOPS] < 981.60 us>

RND 4KiB (Q= 1, T= 1): 11.247 MB/s [ 2745.8 IOPS] < 360.15 us>

[Write]

SEQ 1MiB (Q= 8, T= 1): 7922.494 MB/s [ 7555.5 IOPS] < 1042.13 us>

SEQ 1MiB (Q= 1, T= 1): 925.921 MB/s [ 883.0 IOPS] < 1126.28 us>

RND 4KiB (Q= 32, T= 1): 95.687 MB/s [ 23361.1 IOPS] < 1360.97 us>

RND 4KiB (Q= 1, T= 1): 11.334 MB/s [ 2767.1 IOPS] < 357.52 us>

Profile: Default

Test: 1 GiB (x5) [C: 59% (37/63GiB)]

Mode: [Admin]

Time: Measure 5 sec / Interval 5 sec

Date: 2022/08/14 17:52:38

OS: Windows 10 Professional [10.0 Build 19044] (x64)

Results Windows 11 (also attached as image)

Code:

------------------------------------------------------------------------------

CrystalDiskMark 8.0.4 x64 (C) 2007-2021 hiyohiyo

Crystal Dew World: https://crystalmark.info/

------------------------------------------------------------------------------

* MB/s = 1,000,000 bytes/s [SATA/600 = 600,000,000 bytes/s]

* KB = 1000 bytes, KiB = 1024 bytes

[Read]

SEQ 1MiB (Q= 8, T= 1): 4430.158 MB/s [ 4224.9 IOPS] < 1626.83 us>

SEQ 1MiB (Q= 1, T= 1): 556.173 MB/s [ 530.4 IOPS] < 1819.13 us>

RND 4KiB (Q= 32, T= 1): 24.589 MB/s [ 6003.2 IOPS] < 5131.38 us>

RND 4KiB (Q= 1, T= 1): 2.808 MB/s [ 685.5 IOPS] < 1392.71 us>

[Write]

SEQ 1MiB (Q= 8, T= 1): 3661.265 MB/s [ 3491.7 IOPS] < 1820.54 us>

SEQ 1MiB (Q= 1, T= 1): 438.058 MB/s [ 417.8 IOPS] < 2326.13 us>

RND 4KiB (Q= 32, T= 1): 22.055 MB/s [ 5384.5 IOPS] < 5851.68 us>

RND 4KiB (Q= 1, T= 1): 3.353 MB/s [ 818.6 IOPS] < 1158.43 us>

Profile: Default

Test: 1 GiB (x5) [C: 53% (67/127GiB)]

Mode: [Admin]

Time: Measure 5 sec / Interval 5 sec

Date: 2022/08/14 17:46:40

OS: Windows 11 Professional [10.0 Build 22000] (x64)

Here is what I tried so far:

- disabling ballooning: didn't help

- changing machine version: no change

- changing video card to VirtIO with more memory: no change

- using 2 or 1 CPU socket: no change

- changing the CPU type of the guests to qemu64 in Proxmox. Afterwards I changed them back to Host. However, the CPU benchmarks returned identical results on Win10 and Win11, so I don't really suspect the CPU.

Windows 10 guest

Code:

agent: 1,fstrim_cloned_disks=1

balloon: 2048

boot: order=sata0;scsi0;net0

cores: 8

cpu: host,flags=+md-clear;+pcid;+spec-ctrl;+ssbd;+aes

hotplug: disk,network,usb,memory,cpu

localtime: 1

machine: q35

memory: 8192

name: jodiWin10

net0: virtio=E6:98:6D:B5:9A:29,bridge=vmbr2,firewall=1,tag=30

numa: 1

onboot: 1

ostype: win10

sata0: none,media=cdrom

scsi0: jplsrv-zfs:vm-153-disk-0,cache=writeback,discard=on,iothread=1,size=64G,ssd=1

scsihw: virtio-scsi-pci

smbios1: uuid=44cc25a3-cf5a-4f54-910d-b169c2779df4

sockets: 1

tablet: 1

vmgenid: 594df829-3de8-42be-a023-3f18aacf4c92

Windows 11 guest

Code:

agent: 1

balloon: 4096

bios: ovmf

boot: order=sata0;scsi0;net0

cores: 8

cpu: host,flags=+md-clear;+pcid;+spec-ctrl;+ssbd;+aes

efidisk0: jplsrv-zfs:vm-192-disk-1,efitype=4m,pre-enrolled-keys=1,size=1M

hotplug: disk,network,usb,memory,cpu

localtime: 0

machine: pc-q35-5.2

memory: 8192

meta: creation-qemu=6.1.0,ctime=1644500696

name: PCJOEP-WIN11

net0: virtio=B2:21:00:20:63:E2,bridge=vmbr2,firewall=1,tag=20

numa: 1

onboot: 1

ostype: win11

sata0: none,media=cdrom

scsi0: jplsrv-zfs:vm-192-disk-2,cache=writeback,discard=on,iothread=1,size=128G,ssd=1

scsihw: virtio-scsi-pci

smbios1: uuid=5c5c3f84-afca-4512-8027-c7e0dc625205

sockets: 1

tablet: 1

tpmstate0: jplsrv-zfs:vm-192-disk-0,size=4M,version=v2.0

vmgenid: 573547b1-6091-4980-bd86-94fe768c1abc
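To compare the two configs key by key rather than by eye, a small Python sketch like the following can help. It embeds the two configs from this post as strings and prints every key whose value differs. The `IGNORED` set (per-VM identity fields such as name, MAC address and UUIDs) is my own assumption about what is irrelevant here, and `parse_conf` / `diff_conf` are hypothetical helper names.

```python
WIN10_CONF = """\
agent: 1,fstrim_cloned_disks=1
balloon: 2048
boot: order=sata0;scsi0;net0
cores: 8
cpu: host,flags=+md-clear;+pcid;+spec-ctrl;+ssbd;+aes
hotplug: disk,network,usb,memory,cpu
localtime: 1
machine: q35
memory: 8192
name: jodiWin10
net0: virtio=E6:98:6D:B5:9A:29,bridge=vmbr2,firewall=1,tag=30
numa: 1
onboot: 1
ostype: win10
sata0: none,media=cdrom
scsi0: jplsrv-zfs:vm-153-disk-0,cache=writeback,discard=on,iothread=1,size=64G,ssd=1
scsihw: virtio-scsi-pci
smbios1: uuid=44cc25a3-cf5a-4f54-910d-b169c2779df4
sockets: 1
tablet: 1
vmgenid: 594df829-3de8-42be-a023-3f18aacf4c92
"""

WIN11_CONF = """\
agent: 1
balloon: 4096
bios: ovmf
boot: order=sata0;scsi0;net0
cores: 8
cpu: host,flags=+md-clear;+pcid;+spec-ctrl;+ssbd;+aes
efidisk0: jplsrv-zfs:vm-192-disk-1,efitype=4m,pre-enrolled-keys=1,size=1M
hotplug: disk,network,usb,memory,cpu
localtime: 0
machine: pc-q35-5.2
memory: 8192
meta: creation-qemu=6.1.0,ctime=1644500696
name: PCJOEP-WIN11
net0: virtio=B2:21:00:20:63:E2,bridge=vmbr2,firewall=1,tag=20
numa: 1
onboot: 1
ostype: win11
sata0: none,media=cdrom
scsi0: jplsrv-zfs:vm-192-disk-2,cache=writeback,discard=on,iothread=1,size=128G,ssd=1
scsihw: virtio-scsi-pci
smbios1: uuid=5c5c3f84-afca-4512-8027-c7e0dc625205
sockets: 1
tablet: 1
tpmstate0: jplsrv-zfs:vm-192-disk-0,size=4M,version=v2.0
vmgenid: 573547b1-6091-4980-bd86-94fe768c1abc
"""

# Assumption: these keys only identify the VM and cannot affect disk speed.
IGNORED = {"name", "net0", "smbios1", "vmgenid", "meta"}


def parse_conf(text):
    """Parse 'key: value' lines into a dict, skipping blank lines."""
    conf = {}
    for line in text.splitlines():
        line = line.strip()
        if line:
            key, _, value = line.partition(": ")
            conf[key] = value
    return conf


def diff_conf(a, b, ignored=IGNORED):
    """Return (key, value_a, value_b) for every non-ignored key that differs."""
    rows = []
    for key in sorted(set(a) | set(b)):
        if key not in ignored and a.get(key) != b.get(key):
            rows.append((key, a.get(key, "<unset>"), b.get(key, "<unset>")))
    return rows


differences = diff_conf(parse_conf(WIN10_CONF), parse_conf(WIN11_CONF))
for key, win10_value, win11_value in differences:
    print(f"{key}:\n  win10: {win10_value}\n  win11: {win11_value}")
```

For these two configs the differing keys are agent, balloon, bios, efidisk0, localtime, machine, ostype, scsi0 and tpmstate0. The scsi0 lines differ only in volume name and size; the cache, discard, iothread and ssd options are identical, as is scsihw.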