Hey guys,

thanks for the release of Proxmox Backup Server!

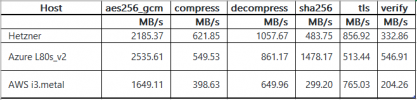

PBS looks very promising for what our company needs:

- Incremental backups of our VMs, e.g. every 15 minutes

- Flexible retention cycle, e.g. keep last 8, keep 22 hours, ...

- One pushing PVE client, several backup servers pull via sync jobs

We are currently evaluating hardware for a backup server.

- The performance of the incremental backups is fine, even with HDDs, no problems here

- The restore time is too high: on our current host at Hetzner, restoring our bigger VMs (about 750 GB) takes 1h 17m to 1h 21m at an average speed of 156-165 MB/s.

- I am trying to lower the restore time to about 15-45 minutes, which requires restore speeds of roughly 300-800 MB/s.
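
For reference, the relation between VM size, restore time and average speed can be written down as a small Rust sketch. The function names `required_mb_per_s` and `restore_minutes` are made up for this illustration, and decimal units (1 GB = 1000 MB) are assumed.

```rust
/// Average throughput in MB/s needed to restore `size_gb` gigabytes
/// within `minutes`. Assumes decimal units (1 GB = 1000 MB).
fn required_mb_per_s(size_gb: f64, minutes: f64) -> f64 {
    size_gb * 1000.0 / (minutes * 60.0)
}

/// Restore duration in minutes for `size_gb` gigabytes at an average
/// throughput of `mb_per_s`. Same decimal-unit assumption as above.
fn restore_minutes(size_gb: f64, mb_per_s: f64) -> f64 {
    size_gb * 1000.0 / mb_per_s / 60.0
}
```

For a 750 GB VM, `required_mb_per_s(750.0, 15.0)` gives about 833 MB/s and `required_mb_per_s(750.0, 45.0)` about 278 MB/s, which is where the rough 300-800 MB/s target comes from. `restore_minutes(750.0, 160.0)` gives about 78 minutes, in line with the restore times we currently see.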

Host: a dedicated host on Hetzner

CPU: Intel(R) Xeon(R) CPU E5-1650 v3 (6 core, 12 threads)

Memory: 256 GB

- Source (PBS chunks): a 2 TB HDD, Target: the same HDD. Speed: 35 MB/s

- Source (PBS chunks): a 2 TB HDD, Target: SSD TOSHIBA_KHK61RSE1T92. Speed: 139 MB/s

- Source (PBS chunks): SSD TOSHIBA_THNSN81Q92CSE, Target: SSD TOSHIBA_KHK61RSE1T92. Speed: 156 MB/s - 165 MB/s

Host: AWS EC2 i3.metal, dedicated instance

CPU: 80 x AMD EPYC 7551 32-Core Processor (2 Sockets)

Memory: 512 GB

- Source (PBS chunks): mdadm RAID0 array of 2x 2TB NVMe, target: another RAID0 array of 2x 2TB NVMe. Speed: 140 MB/s

- Source (PBS chunks): mdadm RAID0 array of 8x 2TB NVMe, target: itself. Speed: 142 MB/s

CPU: 72 x Intel(R) Xeon(R) CPU E5-2686 v4 @ 2.30GHz (2 Sockets)

Memory: 640 GB

- Source (PBS chunks): 2TB NVMe, target: another 2TB NVMe. Speed: 204 MB/s

- Source (PBS chunks): mdadm RAID0 array of 2x 2TB NVMe, target: another RAID0 array of 2x 2TB NVMe. Speed: 229 MB/s

- Source (PBS chunks): mdadm RAID0 array of 5x 2TB NVMe, target: another RAID0 array of 5x 2TB NVMe. Speed: 208 MB/s

- The host was capable of downloading the initial VM backup at 1200-2200 MB/s (10-18 Gbit/s), and the fio numbers speak for themselves (see attachment)

Let's take a look at htop during the restore process:

The reason for the low restore speed is obvious:

- `/usr/bin/pbs-restore` only keeps one thread active, which results in nearly 100% utilization of one vCore

- The single CPU thread is not fast enough to fill the I/O queues of my NVMes

- With SSDs and NVMes, the single-thread-CPU-performance becomes the bottleneck during the restore.

`src/restore.rs`:

- In the constructor `pub fn new(setup: BackupSetup)`, a `proxmox-restore-worker` is initialized with `builder.max_threads(6)` and `builder.core_threads(4)`

- but the restore workers don't work together when restoring a VM: in function `pub async fn restore_image` I see no parallelism in the loop `for pos in 0..index.index_count()`

Could you imagine parallelizing pbs-restore for the restore of a single PBS VM backup, so that we can take advantage of modern SSDs and NVMe drives?
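
To illustrate the kind of parallelism meant here, below is a minimal sketch using only the Rust standard library. It is not PBS code: `load_chunk`, `restore_sequential` and `restore_parallel` are hypothetical names, the "image" is just an in-memory buffer, and `load_chunk` only stands in for fetching, verifying and decompressing one chunk. The real restore path is async and writes to a block device, so an actual implementation would probably look quite different.

```rust
use std::thread;

/// Hypothetical stand-in for the per-chunk work (fetch, verify, decompress).
/// Here it just fills the output slice with a pattern derived from `pos`.
fn load_chunk(pos: usize, out: &mut [u8]) {
    for (i, byte) in out.iter_mut().enumerate() {
        *byte = (pos.wrapping_mul(31).wrapping_add(i) % 251) as u8;
    }
}

/// Sequential baseline: one chunk after the other on a single thread,
/// comparable in shape to a plain `for pos in 0..count` loop.
/// `chunk_size` must be greater than zero.
fn restore_sequential(image: &mut [u8], chunk_size: usize) {
    for (pos, out) in image.chunks_mut(chunk_size).enumerate() {
        load_chunk(pos, out);
    }
}

/// Parallel variant: the chunks are dealt round-robin to `workers` threads.
/// Each thread owns disjoint slices of the image, so no locking is needed.
/// `chunk_size` must be greater than zero.
fn restore_parallel(image: &mut [u8], chunk_size: usize, workers: usize) {
    let workers = workers.max(1);
    let mut buckets: Vec<Vec<(usize, &mut [u8])>> =
        (0..workers).map(|_| Vec::new()).collect();
    for (pos, out) in image.chunks_mut(chunk_size).enumerate() {
        buckets[pos % workers].push((pos, out));
    }
    // Scoped threads may borrow from `image`; all of them are joined
    // before `thread::scope` returns.
    thread::scope(|s| {
        for bucket in buckets {
            s.spawn(move || {
                for (pos, out) in bucket {
                    load_chunk(pos, out);
                }
            });
        }
    });
}
```

Both functions produce the same image. The parallel one only spreads the CPU-bound per-chunk work over several cores, which is the part that seems to limit the restore on fast SSDs and NVMes.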
