Hey,

Since nobody answered in the other thread and this issue was basically ignored, I'm opening a new one.

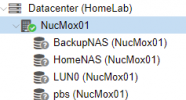

With the new kernel PVE-5.15.30, my iSCSI connections break and can't be revived. Only with the "old" 5.13.19-6 kernel do my iSCSI connections work.

I currently have 3 Intel NUCs running in an HA cluster with 1 Gbit NICs.

I'm not using multipath (could that be the problem?).

Kind regards,
