fio --name=randrw --ioengine=libaio --direct=1 --sync=1 --rw=randrw --bs=3M --numjobs=1 --iodepth=1 --size=20G --runtime=120 --time_based --rwmixread=75

randrw: (g=0): rw=randrw, bs=(R) 3072KiB-3072KiB, (W) 3072KiB-3072KiB, (T) 3072KiB-3072KiB, ioengine=libaio, iodepth=1

fio-3.33

Starting 1 process

randrw: Laying out IO file (1 file / 20480MiB)

Jobs: 1 (f=1): [m(1)][100.0%][r=51.0MiB/s,w=12.0MiB/s][r=17,w=4 IOPS][eta 00m:00s]

randrw: (groupid=0, jobs=1): err= 0: pid=17888: Wed Jun 25 23:32:10 2025

read: IOPS=13, BW=40.3MiB/s (42.3MB/s)(4836MiB/120012msec)

slat (usec): min=96, max=559, avg=124.43, stdev=25.73

clat (msec): min=39, max=3352, avg=59.77, stdev=138.94

lat (msec): min=39, max=3352, avg=59.89, stdev=138.94

clat percentiles (msec):

| 1.00th=[ 42], 5.00th=[ 44], 10.00th=[ 44], 20.00th=[ 45],

| 30.00th=[ 46], 40.00th=[ 47], 50.00th=[ 47], 60.00th=[ 48],

| 70.00th=[ 50], 80.00th=[ 51], 90.00th=[ 55], 95.00th=[ 59],

| 99.00th=[ 169], 99.50th=[ 793], 99.90th=[ 2265], 99.95th=[ 3339],

| 99.99th=[ 3339]

bw ( KiB/s): min= 6144, max=73728, per=100.00%, avg=46079.65, stdev=12051.62, samples=214

iops : min= 2, max= 24, avg=15.00, stdev= 3.93, samples=214

write: IOPS=4, BW=13.7MiB/s (14.4MB/s)(1650MiB/120012msec); 0 zone resets

slat (usec): min=1642, max=2291, avg=1833.89, stdev=102.56

clat (msec): min=34, max=117, avg=38.54, stdev= 6.01

lat (msec): min=36, max=118, avg=40.37, stdev= 6.00

clat percentiles (msec):

| 1.00th=[ 36], 5.00th=[ 36], 10.00th=[ 36], 20.00th=[ 37],

| 30.00th=[ 37], 40.00th=[ 38], 50.00th=[ 38], 60.00th=[ 39],

| 70.00th=[ 39], 80.00th=[ 40], 90.00th=[ 41], 95.00th=[ 43],

| 99.00th=[ 58], 99.50th=[ 99], 99.90th=[ 117], 99.95th=[ 117],

| 99.99th=[ 117]

bw ( KiB/s): min= 6144, max=43008, per=100.00%, avg=16834.25, stdev=8036.03, samples=200

iops : min= 2, max= 14, avg= 5.47, stdev= 2.61, samples=200

lat (msec) : 50=82.65%, 100=15.63%, 250=1.11%, 500=0.14%, 750=0.05%

lat (msec) : 1000=0.09%, 2000=0.19%, >=2000=0.14%

cpu : usr=0.15%, sys=0.93%, ctx=2177, majf=0, minf=10

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=1612,550,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: bw=40.3MiB/s (42.3MB/s), 40.3MiB/s-40.3MiB/s (42.3MB/s-42.3MB/s), io=4836MiB (5071MB), run=120012-120012msec

WRITE: bw=13.7MiB/s (14.4MB/s), 13.7MiB/s-13.7MiB/s (14.4MB/s-14.4MB/s), io=1650MiB (1730MB), run=120012-120012msec
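As a quick cross-check of the summary above, bandwidth should equal the number of issued I/Os times the block size, divided by the runtime. A minimal sketch using the `issued rwts`, `bs=3M` and `run=` values from this run (`bw_mib_s` is just a helper name chosen here):

```shell
# Bandwidth in MiB/s from issued I/O count, block size (MiB) and runtime (ms).
bw_mib_s() {
    awk -v n="$1" -v bs="$2" -v ms="$3" \
        'BEGIN { printf "%.1f\n", n * bs / (ms / 1000) }'
}

bw_mib_s 1612 3 120012   # reads:  1612 I/Os of 3 MiB in 120012 ms
bw_mib_s 550 3 120012    # writes:  550 I/Os of 3 MiB in 120012 ms
```

Both results agree with the READ and WRITE lines reported by fio.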

Notes:

Google Drive uses the local disk as a cache.

My drive's used space grows, then slowly shrinks after the file is sent online.

The machine running Google Drive had 4 CPU cores, at about 40% usage.

Ethernet traffic was above 900 Mbps, so the transfer ran at the speed of the switch and network card.

It is important that the local disk is large.

Windows Server 2019 Essentials, version 1809.

Google One - 30 TB.

When the computer restarts, the share is lost and you must log in again. If you use the Administrator account, add it to the share permissions and use it in the credentials file when mounting on Linux. This avoids a permissions error on Linux after the SMB share is mounted.
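For the credentials file used by the mount command, something like the following could work. It is only a sketch: `write_smb_credentials` is a helper name made up here, and the user name and password in the example are placeholders. The `username=` / `password=` lines are the format that mount.cifs reads from a `credentials=` file.

```shell
# Write a mount.cifs credentials file readable only by its owner.
# Usage: write_smb_credentials FILE USERNAME PASSWORD
write_smb_credentials() {
    cred_file=$1
    mkdir -p "$(dirname "$cred_file")"
    # Create the file with a restrictive umask so the password is never world-readable.
    ( umask 077 && printf 'username=%s\npassword=%s\n' "$2" "$3" > "$cred_file" )
    chmod 600 "$cred_file"
}

# Example (run as root; placeholder values, use the account added to the share):
# write_smb_credentials /etc/smbcredentials/backup.cred Administrator 'CHANGE_ME'
```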

Command to mount:

mount -t cifs "//192.168.xxx.xx/g" /mnt/smb/gdrive_backup -o credentials=/etc/smbcredentials/backup.cred,domain=WORKGROUP,iocharset=utf8,vers=3.0,noserverino

Second day of tests.

mount -t cifs "//192.168.xxx.xx/g" /mnt/smb/gdrive_backup -o credentials=/etc/smbcredentials/backup.cred,domain=WORKGROUP,iocharset=utf8,vers=3.11,noserverino
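To confirm which SMB version the mount is really using before running the test, the mount options can be read back. A minimal sketch, assuming the kernel lists the dialect as a `vers=` option in /proc/mounts (SMB 3.11 usually appears there as `vers=3.1.1`); `smb_vers_of` is a helper name made up here:

```shell
# Print the vers= option of a CIFS mount point.
# Usage: smb_vers_of MOUNTPOINT [MOUNTS_FILE]   (MOUNTS_FILE defaults to /proc/mounts)
smb_vers_of() {
    awk -v mp="$1" '
        $2 == mp && $3 == "cifs" {
            n = split($4, opts, ",")
            for (i = 1; i <= n; i++)
                if (opts[i] ~ /^vers=/) print substr(opts[i], 6)
        }' "${2:-/proc/mounts}"
}

# Example:
# smb_vers_of /mnt/smb/gdrive_backup
```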

fio --name=randrw --ioengine=libaio --direct=1 --sync=1 --rw=randrw --bs=3M --numjobs=1 --iodepth=1 --size=20G --runtime=120 --time_based --rwmixread=75

randrw: (g=0): rw=randrw, bs=(R) 3072KiB-3072KiB, (W) 3072KiB-3072KiB, (T) 3072KiB-3072KiB, ioengine=libaio, iodepth=1

fio-3.33

Starting 1 process

randrw: Laying out IO file (1 file / 20480MiB)

Jobs: 1 (f=1): [m(1)][100.0%][r=27.0MiB/s,w=9225KiB/s][r=9,w=3 IOPS][eta 00m:00s]

randrw: (groupid=0, jobs=1): err= 0: pid=19503: Thu Jun 26 09:35:25 2025

read: IOPS=13, BW=41.6MiB/s (43.6MB/s)(4992MiB/120023msec)

slat (usec): min=93, max=693, avg=132.46, stdev=33.65

clat (msec): min=36, max=2585, avg=57.31, stdev=134.86

lat (msec): min=36, max=2585, avg=57.44, stdev=134.86

clat percentiles (msec):

| 1.00th=[ 37], 5.00th=[ 40], 10.00th=[ 42], 20.00th=[ 43],

| 30.00th=[ 44], 40.00th=[ 45], 50.00th=[ 46], 60.00th=[ 46],

| 70.00th=[ 47], 80.00th=[ 49], 90.00th=[ 52], 95.00th=[ 56],

| 99.00th=[ 211], 99.50th=[ 860], 99.90th=[ 2400], 99.95th=[ 2601],

| 99.99th=[ 2601]

bw ( KiB/s): min= 6144, max=73728, per=100.00%, avg=47853.97, stdev=13825.56, samples=213

iops : min= 2, max= 24, avg=15.58, stdev= 4.50, samples=213

write: IOPS=4, BW=14.1MiB/s (14.8MB/s)(1698MiB/120023msec); 0 zone resets

slat (usec): min=1649, max=3049, avg=1892.63, stdev=160.48

clat (msec): min=34, max=245, avg=38.73, stdev=12.96

lat (msec): min=36, max=247, avg=40.62, stdev=12.95

clat percentiles (msec):

| 1.00th=[ 35], 5.00th=[ 36], 10.00th=[ 36], 20.00th=[ 36],

| 30.00th=[ 36], 40.00th=[ 37], 50.00th=[ 37], 60.00th=[ 39],

| 70.00th=[ 40], 80.00th=[ 40], 90.00th=[ 41], 95.00th=[ 42],

| 99.00th=[ 67], 99.50th=[ 88], 99.90th=[ 247], 99.95th=[ 247],

| 99.99th=[ 247]

bw ( KiB/s): min= 6144, max=43008, per=100.00%, avg=17093.70, stdev=8843.35, samples=202

iops : min= 2, max= 14, avg= 5.56, stdev= 2.88, samples=202

lat (msec) : 50=89.96%, 100=8.74%, 250=0.67%, 500=0.13%, 750=0.04%

lat (msec) : 1000=0.13%, 2000=0.13%, >=2000=0.18%

cpu : usr=0.16%, sys=1.02%, ctx=2245, majf=0, minf=10

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=1664,566,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: bw=41.6MiB/s (43.6MB/s), 41.6MiB/s-41.6MiB/s (43.6MB/s-43.6MB/s), io=4992MiB (5234MB), run=120023-120023msec

WRITE: bw=14.1MiB/s (14.8MB/s), 14.1MiB/s-14.1MiB/s (14.8MB/s-14.8MB/s), io=1698MiB (1780MB), run=120023-120023msec

There is a small improvement in performance with SMB protocol 3.11: read went from 40.3 to 41.6 MiB/s and write from 13.7 to 14.1 MiB/s.
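To put a number on the difference between the two runs, the percent change can be computed from the READ and WRITE bandwidth lines of each test. A small sketch (`pct_change` is a helper name chosen here):

```shell
# Percent change from an old value to a new value, one decimal place.
pct_change() {
    awk -v old="$1" -v new="$2" \
        'BEGIN { printf "%.1f\n", (new - old) / old * 100 }'
}

pct_change 40.3 41.6   # read bandwidth in MiB/s, vers=3.0 vs vers=3.11
pct_change 13.7 14.1   # write bandwidth in MiB/s, vers=3.0 vs vers=3.11
```

That is about 3% in both directions. With only one 120 second run per setting, it may be within the normal variation between runs.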

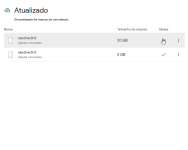

The attached image explains how the sync works.

Google Drive uses the local disk as a cache, so you will get the best results if the VM is stored on SSD or NVMe.

The synchronization is asynchronous, so files are uploaded after they are stored on the local disk. RAM usage is minimal.

I will now test with PBS, comparing the same backups on local storage (SSD) and on the remote storage, with tasks like verify, prune, etc.