Hello,

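For reference, I believe this started after upgrading to Proxmox 7 (I also replaced my PSU at the time, but I'm not sure whether that could cause the issue). Before the upgrade, my two HDDs took a few minutes to activate on boot. Now they don't activate at all, and I have to manually deactivate the thin pool volumes with `lvchange -an HDD-#/HDD-#_tmeta` and `lvchange -an HDD-#/HDD-#_tdata` for each drive, then activate them with `vgchange -ay`.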
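If the manual workaround has to be repeated after every boot, it could be wrapped in a small script. The workaround is to deactivate each pool's `_tmeta` and `_tdata` volumes with `lvchange -an`, then run `vgchange -ay`. Below is a minimal sketch, not a tested fix. It assumes each volume group `HDD-N` holds a thin pool also named `HDD-N`, matching the `HDD-#/HDD-#_tmeta` pattern. The names `HDD-1`/`HDD-2`, the `VGS` and `DRY_RUN` variables and the `run`/`main` helpers are all hypothetical. By default it only prints the commands.

```shell
#!/bin/sh
# Hypothetical helper for the manual workaround: assumes each VG "HDD-N"
# contains a thin pool also named "HDD-N". Names in VGS are placeholders.
# Dry run by default (prints commands); run as root with DRY_RUN=0 to execute.
set -eu

VGS="${VGS:-HDD-1 HDD-2}"   # hypothetical VG names; replace with your own
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

main() {
    for vg in $VGS; do
        # Deactivate the thin pool's metadata and data sub-volumes.
        run lvchange -an "$vg/${vg}_tmeta"
        run lvchange -an "$vg/${vg}_tdata"
    done
    # Activate all logical volumes in all volume groups, including the thin pools.
    run vgchange -ay
}

main
```

For example, `VGS="HDD-1 HDD-2" DRY_RUN=0 sh reactivate-hdds.sh` (hypothetical filename) would run the same commands as above for both drives.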

I wrote up what I do on my blog:

Proxmox 7 - Activating LVM volumes after failure to attach on boot

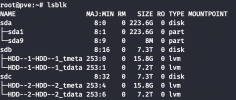

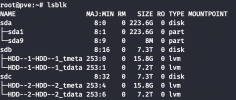

For reference, here is what lsblk shows:

Boot verbose messages:

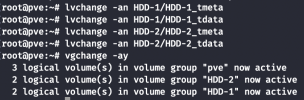

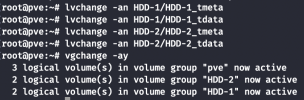

After manually activating:

If anyone has any ideas on what my next steps should be, I would greatly appreciate it.

