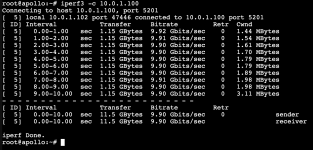

I am running a three-node PVE cluster on 6.8.12-4: a Dell R730XD and two R630s. Two of the boxes have the Intel(R) 10GbE 4P X710 rNDC, and the other has the BRCM 10G/GbE 2+2P 57800 rNDC. iperf3 runs great between my NAS and the PVE hosts (and their containers), but not from the VMs running on those hosts. The throughput is good, but as you can see, the retries are through the roof!

Any ideas???

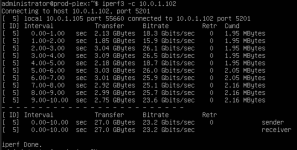

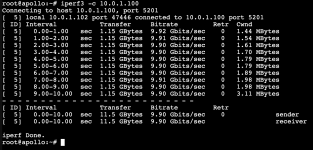

Performance between the NAS (10.0.1.100) and a PVE host (apollo - 10.0.1.102) is great.

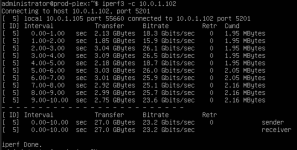

Performance between the PVE host (apollo - 10.0.1.102) and a VM (prod-plex - 10.0.1.105) is great. That VM is on the apollo PVE host.

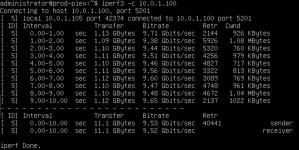

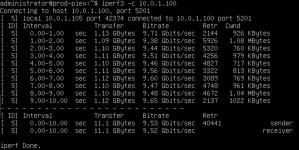

Performance between a VM (prod-plex - 10.0.1.105) and the NAS (10.0.1.100) is poor. The NAS is a Dell R720XD with an Intel(R) 10GbE 4P X710 rNDC.

This is obviously not a switching issue as the PVE hosts and my NAS are communicating perfectly.
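
To compare the three paths by numbers rather than by screenshots, here is a minimal sketch that pulls throughput and retransmit counts out of iperf3 reports. It assumes each test was run from the sending side with `--json`, and that the report carries `end.sum_sent.bits_per_second` and `end.sum_sent.retransmits` (which TCP tests on Linux normally include). The function names and the file names in the usage note are made up for illustration.

```python
import json


def summarize_iperf3(report_text):
    """Return (gbits_per_second, retransmits) from one iperf3 --json report.

    Assumes a TCP test; 'retransmits' may be missing on some platforms,
    in which case it is reported as 0.
    """
    report = json.loads(report_text)
    sent = report["end"]["sum_sent"]
    gbps = sent["bits_per_second"] / 1e9
    retransmits = sent.get("retransmits", 0)
    return gbps, retransmits


def format_row(label, report_text):
    """Format one path (e.g. 'VM -> NAS') as a single comparison line."""
    gbps, retransmits = summarize_iperf3(report_text)
    return f"{label:<12} {gbps:6.2f} Gbit/s  {retransmits:6d} retransmits"


def compare_paths(reports):
    """Take {label: json_text} and return one formatted line per path."""
    return [format_row(label, text) for label, text in reports.items()]
```

For example, after running `iperf3 -c 10.0.1.100 -t 10 --json > vm-to-nas.json` on the VM (and the same on the host into its own file), read each file and pass the contents to `compare_paths({"VM -> NAS": vm_text, "host -> NAS": host_text})` to get the paths side by side.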

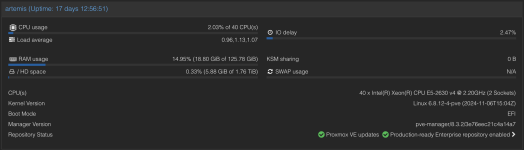

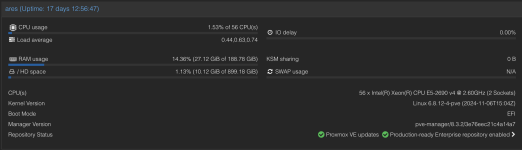

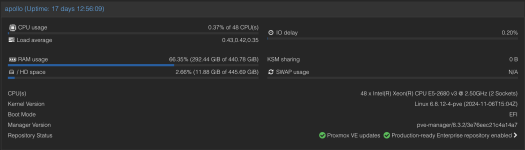

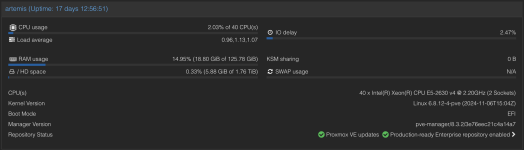

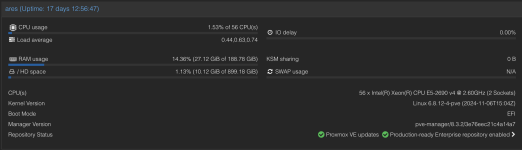

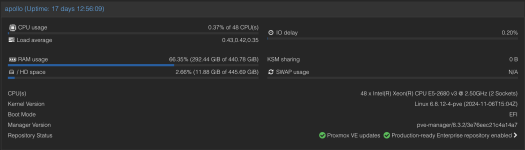

Please see the usage on the PVE hosts.
