Hi - firstly, I have looked for a guide on how best to do this, but haven't found anything that I can make sense of, mostly because the majority appear (understandably) to be targeted at enterprise environments. If I've missed one, I would be very grateful for a link, and will happily accept being called a plonker with poor Google-fu. I'm looking for help with a home lab setup. My storage setup is below; all SATA SSDs are enterprise grade (Intel S3700). All my workloads (currently) will run on a single node's resources.

3-node cluster made up of micro PCs (Fujitsu Esprimos).

- Node 1 - 400GB SATA & 100GB SATA; 32GB RAM

- Node 2 - 16GB Optane NVMe and 400GB SATA; 32GB RAM

- Node 3 - 200GB SATA and 500GB spinning platter HDD; 16GB RAM.

- NAS - WD-EX4100/4bay running RAID 5 with hot spare on WD gold disks.

(I know identical hardware would be easier/better, but this way I get to learn about far more scenarios... and it was what I could find cheaply on eBay.)

I run daily backups of all VMs to the NAS. An RPO (recovery point objective) of 24 hours is absolutely fine for me; I'm aware dual disks per node would be 'better', but I am fine with single-disk risk and a 24-hour RPO. I have this running with LVM storage, and it's great, but there's lots of chat about ZFS and how it's "better", so I want to play, but I'm totally new to ZFS. I'm therefore looking for a guide on how to set it up, covering:

1. Optimal partition sizes? I don't really understand how ZFS uses disk partitions.

2. How do I add the second 400GB SATA disk and expand the rpool/data dataset?

3. How do I see the current allocations across:

```
rpool
rpool/ROOT
rpool/ROOT/pve-1
rpool/data
```

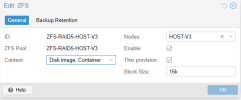

4. Optimal block size? Granted, this is workload dependent, and I understand the default of 128KB is generally recommended, but given I expect mostly logging, sensor data, and small writes to Splunk/InfluxDB/syslog servers etc., I was going to go with 32KB?

5. I think the scenario I am aiming for is one zpool per node, with 3 datasets (ROOT, ROOT/pve-1 and data), with data spread across 2 disks (no replication), and each node having the same basic config (ashift=12, block size, pool, vdev and dataset names). For example, Node 1 would have around 480GB for data, with the rest for the OS etc.
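To make the sizing in point 5 concrete, here is the rough arithmetic I'm working from, as a small Python sketch. It assumes both disks in a node end up striped in one pool with no redundancy, and it uses the nominal disk sizes from the list above; the function names are just mine for illustration, and real usable space will be somewhat lower once ZFS metadata and reserved space are taken off.

```python
# Back-of-envelope capacity for a two-disk striped pool (no redundancy).
# Sizes are the nominal GB figures from the node list above; actual usable
# space will be lower (GB vs GiB, ZFS metadata, reserved space).

NODES_GB = {
    "node1": [400, 100],
    "node2": [16, 400],
    "node3": [200, 500],
}


def raw_stripe_capacity_gb(disks_gb):
    """In a plain stripe, the raw capacities of the disks simply add up."""
    return sum(disks_gb)


def os_share_gb(disks_gb, data_gb):
    """Space left over for the OS datasets if data_gb is set aside for data."""
    left = raw_stripe_capacity_gb(disks_gb) - data_gb
    if left < 0:
        raise ValueError("data allocation exceeds raw pool capacity")
    return left


if __name__ == "__main__":
    for name, disks in NODES_GB.items():
        print(name, raw_stripe_capacity_gb(disks), "GB raw")
    print("node1 left for OS:", os_share_gb(NODES_GB["node1"], 480), "GB")
```

So on Node 1, setting aside 480GB for data would leave only a small nominal remainder for the OS, which is part of why I'm asking about partition sizes.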

Any help greatly appreciated!
