Good evening, I have been poring over an interesting problem and could use some deep insight. Windows Server 2025 Core has much more overhead than expected.

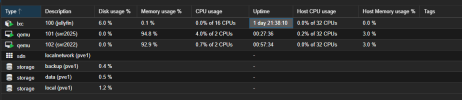

I am testing Windows Server 2025 Core (and Desktop) on Proxmox 8.3, with all paravirtualized features enabled and the agent installed.

On the VM, all VirtIO drivers have been installed and VBS has been explicitly disabled. The OS is freshly installed and completely idle, with no software installed. It has been running idle for quite some time, so there are no background or startup tasks.

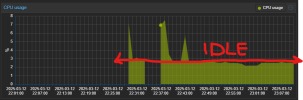

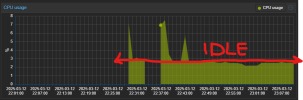

I have tested various combinations of CPU flags and KVM arguments, both with and without HPET, on two separate hosts (Proxmox production and test), on both kernel 6.8 and kernel 6.11. However, try as I might, over three days and many combinations of settings, the Windows VM consumes a large share of the host's resources. I would not expect this based on host CPU consumption under Hyper-V or VMware. Meanwhile the VM itself performs very well: it is snappy and its I/O performance is excellent, but host efficiency is clearly not what it should be.

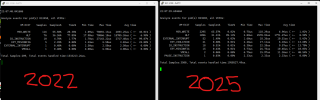

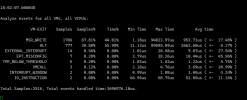

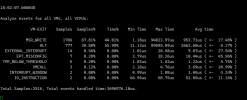

When analyzing with perf, I see a notable number of KVM VM exits, but it's difficult to assess how large that consumption is relative to the host's resources and to the resources allocated to the VM.
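One way to put a number on "consumption versus host resources and allocated resources" is to sample the QEMU process's CPU time from `/proc` on the Proxmox host. Below is a minimal Python sketch, assuming the VM's QEMU PID is in `/var/run/qemu-server/<vmid>.pid` (the usual Proxmox location) and that you pass the VMID and the number of vCPUs allocated to the VM. The helper names (`parse_cpu_ticks`, `cpu_usage`, `main`) are hypothetical, not part of any Proxmox tool.

```python
import os
import sys
import time


def parse_cpu_ticks(stat_line: str) -> int:
    """Return utime + stime (in clock ticks) from a /proc/<pid>/stat line."""
    # Field 2 (comm) is wrapped in parentheses and may contain spaces,
    # so only split the text after the last ')'.
    rest = stat_line[stat_line.rindex(")") + 1:].split()
    # rest[0] is field 3 (state); utime and stime are fields 14 and 15.
    utime, stime = int(rest[11]), int(rest[12])
    return utime + stime


def cpu_usage(delta_ticks: int, interval_s: float, clk_tck: int,
              vcpus: int, host_cpus: int) -> tuple[float, float, float]:
    """Convert a tick delta into (host cores busy, % of vCPUs, % of host CPUs)."""
    busy_s = delta_ticks / clk_tck
    # Average number of host cores kept busy by the whole QEMU process
    # (vCPU threads plus I/O and emulator threads).
    cores_used = busy_s / interval_s
    return cores_used, 100.0 * cores_used / vcpus, 100.0 * cores_used / host_cpus


def sample(pid: int) -> int:
    with open(f"/proc/{pid}/stat") as f:
        return parse_cpu_ticks(f.read())


def main(vmid: str, vcpus: int, interval_s: float = 10.0) -> None:
    # Assumption: Proxmox stores the QEMU PID in /var/run/qemu-server/<vmid>.pid.
    with open(f"/var/run/qemu-server/{vmid}.pid") as f:
        pid = int(f.read().strip())
    clk_tck = os.sysconf("SC_CLK_TCK")
    host_cpus = os.cpu_count() or 1
    t0, start = time.monotonic(), sample(pid)
    time.sleep(interval_s)
    t1, end = time.monotonic(), sample(pid)
    cores, pct_vcpu, pct_host = cpu_usage(end - start, t1 - t0, clk_tck,
                                          vcpus, host_cpus)
    print(f"VM {vmid}: {cores:.2f} host cores busy, "
          f"{pct_vcpu:.1f}% of {vcpus} vCPUs, "
          f"{pct_host:.1f}% of {host_cpus} host CPUs")


if __name__ == "__main__" and len(sys.argv) >= 3:
    main(sys.argv[1], int(sys.argv[2]))
```

Run it as, e.g., `python3 vm_cpu.py 100 4` (VMID 100 and 4 vCPUs are placeholders). Because the whole QEMU process is measured, the figure includes emulation and I/O thread overhead, not just guest vCPU time, which is what matters for density.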

What am I doing wrong here? I have tried everything under the sun, but idle resource consumption is far higher than expected.

I will test all ideas, good and bad; I'm committed to making this efficient, whatever it costs me in personal time. I start a new role as IT Manager of a robotics company in a few weeks, and I want Proxmox to be my primary platform for all containers and VMs across the enterprise, both Windows and Linux. I expect to scale, but this level of efficiency hurts virtualization density.

Thank you all, I appreciate your time.