Hello.

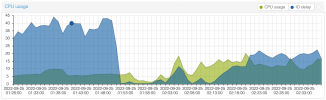

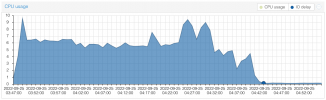

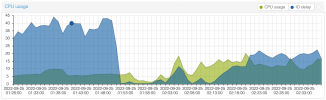

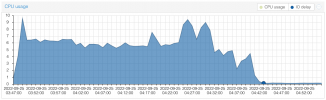

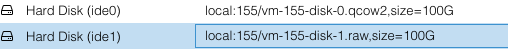

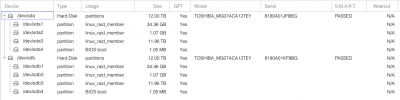

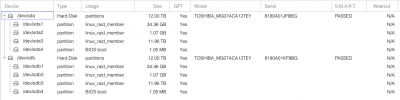

I am having severe IO delay problems on Proxmox 7.2 and see major speed issues, with VMs sometimes appearing to freeze.

My current configuration is:

CPU: AMD Ryzen 7 3700X 8-Core Processor

RAM: 64 GB DDR4

HDD: 2x 12 TB TOSHIBA MG07ACA1 (probably configured in software RAID1)

The speed issues and VM freezes appear when I install a new OS on new VMs, especially Windows:

Code:

pveperf

CPU BOGOMIPS: 115195.36

REGEX/SECOND: 3861393

HD SIZE: 11053.95 GB (/dev/md2)

BUFFERED READS: 228.65 MB/sec

AVERAGE SEEK TIME: 16.23 ms

FSYNCS/SECOND: 53.92

DNS EXT: 20.71 ms

I know that HDDs are slow compared to SSDs, but I did not imagine they would be this slow.
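To cross-check the FSYNCS/SECOND figure above independently of pveperf, something like the following could be run against a directory on the affected storage. This is only a rough sketch: it is not how pveperf itself measures, the function name `measure_fsyncs_per_second` and the 4 KiB write size are my own choices for illustration, and the result will depend on the filesystem and caching in between.

```python
import os
import tempfile
import time


def measure_fsyncs_per_second(directory, duration=1.0):
    """Write small chunks to a temp file, fsync after each, return syncs/sec.

    Hypothetical helper for illustration; not the pveperf algorithm.
    """
    count = 0
    with tempfile.NamedTemporaryFile(dir=directory) as handle:
        start = time.perf_counter()
        while True:
            handle.write(b"x" * 4096)   # small write, size chosen arbitrarily
            handle.flush()              # push Python's buffer to the OS
            os.fsync(handle.fileno())   # ask the OS to commit to the device
            count += 1
            elapsed = time.perf_counter() - start
            if elapsed >= duration:
                break
    return count / elapsed


if __name__ == "__main__":
    # Point this at a directory on the array under test instead of the
    # default temp directory, which may live on a different device.
    rate = measure_fsyncs_per_second(tempfile.gettempdir())
    print(f"fsyncs/second: {rate:.2f}")
```

If the number from such a test is in the same range as the pveperf result, that would at least suggest the low sync rate comes from the storage stack rather than from the benchmark.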

I have read that this HDD model uses CMR/PMR technology and not SMR. I should also mention that I installed 10 VMs at the same time. Is there any tool that can rearrange the memory zones on the disks to optimize read/write speed? Or would simply copying the whole disk image from the CLI solve this? I don't have time to reinstall all the VMs on another machine.

On this Proxmox machine, when the system is idle I see no more than 0.5 IO delay; I don't know what normal values are. I have also used Proxmox (Virtual Environment 6.4-15) on other HDD systems and did not have this problem at all: IO delay would not go above 0.005 (using a single HDD, not even RAID).
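To compare IO delay between machines outside the GUI, a sketch like the one below could sample the kernel counters directly. I am assuming here that the IO delay figure roughly corresponds to the CPU iowait share from `/proc/stat`; the function names are made up for illustration, and the value it returns is a percentage, which may not be on the same scale as the numbers I quoted above.

```python
import time


def read_cpu_times(stat_text):
    """Return the aggregate 'cpu' counters from /proc/stat content as ints."""
    for line in stat_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "cpu":
            return [int(value) for value in fields[1:]]
    raise ValueError("no aggregate 'cpu' line found")


def iowait_percent(before, after):
    """Share of CPU time spent in iowait between two samples, in percent."""
    deltas = [new - old for old, new in zip(before, after)]
    # Fields: user nice system idle iowait irq softirq steal (guest, guest_nice).
    # Guest time is already counted in user/nice, so only the first 8 are summed.
    total = sum(deltas[:8])
    if total <= 0:
        return 0.0
    return 100.0 * deltas[4] / total  # index 4 is iowait


def sample_iowait(interval=1.0, path="/proc/stat"):
    """Take two samples `interval` seconds apart and return the iowait share."""
    with open(path) as handle:
        first = read_cpu_times(handle.read())
    time.sleep(interval)
    with open(path) as handle:
        second = read_cpu_times(handle.read())
    return iowait_percent(first, second)
```

Calling `sample_iowait(5.0)` while idle and again during an OS install would give two figures that can be compared between this host and the older 6.4 machines.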

Thank you.
