This is no longer a question, since I figured it out on my own, but I'd like to share what I found.

Spoiler alert: this is not directly related to Proxmox but rather to MySQL maintenance, which might be obvious to experienced admins, but it wasn't to me.

I'm running Proxmox Virtual Environment 6.2-4 on an HP ProLiant DL360p Gen8 server with four of the cheapest SSDs from several brands, in an inefficient 2x RAID 1 configuration (in the hope of easier recovery in case of corruption). The onboard RAID controller is an HP Smart Array P420i.

There are 4 VMs and 7 CTs on it.

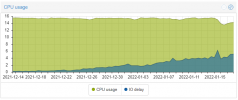

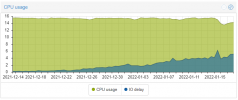

Recently I noticed (through delays on other servers) that things were getting sluggish. The graphs below show IO delay increasing over time.

Yearly average

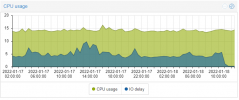

Monthly average

After some googling and using `atop`, `iotop` and `iostat`, I determined that MySQL was responsible for the majority of the IO throughput. Even though the traffic was not enormous (max read was ~5-70MB/s), it caused the delay. I'm sure the poor RAID performance, with no hardware cache module installed in the server, was part of the issue and is why this much traffic was enough to slow things down, but the lesson still applies to better-performing setups.
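For anyone curious what `iotop` is actually measuring, here is a minimal Python sketch (not what I ran, just an illustration) that reads the per-process `read_bytes`/`write_bytes` counters from Linux's `/proc/<pid>/io` twice and ranks processes by throughput. It assumes a Linux host and root privileges, since other users' `/proc/<pid>/io` files are not readable otherwise. On a Proxmox host, processes inside LXC containers appear in the host's `/proc`, while a VM's disk IO is attributed to its QEMU/KVM process. The function names are my own.

```python
import os
import time
from typing import Dict, List, Tuple

# pid -> (command name, cumulative read_bytes, cumulative write_bytes)
Snapshot = Dict[int, Tuple[str, int, int]]


def parse_proc_io(text: str) -> Tuple[int, int]:
    """Return (read_bytes, write_bytes) parsed from the contents of /proc/<pid>/io."""
    fields: Dict[str, int] = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = int(value)  # int() tolerates surrounding spaces
    return fields.get("read_bytes", 0), fields.get("write_bytes", 0)


def snapshot() -> Snapshot:
    """Read the IO counters of every process we are allowed to inspect."""
    result: Snapshot = {}
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue
        pid = int(entry)
        try:
            with open(f"/proc/{pid}/comm") as f:
                name = f.read().strip()
            with open(f"/proc/{pid}/io") as f:
                read_bytes, write_bytes = parse_proc_io(f.read())
        except OSError:
            continue  # process exited meanwhile, or no permission (not root)
        result[pid] = (name, read_bytes, write_bytes)
    return result


def top_io(before: Snapshot, after: Snapshot, interval: float,
           limit: int = 5) -> List[Tuple[float, int, str, float, float]]:
    """Rank processes by combined read+write rate in MB/s over the interval."""
    rates = []
    for pid, (name, rb1, wb1) in after.items():
        if pid not in before:
            continue  # process started during the interval
        _, rb0, wb0 = before[pid]
        read_mb = (rb1 - rb0) / interval / 1e6
        write_mb = (wb1 - wb0) / interval / 1e6
        rates.append((read_mb + write_mb, pid, name, read_mb, write_mb))
    rates.sort(reverse=True)
    return rates[:limit]


def main(interval: float = 2.0) -> None:
    if not os.path.isdir("/proc"):
        print("This sketch needs a Linux /proc filesystem.")
        return
    first = snapshot()
    time.sleep(interval)
    second = snapshot()
    for _, pid, name, read_mb, write_mb in top_io(first, second, interval):
        print(f"{pid:>7} {name:<16} read {read_mb:7.2f} MB/s  write {write_mb:7.2f} MB/s")


if __name__ == "__main__":
    main()
```

In my case a `mysqld` process would be expected near the top of such a list; the real tools (`iotop`, `atop`) do the same kind of sampling with far more detail.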

I checked the SMART records on all the disks and the server hardware temperatures, and found that the RAID controller runs hot (85°C). Although that is within the acceptable range (up to 100°C), it was a possible suspect.

To help the RAID controller, I'm planning to "hack" a cooling solution for it. I have also ordered a cache module for the server in the hope that it will improve performance.

Meanwhile, I started exploring DB maintenance. With the help of dbForge Studio for MySQL, I was able to easily run `CHECK` and `ANALYZE` on all of the tables. This yielded nothing interesting: all tables reported "OK" status. Next in line was `OPTIMIZE`:

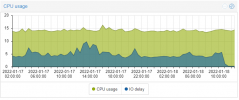
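dbForge hides the SQL, so here is a rough Python sketch of doing the same by hand: query `information_schema.TABLES` for each table's size and reported free space, then generate `OPTIMIZE TABLE` statements for the most fragmented ones. The `TableStats` type, the helper names and the 10% threshold are my own assumptions, not what dbForge does. Keep in mind that the "Table does not support optimize, doing recreate + analyze instead" note is what MySQL prints for InnoDB tables: there `OPTIMIZE TABLE` is mapped to a full table rebuild, which on tables with millions of rows needs free disk space and time, so a quiet window is a good idea. `DATA_FREE` is only a rough signal; for InnoDB tables stored in the shared system tablespace it reports the free space of that whole tablespace.

```python
from typing import Iterable, List, NamedTuple

# Run this with any MySQL client/driver; column order matches TableStats below.
FRAGMENTATION_QUERY = """
SELECT TABLE_SCHEMA, TABLE_NAME,
       COALESCE(DATA_LENGTH, 0), COALESCE(INDEX_LENGTH, 0), COALESCE(DATA_FREE, 0)
FROM information_schema.TABLES
WHERE TABLE_TYPE = 'BASE TABLE'
  AND TABLE_SCHEMA NOT IN ('mysql', 'information_schema', 'performance_schema', 'sys')
"""


class TableStats(NamedTuple):
    schema: str
    name: str
    data_length: int   # bytes
    index_length: int  # bytes
    data_free: int     # bytes allocated but unused, as reported by MySQL


def quote_ident(identifier: str) -> str:
    """Backtick-quote a MySQL identifier, doubling any embedded backtick.

    Needed for names such as `group`, which is a reserved word."""
    return "`" + identifier.replace("`", "``") + "`"


def free_ratio(t: TableStats) -> float:
    """Fraction of the table's allocated space that is reported as free."""
    total = t.data_length + t.index_length + t.data_free
    return t.data_free / total if total else 0.0


def optimize_statements(rows: Iterable[TableStats], min_ratio: float = 0.10) -> List[str]:
    """OPTIMIZE TABLE statements for tables at or above min_ratio, most fragmented first."""
    candidates = [t for t in rows if free_ratio(t) >= min_ratio]
    candidates.sort(key=free_ratio, reverse=True)
    return [
        f"OPTIMIZE TABLE {quote_ident(t.schema)}.{quote_ident(t.name)};"
        for t in candidates
    ]
```

Each result row of `FRAGMENTATION_QUERY` can be wrapped as `TableStats(*row)` before passing it to `optimize_statements`; reviewing the generated statements before running them keeps the rebuilds under your control.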

And just like that, the IO delay was gone.

Hourly average

The database causing the issue has tables with around 8,000,000 and 14,000,000 records.

Hopefully this will be useful to other inexperienced admins.
