HDD never spin down

- Thread starter charlot rodolphe

Using

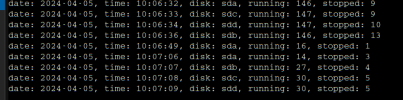

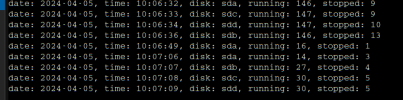

I see this which causes the disks to spin up:

Something from zfs keeps the disks spinning?

Code:

blktrace -d /dev/sdc -o - | blkparse -i -

Code:

8,32 7 173 1155.524252951 4140 I W 14991898680 + 8 [z_wr_int_1]

8,32 7 174 1155.524254007 4140 D W 14991898680 + 8 [z_wr_int_1]

8,32 7 175 1155.525452948 558788 A W 14856242120 + 32 <- (8,33) 14856240072

8,32 7 176 1155.525453616 558788 Q W 14856242120 + 32 [z_wr_int_0]

8,32 7 177 1155.525455476 558788 G W 14856242120 + 32 [z_wr_int_0]

Code:

8,32 4 616 1155.464635571 558694 P N [z_wr_iss]

8,32 4 617 1155.464635796 558694 U N [z_wr_iss] 1

8,32 4 618 1155.464636009 558694 I W 14855845584 + 8 [z_wr_iss]

8,32 4 619 1155.464637397 558694 D W 14855845584 + 8 [z_wr_iss]

Something from zfs keeps the disks spinning?
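For traces longer than a few lines it can help to count which process names actually dispatch requests to the disk. The following is only a rough sketch: it assumes the default blkparse text layout shown above (action in the sixth column, process name in square brackets), and the function name `summarize_blkparse` is made up for this example. Names such as z_wr_iss and z_wr_int_N are ZFS write threads, so they point at pool writes rather than at LVM scans.

```sh
#!/bin/sh
# Count dispatched requests ("D" events) per process name in blkparse text output.
# Assumes the default blkparse line layout: dev cpu seq time pid action rwbs ... [name]
summarize_blkparse() {
    awk '
        $6 == "D" {
            # Walk backwards to find the bracketed process name.
            for (i = NF; i > 6; i--) {
                if ($i ~ /^\[.*\]$/) {
                    name = substr($i, 2, length($i) - 2)
                    count[name]++
                    break
                }
            }
        }
        END {
            for (name in count) printf "%s %d\n", name, count[name]
        }
    ' | LC_ALL=C sort
}
```

Possible usage, letting blktrace stop by itself after 60 seconds so that the summary gets printed: `blktrace -d /dev/sdc -w 60 -o - | blkparse -i - | summarize_blkparse`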

Hello, I found a way to keep unused disks in standby.

I see all HDDs become active when I use LVM commands (like lvdisplay), and pvestatd calls LVM functions to get the status of the default local-lvm storage.

So I edited /etc/lvm/lvm.conf and appended a new filter rule to global_filter in the devices { } section.

Now it is

global_filter = [ "r|/dev/zd.*|", "r|/dev/mapper/pve-.*|", "r|/dev/sdb.*|" ]

and now pvestatd no longer wakes my /dev/sdb.

I also tried this, however this can have an adverse side-effect: In my case, there is an LVM PV on that drive. When I exclude it via a global filter, the VG does not get started at all.

I have resorted to excluding the device only for pvestatd, which indirectly uses /usr/share/perl5/PVE/Storage/LVMPlugin.pm in lvm_vgs():

Perl:

#my $cmd = ['/sbin/vgs', '--separator', ':', '--noheadings', '--units', 'b',

my $cmd = ['/sbin/vgs', '--config', 'devices { global_filter=["r|/dev/zd.*|","r|/dev/mapper/.*|","r|/dev/sda.*|"] }', '--separator', ':', '--noheadings', '--units', 'b',

'--unbuffered', '--nosuffix', '--options'];

However, it seems there are two more calls to LVM, like:

Code:

$cmd = ['/sbin/pvs', '--config', 'devices { global_filter=["r|/dev/zd.*|","r|/dev/mapper/.*|","r|/dev/sda.*|"] }', '--separator', ':', '--noheadings', '--units', 'k',

'--unbuffered', '--nosuffix', '--options',

'pv_name,pv_size,vg_name,pv_uuid', $device];

and

Code:

my $cmd = [

'/sbin/lvs', '--separator', ':', '--noheadings', '--units', 'b',

'--unbuffered', '--nosuffix',

'--config', 'report/time_format="%s" devices { global_filter=["r|/dev/zd.*|","r|/dev/mapper/.*|","r|/dev/sda.*|"] }',

'--options', $option_list,

];

I have successfully disabled pvestatd via modification of /usr/share/perl5/PVE/Storage/LVMPlugin.pm.

However, I reverted that change, because it had an adverse side-effect: Every night, upon automatic backup, the first backup always failed with a timeout, because the disk did not spin up fast enough, giving an error like:

Code:

cannot determine size of volume 'local-lvm:vm-120-disk-0' - command '/sbin/lvs --separator : --noheadings --units b --unbuffered --nosuffix --options lv_size /dev/pve/vm-120-disk-0' failed: got timeout

Same issue here. Did you find a solution?

Maybe it is pvestatd, but not every 8 seconds. The disk starts spinning again after several tens of minutes.

What I have done so far:

- created a ZFS partition "local-backup2" on /dev/sda at node "pve01"

- mounted the ZFS partition as directory "local-backup"

- added a storage "backup-dir" in "/local-backup2" at datacenter for content "VZDump backup file"

- excluded the devices in lvm.conf from scanning

- activated spindown in hdparm.conf

- excluded /dev/sda from smartd scans

Code:

root@pve01:~# mount |grep backup

local-backup2 on /local-backup2 type zfs (rw,relatime,xattr,noacl,casesensitive)

local-backup2/subvol-113-disk-1 on /local-backup2/subvol-113-disk-1 type zfs (rw,relatime,xattr,posixacl,casesensitive)

local-backup2/backup-dir on /local-backup2/backup-dir type zfs (rw,relatime,xattr,noacl,casesensitive)

Code:

root@pve01:~# tail -4 /etc/lvm/lvm.conf

devices {

# added by pve-manager to avoid scanning ZFS zvols

global_filter=["r|/dev/disk/by-label/local-backup2.*|", "r|/dev/sda.*|", "r|/dev/zd.*|", "r|/dev/mapper/pve-.*|"]

}

Code:

root@pve01:~# tail -4 /etc/hdparm.conf

/dev/disk/by-id/ata-ST1750LM000_HN-M171RAD_S34Dxxxxxxxxxx {

spindown_time = 120

}

Code:

root@pve01:~# cat /etc/smartd.conf

[...]

/dev/sda -d ignore

DEVICESCAN -d removable -n standby -m root -M exec /usr/share/smartmontools/smartd-runner

[...]

Spindown works as configured in hdparm.conf, but the disk starts spinning with the same blktrace messages as user flove (see above).

Hi,

here is a checklist of what can keep the disk running:

https://rudd-o.com/linux-and-free-software/tip-letting-your-zfs-pool-sleep

Excuse me, respected Professor Wolfgang. May I ask for your advice on the hardware export issue of Proxmox?

Here is my question link: https://forum.proxmox.com/threads/h...f-all-virtual-machines-in-the-cluster.138730/

Finally I found the reason: I accidentally installed one LXC on this HDD. After moving the LXC to the SSD it works; the HDD stays in standby.

Some time has passed since the post was created. Is anyone aware whether this has perhaps been implemented?

Hi, this looks like an option for my problem, but I can't get the code to work. I copied the following section to the config file, but I am getting the following error:

"Parse error at byte 111831 (line 2454): unexpected token". Any suggestions? Sorry, I am not an expert in such deep Linux stuff and I am new to the Proxmox universe.

Code:

my $cmd = [

'/sbin/lvs', '--separator', ':', '--noheadings', '--units', 'b',

'--unbuffered', '--nosuffix',

'--config', 'report/time_format="%s" devices { global_filter=["r|/dev/zd.*|","r|/dev/mapper/.*|","r|/dev/sda.*|"] }',

'--options', $option_list,

];

Here's what I did:

Code:

||/ Name Version Architecture Description

+++-==============-============-============-============================================

ii pve-manager 8.1.5 amd64 Proxmox Virtual Environment Management Tools

Diff:

--- /usr/share/perl5/PVE/Storage/LVMPlugin.pm 2024-03-31 21:05:44.756599416 -0700

+++ /usr/share/perl5/PVE/Storage/LVMPlugin.pm 2024-03-31 21:03:55.585610904 -0700

@@ -111,7 +111,8 @@

sub lvm_vgs {

my ($includepvs) = @_;

- my $cmd = ['/sbin/vgs', '--separator', ':', '--noheadings', '--units', 'b',

+ my $cmd = ['/sbin/vgs', '--config', 'devices { global_filter=["r|/dev/mapper/.*|","r|/dev/sd.*|"] }',

+ '--separator', ':', '--noheadings', '--units', 'b',

'--unbuffered', '--nosuffix', '--options'];

my $cols = [qw(vg_name vg_size vg_free lv_count)];

@@ -510,13 +511,13 @@

# In LVM2, vgscans take place automatically;

# this is just to be sure

- if ($cache->{vgs} && !$cache->{vgscaned} &&

- !$cache->{vgs}->{$scfg->{vgname}}) {

- $cache->{vgscaned} = 1;

- my $cmd = ['/sbin/vgscan', '--ignorelockingfailure', '--mknodes'];

- eval { run_command($cmd, outfunc => sub {}); };

- warn $@ if $@;

- }

+ #if ($cache->{vgs} && !$cache->{vgscaned} &&

+ # !$cache->{vgs}->{$scfg->{vgname}}) {

+ # $cache->{vgscaned} = 1;

+ # my $cmd = ['/sbin/vgscan', '--ignorelockingfailure', '--mknodes'];

+ # eval { run_command($cmd, outfunc => sub {}); };

+ # warn $@ if $@;

+ #}

# we do not acticate any volumes here ('vgchange -aly')

# instead, volumes are activate individually later

I can't get this to work. I am using hd-idle.

Things I have tried:

- disabling smartd

- disabling smartmontools

- disabling pvestatd

- LVM devices

Code:

global_filter=["r|/NAS.*|", "r|/dev/disk/by-label/NAS.*|", "r|/dev/sda.*|", "r|/dev/sdb.*|", "r|/dev/sdc.*|", "r|/dev/sdd.*|", "r|/dev/zd.*|", "r|/dev/mapper/pve-.*|"]

- Changing from storage directories > ZFS storage

Any suggestions?

Thank you for this, it is very close.

Hello, I do not have time to write a nice post but here are my Evernote notes:

spindown / sleep disks

you can edit /etc/lvm/lvm.conf to exclude your drives. Just reject them via global filter.

- ok if:

- pvestatd stop (disable disks stats)

- then hdparm -y or -Y /dev/sda / sdd

- usb 500 GB slim doesn't accept but 8TB de ATLAS ok

- disable selected stats: https://forum.openmediavault.org/index.php?thread/30290-proxmox-spin-down-hdd/

The problem is pvestatd which is constantly scanning your drives.

eg.: dont scan sda and sdb:

global_filter = [ "r|/dev/sda.*|", "r|/dev/sdb.*|" ,"r|/dev/zd.*|", "r|/dev/mapper/pve-.*|" "r|/dev/mapper/.*-(vm|base)--[0-9]+--disk--[0-9]+|"]

other: https://forum.proxmox.com/threads/pvestatd-doesnt-let-hdds-go-to-sleep.29727/

other: https://forum.proxmox.com/threads/upgrade-from-5-x-to-6-x-harddrives-dont-sleep-no-more.69672/

blktrace -d /dev/sdc -o - | blkparse -i -

which showed immediately that lvs and vgs were responsible.

I excluded the drive in lvm.conf, and it is working now.

Code:

sleep 300 && hdparm -C /dev/sdc

/dev/sdc:

drive state is: standby

Edited LVM.conf:

Filter by-id

global_filter = ["r|/dev/disk/by-id/ata-ST8000DM004-2CX188_ZCT0D032*|","r|/dev/sdd*|", "r|/dev/zd.*|", "r|/dev/mapper/pve-.*|" "r|/dev/mapper/.*-(vm|base)--[0-9]+--disk--[0-9]+|"]

[]add /mnt/pve/BackupsDS718 to sleep DS718 ?

To manually spin down sda:

hdparm -y /dev/sda

OKKKKK but not yet automatic (doesn't spindown after XX minutes).

Trying this: (not working)

Added at: /etc/hdparm.conf

/dev/sda {

spindown_time = 60

apm = 127

}

This works (for the SATA 8TB Windows datastore; disable the scan in lvm.conf first!):

Code:

hdparm -S 12 /dev/sdc

/dev/sdc:

setting standby to 12 (1 minutes)

or

Code:

hdparm -S 240 /dev/sdc

/dev/sdc:

setting standby to 240 (20 minutes)

- [] But does it stay after a reboot ???

- If not, bulletproof version: create a bash file: /root/scripts/cron_hdparm.sh

#!/bin/sh

# sleep SATA 8TB Datastore W2019

echo \/-----------------------------------\/

date

/usr/sbin/hdparm -C /dev/disk/by-id/ata-ST8000DM004-2CX188_ZCT0D032

/usr/sbin/hdparm -S 240 /dev/disk/by-id/ata-ST8000DM004-2CX188_ZCT0D032

#sleep 10

#hdparm -C /dev/disk/by-id/ata-ST8000DM004-2CX188_ZCT0D032

- Then Cron it twice a day !

crontab -e

#6h and 18h each day

0 6,18 * * * /root/scripts/cron_hdparm.sh >> /root/scripts/cron_hdparm.log 2>&1

infos config sleep hdparm:

==> spindown_time: see https://blog.bravi.org/?p=134

0 = disabled

1..240 = multiples of 5 seconds (5 seconds to 20 minutes)

241..251 = 1..11 x 30 mins

252 = 21 mins

253 = vendor defined (8..12 hours)

254 = reserved

255 = 21 mins + 15 secs
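To double-check a value before putting it into hdparm.conf or passing it to hdparm -S, the table above can be turned into a small helper. This is just a sketch: the function name `decode_spindown` is invented here, and it only mirrors the table; some drives are reported to ignore or reinterpret these values, so the real behaviour still has to be checked with hdparm -C.

```sh
#!/bin/sh
# Decode an hdparm -S / spindown_time value into a readable timeout,
# following the value table in the notes above.
decode_spindown() {
    v=$1
    if [ "$v" -eq 0 ]; then
        echo "disabled"
    elif [ "$v" -ge 1 ] && [ "$v" -le 240 ]; then
        secs=$((v * 5))                      # units of 5 seconds
        echo "$((secs / 60)) min $((secs % 60)) s"
    elif [ "$v" -ge 241 ] && [ "$v" -le 251 ]; then
        echo "$(( (v - 240) * 30 )) min 0 s" # units of 30 minutes
    elif [ "$v" -eq 252 ]; then
        echo "21 min 0 s"
    elif [ "$v" -eq 253 ]; then
        echo "vendor defined (8..12 hours)"
    elif [ "$v" -eq 254 ]; then
        echo "reserved"
    elif [ "$v" -eq 255 ]; then
        echo "21 min 15 s"
    else
        echo "invalid"
        return 1
    fi
}
```

For the values used in this thread, `decode_spindown 12` gives 1 minute, 60 gives 5 minutes, 120 gives 10 minutes and 240 gives 20 minutes.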

I will not make any response or support, take my note as they are, hope it helps, sorry, no time here.

If you try and it works and you have time, please give your experience below.

Save energy ! ;-)

But I discovered something from this link.

I know this is an old thread but I figured I will post a solution I found for those who would like to lower case temps and save a few watts by spinning down drives.

As evidenced in the screenshot above I found that every 10 seconds commands 'lvs' and 'vgs' are issued on the host which prevents drive spin-down. In my case all of the drives except 1 are part of 2 zfs pools and do not need to be scanned by 'lvs' and 'vgs' - which by default look at all block devices found in /dev.

The solution for me was to edit /etc/lvm/lvm.conf and add drives I do not want scanned to the filter section -...

The correct syntax for adding an sd device is .* not *

So e.g.

Code:

"r|/dev/sde.*|"

not

Code:

"r|/dev/sde*|"

Without the dot before the asterisk, only one LVM group was displayed and the drive was still woken up. Now it works properly. I also added the drive by-id as well, which seems to work fine.

I added a 12TB spindle for planned future use, so there is no point in it spinning while it's not even partitioned yet. Thanks to everyone who contributed to this.
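The difference between .* and * in the filter can be tried out without touching lvm.conf. The sketch below uses grep -E as a stand-in for LVM's own pattern matching, which is an assumption; the helper name `matches` is made up. The point it illustrates is that the patterns are unanchored regular expressions, so `/dev/sde*` means "/dev/sd followed by zero or more e" and therefore also hits /dev/sda, /dev/sdb and so on, which could explain why volume groups on other disks disappeared.

```sh
#!/bin/sh
# Return success if the device path matches the (unanchored) reject pattern.
# grep -E stands in for LVM's regex matcher here (assumption for illustration).
matches() {
    printf '%s\n' "$2" | grep -Eq -- "$1"
}

# Compare both patterns against a few example device paths.
for dev in /dev/sde /dev/sde1 /dev/sda /dev/sdb2; do
    for pat in '/dev/sde.*' '/dev/sde*'; do
        if matches "$pat" "$dev"; then
            verdict="rejected"
        else
            verdict="kept"
        fi
        printf '%-12s %-12s %s\n' "$pat" "$dev" "$verdict"
    done
done
```

With `/dev/sde.*` only the sde paths are rejected, while with `/dev/sde*` all four example paths are rejected.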

However if I load the disk summary page it wakes up the drive, so there is an extra step needed for complete immunity to wake up.

Think these are the culprits, these run for every drive when accessing the disk summary page. Can add -n standby for smartctl, not sure about udevadm command.

Code:

3305 1238671 udevadm info -p /sys/block/sde --query all

3305 1238671 udevadm info -p /sys/block/sde --query all

3309 1238672 /usr/sbin/smartctl -H /dev/sde

Fixed, I patched 'Diskmanage.pm', changing

Code:

my $SMARTCTL = "/usr/sbin/smartctl";

to

Code:

my $SMARTCTL = "/usr/sbin/smartctl -n standby";

Have to do 'service pvedaemon restart' after.

It will prevent accessing an individual disk's SMART values from the GUI; they will display as UNKNOWN. It looks like the Proxmox code freaks out if the initial scan hits a drive in standby, so it would need a further patch, or you just access it in the CLI. But the main disk summary screen remains functional and shows partitions. I will leave it as is for now (no GUI SMART). I have verified that the new commands it spits out do give valid output; it seems the extra argument is maybe breaking a validity check in the Proxmox code or something, and I am not great at Perl.

I've read and tried to apply the tips in this thread, but so far no luck.

I have a pool of 8 SAS drives (all in a z2 zfs pool) with spindown configured with sdparm, known to be working as I did get it to work on TrueNAS before switching to Proxmox.

Here is my lvm.conf:

Code:

devices {

# added by pve-manager to avoid scanning ZFS zvols and Ceph rbds

global_filter=["r|/dev/zd.*|", "r|/dev/rbd.*|", "r|/dev/mapper/pve-.*|", "r|/dev/sda.*|", "r|/dev/sdb.*|", "r|/dev/sdc.*|", "r|/dev/sdd.*|", "r|/dev/sde.*|", "r|/dev/sdf.*|", "r|/dev/sdg.*|", "r|/dev/sdh.*|"]

}

However, when running

blktrace -d /dev/sda -o - | blkparse -i -

I still get output like this:

Code:

8,0 5 1 0.000000000 26959 D R 36 [smartctl]

8,0 5 2 0.000662194 26959 D R 18 [smartctl]

8,0 5 3 0.001259270 26959 D R 252 [smartctl]

8,0 5 4 0.001902752 26959 D R 36 [smartctl]

8,0 5 5 0.002512097 26959 D R 32 [smartctl]

8,0 5 6 0.003184064 26959 D R 8 [smartctl]

8,0 5 7 0.003758121 26959 D R 64 [smartctl]

8,0 5 8 0.006147579 26959 D R 64 [smartctl]

8,0 17 1 0.000614037 0 C R [0]

8,0 17 2 0.001248970 0 C R [0]

8,0 17 3 0.001878224 0 C R [0]

8,0 17 4 0.002464220 0 C R [0]

8,0 17 5 0.003103347 0 C R [0]

8,0 17 6 0.003718553 0 C R [0]

8,0 17 7 0.006112511 0 C R [0]

8,0 17 8 0.007223956 0 C R [0]

8,0 17 9 0.008250268 0 C R [0]

8,0 17 10 0.008913322 0 C R [0]

8,0 17 11 0.009493070 0 C R [0]

Even when disabling smartmontools/smartd, the output does not change. This makes me think that something is calling smartctl explicitly on my drives.

After doing changes I rebooted the node to make sure all changes are applied, still, no luck.

I'd be grateful for any input

I did edit the smart daemon configuration (by default it checks everything it finds on the system): I added drives manually with the -n standby flag, so SMART isn't requested if the drive is in idle or standby state. However, you said you have killed the daemon, so that would rule that out. Otherwise the only time I have seen smartctl get called is when you check the disk summary screen, which I posted about in my post above. My spindle has been spun down for several days now.

With that said, my drive is not yet configured for any use; I just installed it physically, ready for future use, and ran a SMART long test on it, so it's possible some extra SMART commands get called for drives configured in a pool.

The change I mentioned above might fix your problem, as the smartctl syntax seems to be defined in one place and then used as a variable by the different bits of code that call it.

The value of the SMART checks seems limited. For example, it doesn't warn you when CRC errors are being logged in SMART, as was happening to one of my SATA SSDs due to a kink in the cable near the connector. I have now replaced the cable and took greater care in installing the new one. What alerted me to the issue was the pool checksum errors.

Thanks for your reply.

I double checked to ensure that no service is running:

Code:

root@proxmox:~# systemctl --type=service --state=running

UNIT LOAD ACTIVE SUB DESCRIPTION

chrony.service loaded active running chrony, an NTP client/server

cron.service loaded active running Regular background program processing daemon

dbus.service loaded active running D-Bus System Message Bus

getty@tty1.service loaded active running Getty on tty1

ksmtuned.service loaded active running Kernel Samepage Merging (KSM) Tuning Daemon

lxc-monitord.service loaded active running LXC Container Monitoring Daemon

lxcfs.service loaded active running FUSE filesystem for LXC

ModemManager.service loaded active running Modem Manager

NetworkManager.service loaded active running Network Manager

polkit.service loaded active running Authorization Manager

postfix@-.service loaded active running Postfix Mail Transport Agent (instance -)

pve-cluster.service loaded active running The Proxmox VE cluster filesystem

pve-container@105.service loaded active running PVE LXC Container: 105

pve-container@107.service loaded active running PVE LXC Container: 107

pve-firewall.service loaded active running Proxmox VE firewall

pve-ha-crm.service loaded active running PVE Cluster HA Resource Manager Daemon

pve-ha-lrm.service loaded active running PVE Local HA Resource Manager Daemon

pve-lxc-syscalld.service loaded active running Proxmox VE LXC Syscall Daemon

pvedaemon.service loaded active running PVE API Daemon

pvefw-logger.service loaded active running Proxmox VE firewall logger

pveproxy.service loaded active running PVE API Proxy Server

pvescheduler.service loaded active running Proxmox VE scheduler

pvestatd.service loaded active running PVE Status Daemon

qmeventd.service loaded active running PVE Qemu Event Daemon

rpcbind.service loaded active running RPC bind portmap service

rrdcached.service loaded active running LSB: start or stop rrdcached

spiceproxy.service loaded active running PVE SPICE Proxy Server

ssh.service loaded active running OpenBSD Secure Shell server

systemd-journald.service loaded active running Journal Service

systemd-logind.service loaded active running User Login Management

systemd-udevd.service loaded active running Rule-based Manager for Device Events and Files

user@0.service loaded active running User Manager for UID 0

watchdog-mux.service loaded active running Proxmox VE watchdog multiplexer

webmin.service loaded active running Webmin server daemon

wpa_supplicant.service loaded active running WPA supplicant

zfs-zed.service loaded active running ZFS Event Daemon (zed)

I also made sure I had no open Proxmox web UI - the disks still jump around between ACTIVE and IDLE BY TIMER (every few seconds).

As far as I can tell they only reach IDLE_A, not even IDLE_B.

For sanity, I also disabled all VMs/containers which can have access to the pool in question (and therefore the 8 disks), nothing.

I also tried editing /usr/share/perl5/PVE/Diskmanage.pm as you suggested (tried both with -n idle and -n standby), followed by service pvedaemon restart; it did not change anything. Even with a broken command (so that smartctl cannot get called), it still shows smartctl checking in blktrace. So something is still calling smartctl - and I cannot say what process is responsible for it. (I can see it with htop --filter smartctl)

Why don't you replace smartctl with a script that calls "ps" in a way to show its caller?
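A wrapper along those lines could look roughly like this. It is only a sketch under several assumptions: the real binary is first moved to /usr/sbin/smartctl.real, the log goes to /var/log/smartctl-callers.log, and the function name `describe_ancestry` is made up; none of these paths or names come from Proxmox. A package upgrade of smartmontools would presumably overwrite the wrapper again.

```sh
#!/bin/sh
# Hypothetical smartctl wrapper: logs who called it, then runs the real binary.
# REAL_SMARTCTL and CALLER_LOG are assumed locations, adjust as needed.
REAL_SMARTCTL=${REAL_SMARTCTL:-/usr/sbin/smartctl.real}
CALLER_LOG=${CALLER_LOG:-/var/log/smartctl-callers.log}

# Print one ps line (pid, ppid, command line) for the given PID and each ancestor.
describe_ancestry() {
    pid=$1
    while [ -n "$pid" ] && [ "$pid" -gt 0 ]; do
        ps -o pid= -o ppid= -o args= -p "$pid" || break
        pid=$(ps -o ppid= -p "$pid" | tr -d ' ')
    done
}

# Only act as a wrapper when actually invoked under the name "smartctl".
if [ "${0##*/}" = "smartctl" ]; then
    {
        date
        echo "args: $*"
        describe_ancestry "$PPID"
    } >> "$CALLER_LOG" 2>&1
    exec "$REAL_SMARTCTL" "$@"
fi
```

After installing it in place of smartctl, each call appends the chain of parent processes to the log, so the caller that keeps probing the disks should show up there.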