Hi, I have an HP ProLiant machine running Proxmox and I am getting very low read/write speeds on my ZFS pool. The problem can even block all my VMs.

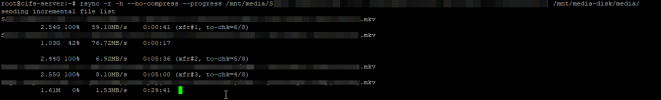

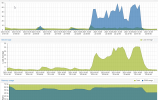

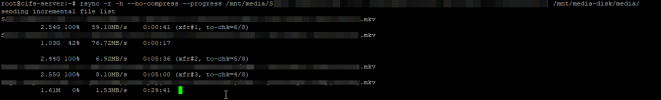

My server: ProLiant DL360e Gen8

- 2 x Intel(R) Xeon(R) CPU E5-2470 v2 @ 2.40GHz

- 72 GB ECC RAM @ 1333 MHz

- 3 x SanDisk SSD Plus SATA III 1TB (535 MB/s) on a SmartArray P420 (HBA mode) <<<---- This is configured with ZFS as RAID 5

- 1 x commercial SATA SSD for the Proxmox host

The low speeds make the system extremely slow, and eventually all virtual machines lock up because of the increased IO delay and load.

Limiting the disk speed of the virtual machines to 50 MB/s changes nothing; the speed still starts to drop rapidly.

The ZFS pool configuration is as follows:

Code:

    root@proxmox-1:~# zpool get all
    NAME             PROPERTY                       VALUE                 SOURCE
    Main_VM_Storage  size                           2.72T                 -
    Main_VM_Storage  capacity                       30%                   -
    Main_VM_Storage  altroot                        -                     default
    Main_VM_Storage  health                         ONLINE                -
    Main_VM_Storage  guid                           12320134737522795333  -
    Main_VM_Storage  version                        -                     default
    Main_VM_Storage  bootfs                         -                     default
    Main_VM_Storage  delegation                     on                    default
    Main_VM_Storage  autoreplace                    off                   default
    Main_VM_Storage  cachefile                      -                     default
    Main_VM_Storage  failmode                       wait                  default
    Main_VM_Storage  listsnapshots                  off                   default
    Main_VM_Storage  autoexpand                     off                   default
    Main_VM_Storage  dedupratio                     1.00x                 -
    Main_VM_Storage  free                           1.89T                 -
    Main_VM_Storage  allocated                      844G                  -
    Main_VM_Storage  readonly                       off                   -
    Main_VM_Storage  ashift                         12                    local
    Main_VM_Storage  comment                        -                     default
    Main_VM_Storage  expandsize                     -                     -
    Main_VM_Storage  freeing                        0                     -
    Main_VM_Storage  fragmentation                  31%                   -
    Main_VM_Storage  leaked                         0                     -
    Main_VM_Storage  multihost                      off                   default
    Main_VM_Storage  checkpoint                     -                     -
    Main_VM_Storage  load_guid                      3251330473448672712   -
    Main_VM_Storage  autotrim                       off                   default
    Main_VM_Storage  feature@async_destroy          enabled               local
    Main_VM_Storage  feature@empty_bpobj            active                local
    Main_VM_Storage  feature@lz4_compress           active                local
    Main_VM_Storage  feature@multi_vdev_crash_dump  enabled               local
    Main_VM_Storage  feature@spacemap_histogram     active                local
    Main_VM_Storage  feature@enabled_txg            active                local
    Main_VM_Storage  feature@hole_birth             active                local
    Main_VM_Storage  feature@extensible_dataset     active                local
    Main_VM_Storage  feature@embedded_data          active                local
    Main_VM_Storage  feature@bookmarks              enabled               local
    Main_VM_Storage  feature@filesystem_limits      enabled               local
    Main_VM_Storage  feature@large_blocks           enabled               local
    Main_VM_Storage  feature@large_dnode            enabled               local
    Main_VM_Storage  feature@sha512                 enabled               local
    Main_VM_Storage  feature@skein                  enabled               local
    Main_VM_Storage  feature@edonr                  enabled               local
    Main_VM_Storage  feature@userobj_accounting     active                local
    Main_VM_Storage  feature@encryption             enabled               local
    Main_VM_Storage  feature@project_quota          active                local
    Main_VM_Storage  feature@device_removal         enabled               local
    Main_VM_Storage  feature@obsolete_counts        enabled               local
    Main_VM_Storage  feature@zpool_checkpoint       enabled               local
    Main_VM_Storage  feature@spacemap_v2            active                local
    Main_VM_Storage  feature@allocation_classes     enabled               local
    Main_VM_Storage  feature@resilver_defer         enabled               local
    Main_VM_Storage  feature@bookmark_v2            enabled               local
    Main_VM_Storage  feature@redaction_bookmarks    enabled               local
    Main_VM_Storage  feature@redacted_datasets      enabled               local
    Main_VM_Storage  feature@bookmark_written       enabled               local
    Main_VM_Storage  feature@log_spacemap           active                local
    Main_VM_Storage  feature@livelist               enabled               local
    Main_VM_Storage  feature@device_rebuild         enabled               local
    Main_VM_Storage  feature@zstd_compress          enabled               local

Thank you very much for creating Proxmox and thank you very much for your help.
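P.S. The `zpool get all` dump is long, so here is a small sketch that trims such output to a handful of properties. The helper name `filter_zpool_props` is made up for illustration, and the choice of properties (capacity, fragmentation, ashift, autotrim) is only my assumption about what might matter for performance, not an official list.

```shell
# Keep the header line plus a few selected properties from
# `zpool get all`-style output read on stdin.
# NOTE: the property selection is an assumption, not an official list.
filter_zpool_props() {
    awk 'NR == 1 || $2 ~ /^(capacity|fragmentation|ashift|autotrim)$/'
}

# Example using lines copied from the pool output in this post.
printf '%s\n' \
    'NAME PROPERTY VALUE SOURCE' \
    'Main_VM_Storage size 2.72T -' \
    'Main_VM_Storage capacity 30% -' \
    'Main_VM_Storage ashift 12 local' \
    'Main_VM_Storage fragmentation 31% -' \
    'Main_VM_Storage autotrim off default' \
    | filter_zpool_props
```

On the live system the same selection should also be possible without a filter, since `zpool get` accepts a comma-separated property list in place of `all`, for example `zpool get capacity,fragmentation,ashift,autotrim Main_VM_Storage`.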