Thank you for looking into this!

journalctl:

https://pastebin.com/fj8U64CB

Task Log:

INFO: starting new backup job: vzdump 201 --mailto x@y.de.de --compress 0 --node pve --quiet 1 --storage Proxmox-Backup --mode snapshot --all 0 --mailnotification always

INFO: Starting Backup of VM 201 (qemu)

INFO: Backup started at 2021-08-19 22:26:44

INFO: status = running

INFO: VM Name: Nextcloud

INFO: include disk 'scsi0' 'local-zfs:vm-201-disk-1' 450G

INFO: backup mode: snapshot

INFO: ionice priority: 7

INFO: skip unused drive 'Proxmox-Backup:201/vm-201-disk-0.qcow2' (not included into backup)

INFO: creating vzdump archive '/media/usb/Backup5TB1/Proxmox-Backup/dump/vzdump-qemu-201-2021_08_19-22_26_43.vma'

INFO: issuing guest-agent 'fs-freeze' command

INFO: issuing guest-agent 'fs-thaw' command

INFO: started backup task '83f5b3b9-e043-4e66-906f-8bc59c12afb2'

INFO: resuming VM again

INFO: 0% (1.4 GiB of 450.0 GiB) in 5s, read: 292.2 MiB/s, write: 273.4 MiB/s

INFO: 1% (4.6 GiB of 450.0 GiB) in 40s, read: 93.6 MiB/s, write: 88.4 MiB/s

INFO: 2% (9.2 GiB of 450.0 GiB) in 1m 34s, read: 86.3 MiB/s, write: 82.8 MiB/s

INFO: 3% (13.6 GiB of 450.0 GiB) in 2m 29s, read: 82.1 MiB/s, write: 81.9 MiB/s

INFO: 4% (18.5 GiB of 450.0 GiB) in 3m 29s, read: 83.3 MiB/s, write: 83.2 MiB/s

INFO: 5% (22.6 GiB of 450.0 GiB) in 4m 19s, read: 83.7 MiB/s, write: 83.7 MiB/s

INFO: 6% (27.4 GiB of 450.0 GiB) in 5m 20s, read: 82.0 MiB/s, write: 82.0 MiB/s

INFO: 7% (31.9 GiB of 450.0 GiB) in 6m 16s, read: 81.8 MiB/s, write: 81.8 MiB/s

INFO: 8% (36.3 GiB of 450.0 GiB) in 7m 10s, read: 83.7 MiB/s, write: 83.7 MiB/s

INFO: 9% (41.0 GiB of 450.0 GiB) in 8m 8s, read: 83.3 MiB/s, write: 83.3 MiB/s

INFO: 10% (45.4 GiB of 450.0 GiB) in 9m 4s, read: 79.9 MiB/s, write: 79.9 MiB/s

Expected: $VAR1 = {

'id' => '304921:158',

'arguments' => {},

'execute' => 'qmp_capabilities'

};

Received: $VAR1 = {

'error' => {},

'return' => {},

'id' => '837591:1'

};

ERROR: VM 201 qmp command 'query-backup' failed - got wrong command id '837591:1' (expected 304921:158)

INFO: aborting backup job

INFO: resuming VM again

ERROR: Backup of VM 201 failed - VM 201 qmp command 'query-backup' failed - got wrong command id '837591:1' (expected 304921:158)

INFO: Failed at 2021-08-19 22:36:07

INFO: Backup job finished with errors

TASK ERROR: job errors

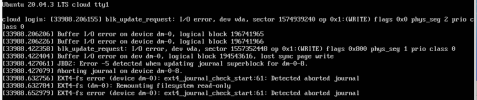
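For context on the error: QMP copies a command's `id` back into its reply, so "got wrong command id" means the reply read from the socket did not match the command that was just sent. It may have answered a different command or come from a different connection. Below is a minimal Python sketch of that kind of check. The class and method names are hypothetical, not Proxmox's actual code. The `<prefix>:<sequence>` id format is copied from the log, and treating the prefix as the client's process ID is an assumption.

```python
import json
import os
from itertools import count


class WrongCommandId(Exception):
    """Raised when a QMP reply carries an id other than the one we sent."""


class QmpIdTracker:
    """Hypothetical helper: tags outgoing QMP commands with '<pid>:<seq>' ids
    (format assumed from the log) and checks that each reply echoes its id."""

    def __init__(self, pid=None):
        # Assumption: the part before the colon identifies the client process.
        self.pid = os.getpid() if pid is None else pid
        self._seq = count(1)  # per-client command counter, starting at 1

    def make_command(self, execute, arguments=None):
        """Build a QMP command dict with a fresh id."""
        cmd_id = f"{self.pid}:{next(self._seq)}"
        return {"execute": execute, "arguments": arguments or {}, "id": cmd_id}

    @staticmethod
    def check_reply(command, reply_line):
        """Parse one JSON reply and reject it if its id does not match."""
        reply = json.loads(reply_line)
        if reply.get("id") != command["id"]:
            raise WrongCommandId(
                f"qmp command '{command['execute']}' failed - got wrong command id "
                f"'{reply.get('id')}' (expected {command['id']})"
            )
        if "error" in reply:
            # QMP error replies carry a 'class' and a human-readable 'desc'.
            raise RuntimeError(reply["error"].get("desc", "unknown QMP error"))
        return reply.get("return")


if __name__ == "__main__":
    tracker = QmpIdTracker(pid=304921)
    cmd = tracker.make_command("query-backup")
    # A reply tagged with another client's id is rejected, as in the task log.
    stale = json.dumps({"return": {}, "id": "837591:1"})
    try:
        tracker.check_reply(cmd, stale)
    except WrongCommandId as exc:
        print(exc)
```

Running it prints an error of the same shape as the one above: `qmp command 'query-backup' failed - got wrong command id '837591:1' (expected 304921:1)`.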