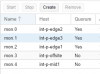

So I noticed that the two monitors that are not in quorum do not have /0 after their ports in the WebGUI, on the Monitor screen.

service ceph status:

ceph.service - LSB: Start Ceph distributed file system daemons at boot time

Loaded: loaded (/etc/init.d/ceph)

Active: active (exited) since Fri 2017-06-09 16:01:31 EDT; 1 weeks 6 days ago

Jun 09 16:01:30 int-p-mid1 ceph[1811]: === mon.4 ===

Jun 09 16:01:30 int-p-mid1 ceph[1811]: Starting Ceph mon.4 on int-p-mid1...

Jun 09 16:01:30 int-p-mid1 ceph[1811]: Running as unit ceph-mon.4.1497038490.341517916.service.

Jun 09 16:01:30 int-p-mid1 ceph[1811]: Starting ceph-create-keys on int-p-mid1...

Jun 09 16:01:31 int-p-mid1 systemd[1]: Started LSB: Start Ceph distributed file system daemons at boot time.

Ceph Status:

2017-06-22 16:15:35.517812 7f05e8329700 0 -- :/1543168526 >> 192.168.110.200:6789/0 pipe(0x7f05e4060030 sd=3 :0 s=1 pgs=0 cs=0 l=1 c=0x7f05e405dd20).fault

cluster 1c47df50-7ed0-47d9-af71-d92152a95edf

health HEALTH_OK

monmap e3: 3 mons at {0=192.168.110.202:6789/0,1=192.168.110.203:6789/0,2=192.168.110.201:6789/0}

election epoch 116, quorum 0,1,2 2,0,1

osdmap e1221: 15 osds: 15 up, 15 in

flags sortbitwise,require_jewel_osds

pgmap v1472042: 192 pgs, 2 pools, 450 GB data, 115 kobjects

1345 GB used, 2411 GB / 3756 GB avail

192 active+clean

client io 32521 B/s rd, 1757 kB/s wr, 8 op/s rd, 42 op/s wr

root@int-p-mid1:~# ceph status

2017-06-22 16:15:49.365284 7f94c0301700 0 -- :/426723825 >> 192.168.110.204:6789/0 pipe(0x7f94bc060030 sd=3 :0 s=1 pgs=0 cs=0 l=1 c=0x7f94bc05dd20).fault

cluster 1c47df50-7ed0-47d9-af71-d92152a95edf

health HEALTH_OK

monmap e3: 3 mons at {0=192.168.110.202:6789/0,1=192.168.110.203:6789/0,2=192.168.110.201:6789/0}

election epoch 116, quorum 0,1,2 2,0,1

osdmap e1221: 15 osds: 15 up, 15 in

flags sortbitwise,require_jewel_osds

pgmap v1472052: 192 pgs, 2 pools, 450 GB data, 115 kobjects

1345 GB used, 2411 GB / 3756 GB avail

192 active+clean

client io 84242 B/s rd, 385 kB/s wr, 19 op/s rd, 14 op/s wr
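Both runs show the same pattern. The client first tries a monitor address that is not in `monmap e3` (192.168.110.200 in the first run, 192.168.110.204 in the second) and logs a `.fault`, then reports status from the three monitors that are in quorum. These two addresses are possibly the two monitors shown without /0 in the WebGUI, but that is my guess, not something the output proves. Here is a quick Python sketch that makes the comparison explicit. It assumes the log lines copied from this post are representative. The regexes and the function names `monmap_addrs` and `faulted_peers` are my own and not part of any Ceph tooling:

```python
import re

# Lines copied from the two `ceph status` runs in this post.
MONMAP_LINE = ("monmap e3: 3 mons at {0=192.168.110.202:6789/0,"
               "1=192.168.110.203:6789/0,2=192.168.110.201:6789/0}")
FAULT_LINES = [
    "2017-06-22 16:15:35.517812 7f05e8329700 0 -- :/1543168526 >> "
    "192.168.110.200:6789/0 pipe(0x7f05e4060030 sd=3 :0 s=1 pgs=0 cs=0 "
    "l=1 c=0x7f05e405dd20).fault",
    "2017-06-22 16:15:49.365284 7f94c0301700 0 -- :/426723825 >> "
    "192.168.110.204:6789/0 pipe(0x7f94bc060030 sd=3 :0 s=1 pgs=0 cs=0 "
    "l=1 c=0x7f94bc05dd20).fault",
]

# Each monmap entry looks like <name>=<ip>:<port>/<nonce>.
MONMAP_ENTRY = re.compile(r"(\w+)=(\d+\.\d+\.\d+\.\d+:\d+)/\d+")
# In a fault line, the peer the client was connecting to follows ">>".
FAULT_PEER = re.compile(r">> (\d+\.\d+\.\d+\.\d+:\d+)/\d+ .*\.fault$")


def monmap_addrs(line: str) -> dict[str, str]:
    """Map monitor name -> ip:port for every monitor listed in a monmap line."""
    return {name: addr for name, addr in MONMAP_ENTRY.findall(line)}


def faulted_peers(lines: list[str]) -> set[str]:
    """Collect the ip:port of every peer that appears in a .fault line."""
    peers = set()
    for line in lines:
        match = FAULT_PEER.search(line)
        if match:
            peers.add(match.group(1))
    return peers


if __name__ == "__main__":
    in_monmap = set(monmap_addrs(MONMAP_LINE).values())
    outside = faulted_peers(FAULT_LINES) - in_monmap
    print("monitors in monmap:", sorted(in_monmap))
    print("faulted peers not in monmap:", sorted(outside))
```

Run against the lines above, it lists .201, .202 and .203 as the monmap members and .200 and .204 as the faulting peers that are missing from the monmap.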

Nothing out of the ordinary when restarting. What next? I have been banging my head on the table over this, lol.