Coming back to report the success I had last night. I had let this sit in my "wait for the developers to fix it" bucket for quite some time, but last night I mustered up the strength to try again, and it seems I have got it to work.

Proxmox Host Setup:

I am not sure how much of this is necessary, but I am not going to go back and remove things to see if it still works. This is what it is, and it's staying that way.

Updated to 7.3-4

Grub command (/etc/default/grub):

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt video=vesafb:off video=efifb:off initcall_blacklist=sysfb_init"
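
For anyone who wants to double-check that these parameters actually made it into the running kernel after a reboot (on a GRUB-booted host that normally means running update-grub first), here is a rough Python sketch. The function name `missing_params` and the `EXPECTED_HOST_PARAMS` list are just illustrative names, not part of any Proxmox tooling, and the list is simply copied from the line above.

```python
from pathlib import Path

# Parameters copied from the GRUB line above ("quiet" left out on purpose,
# since it has nothing to do with passthrough).
EXPECTED_HOST_PARAMS = [
    "intel_iommu=on",
    "iommu=pt",
    "video=vesafb:off",
    "video=efifb:off",
    "initcall_blacklist=sysfb_init",
]


def missing_params(cmdline: str, expected: list[str]) -> list[str]:
    """Return the expected parameters that do not appear in cmdline."""
    present = set(cmdline.split())
    return [p for p in expected if p not in present]


if __name__ == "__main__":
    # /proc/cmdline holds the command line of the currently running kernel.
    proc_cmdline = Path("/proc/cmdline")
    if proc_cmdline.exists():
        missing = missing_params(proc_cmdline.read_text(), EXPECTED_HOST_PARAMS)
        print("missing:", missing if missing else "none")
```

If it prints anything other than "none", the edited GRUB config probably has not been applied yet.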

my "/etc/modprobe.d/blacklist.conf" file has this in it:

blacklist igb

blacklist i915

my "/etc/modprobe.d/vfio.conf" file has this in it:

options vfio-pci ids=8086:150e,8086:4680 disable_vga=1

Running kernel 5.15.83-1-pve

Ubuntu 22.04 LTS Guest:

Kernel:

5.15.0-56-generic

Grub command (/etc/default/grub):

GRUB_CMDLINE_LINUX_DEFAULT="i915.force_probe=4680 i915.enable_gvt=1"
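
Once the guest boots with those parameters, a loaded GPU driver normally shows up as a render node under /dev/dri, and that is the kind of device hardware transcoding needs. A minimal sketch for checking this from inside the guest follows; `render_nodes` is a hypothetical helper name, and the assumption is simply that the standard DRM device naming (renderD128 and so on) applies.

```python
from pathlib import Path


def render_nodes(dri_dir: Path = Path("/dev/dri")) -> list[str]:
    """Return the names of DRM render nodes (renderD*) found in dri_dir."""
    if not dri_dir.is_dir():
        # No /dev/dri at all usually means no GPU driver bound in the guest.
        return []
    return sorted(p.name for p in dri_dir.glob("renderD*"))


if __name__ == "__main__":
    nodes = render_nodes()
    print("render nodes:", nodes if nodes else "none found")
```

An empty result would suggest the passed-through iGPU was not picked up by i915 in the guest.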

Built and installed these three repos, but they might not be necessary:

Another interesting thread:

https://github.com/intel/media-driver/issues/1371

-----------------------------------------------------------------------------------------------------

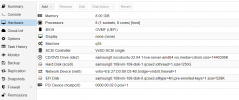

After doing that, I transferred my plexmediaserver directory from the old Plex machine to the new one, enabled hardware encoding in the settings, and ran multiple streams for an extended period of time. No crashes, kernel panics, or other instability, just very little CPU usage and minimal power consumption:

View attachment 44914

And one transcode on my phone at the same time (the weird clouds at the bottom are the intro to a movie in the PIP transcode), also showing the server's total power consumption (it runs more than just Plex):

View attachment 44915

Edit: Updated guest OS kernel and version.