Dear fellows,

This is my very first Proxmox problem.

I upgraded from PVE 6.4 to 7.1 which was all milk and honey and ran fine without any trouble.

But since the upgrade, I cannot back up one specific LXC container in suspend mode; only stop mode works.

The error shown is as follows:

INFO: including mount point rootfs ('/') in backup
INFO: excluding bind mount point mp0 ('/opt/cloud-data') from backup (not a volume)
INFO: mode failure - some volumes do not support snapshots
INFO: trying 'suspend' mode instead
INFO: backup mode: suspend
INFO: ionice priority: 7
INFO: CT Name: nextcloud-vfs
INFO: including mount point rootfs ('/') in backup
INFO: excluding bind mount point mp0 ('/opt/cloud-data') from backup (not a volume)
INFO: starting first sync /proc/2403890/root/ to /var/tmp/vzdumptmp3095719_108
INFO: first sync finished - transferred 19.67G bytes in 215s
INFO: suspending guest
INFO: starting final sync /proc/2403890/root/ to /var/tmp/vzdumptmp3095719_108
INFO: resume vm
INFO: guest is online again after 17 seconds
ERROR: Backup of VM 108 failed - command 'rsync --stats -h -X -A --numeric-ids -aH --delete --no-whole-file --inplace --one-file-system --relative '--exclude=/opt/cloud-data' '--exclude=/tmp/?*' '--exclude=/var/tmp/?*' '--exclude=/var/run/?*.pid' '--exclude=/opt/cloud-data' /proc/2403890/root//./ /var/tmp/vzdumptmp3095719_108' failed: exit code 23

What really drives me crazy is that this exact rsync command completes successfully, without any errors, when I run it by hand on the command line. In addition, both the first and the final sync complete before the error occurs.
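
When re-running the command by hand, it may be worth capturing stderr and the exit status explicitly instead of relying on what scrolls past in the terminal, since rsync prints the per-file reasons behind a code 23 on stderr. This is only a sketch: the helper names `describe_rsync_exit` and `run_rsync` are made up, and the table lists just a few exit codes as documented in the rsync(1) man page (23 = partial transfer due to error, 24 = partial transfer due to vanished source files).

```python
import subprocess
import sys

# Small subset of the exit values documented in the rsync(1) man page.
RSYNC_EXIT_CODES = {
    0: "success",
    1: "syntax or usage error",
    23: "partial transfer due to error",
    24: "partial transfer due to vanished source files",
}


def describe_rsync_exit(code: int) -> str:
    """Return a human-readable meaning for an rsync exit code."""
    return RSYNC_EXIT_CODES.get(code, f"unknown/unlisted exit code {code}")


def run_rsync(args: list[str]) -> int:
    """Run rsync with the given arguments and report stderr plus the exit status.

    Hypothetical helper: stderr is printed line by line so the per-file
    errors are not buried in the --stats summary on stdout.
    """
    result = subprocess.run(["rsync", *args], capture_output=True, text=True)
    for line in result.stderr.splitlines():
        print(f"stderr: {line}", file=sys.stderr)
    print(f"exit code {result.returncode}: {describe_rsync_exit(result.returncode)}")
    return result.returncode
```

It would be called as root on the PVE host with the exact argument list from the error message, passed as a list of strings without the leading `rsync`.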

Every other LXC container with a similar configuration, apart from the mp0: and idmap entries, backs up perfectly.

The following is the output of pct config <machine id>:

arch: amd64
cores: 16
hostname: nextcloud-vfs
memory: 65536
mp0: /rpool/data/vm-files/cloud-data,mp=/opt/cloud-data
nameserver: 1.1.1.1
net0: name=eth0,bridge=vmbr1,firewall=1,gw=192.168.56.1,gw6=fe80::2:4fff:fe7b:f1a1,hwaddr=4E:BD:69:A5:9D:BD,ip=192.168.56.48/24,ip6=fd16:c367:ccbe:8::48/64,type=veth
onboot: 1
ostype: debian
protection: 1
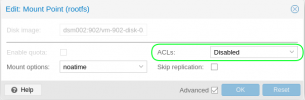
rootfs: Disk-Images-OS:108/vm-108-disk-0.raw,size=64G
searchdomain: DMZ.lan
startup: order=6,up=120,down=600
swap: 131072
unprivileged: 1
lxc.mount.entry: /dev/random dev/random none bind,ro,create=file 0 0
lxc.mount.entry: /dev/urandom dev/urandom none bind,ro,create=file 0 0
lxc.mount.entry: /dev/random var/spool/postfix/dev/random none bind,ro,create=file 0 0
lxc.mount.entry: /dev/urandom var/spool/postfix/dev/urandom none bind,ro,create=file 0 0
lxc.idmap: u 0 100000 1000
lxc.idmap: g 0 100000 1000
lxc.idmap: u 1000 1000 1
lxc.idmap: g 1000 1000 1
lxc.idmap: u 1001 101001 64535
lxc.idmap: g 1001 101001 64535
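
For reference, each `lxc.idmap` line reads `<u|g> <first container id> <first host id> <count>`, so the six entries above map container IDs 0-999 to host IDs 100000-100999, pass container ID 1000 straight through to host ID 1000, and map 1001-65535 to 101001-165535. The following minimal Python sketch checks that arithmetic; the `IDMAP` list and the `map_id` and `covers_full_range` helpers are hypothetical, written only to illustrate how the mapping resolves.

```python
# Hypothetical table: (kind, container_start, host_start, count), copied from
# the lxc.idmap lines of the container config above.
IDMAP = [
    ("u", 0, 100000, 1000),
    ("g", 0, 100000, 1000),
    ("u", 1000, 1000, 1),
    ("g", 1000, 1000, 1),
    ("u", 1001, 101001, 64535),
    ("g", 1001, 101001, 64535),
]


def map_id(kind: str, container_id: int) -> int:
    """Translate a container uid ('u') or gid ('g') to the corresponding host id."""
    for k, c_start, h_start, count in IDMAP:
        if k == kind and c_start <= container_id < c_start + count:
            return h_start + (container_id - c_start)
    raise ValueError(f"{kind}id {container_id} is not mapped")


def covers_full_range(kind: str, upper: int = 65536) -> bool:
    """Check that container ids 0..upper-1 are mapped with no gaps or overlaps."""
    ranges = sorted((c, c + n) for k, c, _, n in IDMAP if k == kind)
    expected = 0
    for start, end in ranges:
        if start != expected:  # a gap or an overlap with the previous range
            return False
        expected = end
    return expected == upper
```

For example, `map_id("u", 1000)` returns 1000 and `map_id("u", 0)` returns 100000, which is the one place where this container differs from a default unprivileged container.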

Thanks in advance for your kind help and a Merry Christmas to you all!

Kind regards,

christian
