@RoCE-geek, if I understand correctly, your investigation focused on the number and cause of the VM exits and the influence of the Hyper-V enlightenments on these VM exits -- and thank you for that, I see that Fiona already opened a feature request for reevaluating the Hyper-V enlightenments. However, I'm not sure whether your hosts are also affected by the increased CPU usage issue summarized above (you initially mentioned it's hard to determine whether you're affected or not [4]) -- could you please clarify? I'm asking because it looks like you performed most tests on AMD machines, and it would be interesting to know whether they also see the same CPU usage issues, or whether only Intel CPUs are affected so far, see above.

Hi

@fweber, thanks a lot for your interest here!

I completely understand that the whole situation around these (more or less similar) symptoms is confusing, even for me and for many others.

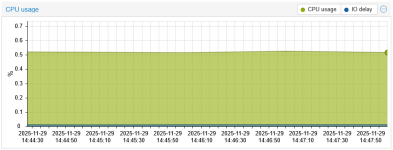

Disclaimer: all our systems are tuned for maximum BIOS/HOST/GUEST performance, so no dynamic performance/power control is active.

So, my main points are:

- regardless of the HW architecture, since Win11-24H2 and WS2025 the virtualization overhead has (at least) doubled, in the form of much more frequent VM-EXITS

- this is highly critical, because virtualization is all about efficiency, maximum density, and optimal performance (and the corresponding ROI, TCO, etc.)

- I'm also limited to the "increased idle load only" issue, as using generic CPU models (like x86-64-v3, EPYC-Milan-v2, etc.) is a proven way to prevent the VBS/HVCI problems

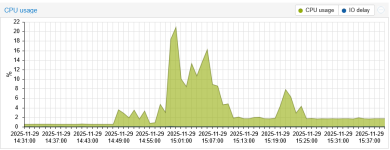

- this increased VM-EXITS problem is not just about "decreased performance" and "decreased VM density"; it also (almost) completely blocks C3 states, which in effect blocks Turbo Boost activation. To be clear, for now I'm not focused on C3/TB, just on the basic increase in host idle load.

- my hit-or-miss research was focused on the "root cause", which AFAIK lies in specific MSR/MSR_WRITE events, especially the HVE synthetic timers

- up to Win11-23H2/WS2022 there was no such abuse, as others have demonstrated only an acceptable idle VM-EXITS rate (acceptable for Win VMs)

- I demonstrated that this phenomenon of increased idle load is the same on Intel and AMD, so it's general Windows behavior, platform-independent

- the key difference is probably the final impact on each platform, i.e. how these increased VM-EXITS translate into the "bad behavior"

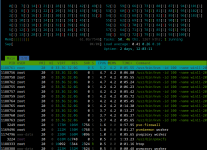

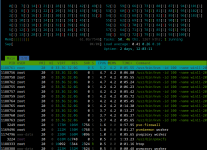
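To check which MSRs are actually behind the MSR_WRITE exits, one possible approach (an assumption here, not necessarily how the numbers in this post were collected) is to record the `kvm:kvm_msr` tracepoint on the host, e.g. `perf record -e kvm:kvm_msr -a -- sleep 10` followed by `perf script`, and count writes per MSR index. The sketch below assumes each event's text contains `msr_write <hex index> = 0x<hex value>`; the exact line layout may differ between kernel and perf versions. It labels the Hyper-V synthetic timer MSRs (0x400000B0 to 0x400000B7 per the Hyper-V TLFS). The sample lines are made up for illustration and are not measured data.

```python
import re
from collections import Counter

# Hyper-V synthetic timer MSRs (Hyper-V TLFS): STIMERn_CONFIG = 0x400000B0 + 2n,
# STIMERn_COUNT = 0x400000B1 + 2n, for n = 0..3.
STIMER_MSRS = {
    0x400000B0 + 2 * n + off: f"STIMER{n}_{'CONFIG' if off == 0 else 'COUNT'}"
    for n in range(4)
    for off in (0, 1)
}

# ASSUMED text of a kvm:kvm_msr event: "msr_write <hex index> = 0x<hex value>".
MSR_RE = re.compile(r"msr_(read|write) ([0-9a-fA-F]+) = 0x([0-9a-fA-F]+)")


def count_msr_writes(lines):
    """Count guest MSR writes per MSR (synthetic timers by name, others by hex index)."""
    counts = Counter()
    for line in lines:
        m = MSR_RE.search(line)
        if m is None or m.group(1) != "write":
            continue  # not an MSR event, or an MSR read
        index = int(m.group(2), 16)
        counts[STIMER_MSRS.get(index, hex(index))] += 1
    return counts


def stimer_share(counts):
    """Fraction of all counted MSR writes that targeted a synthetic timer MSR."""
    total = sum(counts.values())
    stimer = sum(v for name, v in counts.items() if name.startswith("STIMER"))
    return stimer / total if total else 0.0


# Made-up example lines (NOT measured data), loosely shaped like `perf script` output.
SAMPLE_LINES = [
    "CPU 3/KVM 1234 [003] 100.000001: kvm:kvm_msr: msr_write 400000b0 = 0x3000f",
    "CPU 3/KVM 1234 [003] 100.000002: kvm:kvm_msr: msr_write 400000b1 = 0x9c40",
    "CPU 5/KVM 1236 [005] 100.000010: kvm:kvm_msr: msr_write 6e0 = 0x1234",  # IA32_TSC_DEADLINE
    "CPU 5/KVM 1236 [005] 100.000011: kvm:kvm_msr: msr_read 400000b0 = 0x3000f",
]

if __name__ == "__main__":
    counts = count_msr_writes(SAMPLE_LINES)
    for name, n in counts.most_common():
        print(f"{name}: {n}")
    print(f"synthetic timer share: {stimer_share(counts):.0%}")
```

Reads are skipped on purpose, since the post is about MSR_WRITE exits; on a real trace, a high synthetic timer share would support the HVE timer theory, while a low one would point elsewhere.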

Let me start with a simple demonstration. As shown in my previous report, this is how an 8 vCPU WS2025 VM behaves on the 8C/16T Xeon:

```
VM-EXIT             Samples  Samples%   Time%  Min Time    Max Time   Avg time
MSR_WRITE              1673    66.63%  27.71%    0.76us  79588.30us   402.91us ( +- 23.29% )
HLT                     778    30.98%  70.69%   35.60us  73612.25us  2210.59us ( +- 6.97% )
EXTERNAL_INTERRUPT       42     1.67%   0.02%    2.17us     24.89us    10.26us ( +- 8.83% )
EPT_MISCONFIG            13     0.52%   1.58%    4.44us  29025.62us  2957.39us ( +- 77.36% )
PAUSE_INSTRUCTION         5     0.20%   0.00%    0.90us      5.79us     2.22us ( +- 41.42% )

Total Samples:2511, Total events handled time:2432802.46us.
```

Now please focus on ALMOST the same setup, except that I increased this VM to 16 vCPUs, so there's a 1:1 binding of vCPUs to "HT CPUs" (logical CPUs):

```
VM-EXIT             Samples  Samples%   Time%  Min Time    Max Time   Avg time
MSR_WRITE              5287    66.46%  10.79%    0.72us  57574.01us   136.29us ( +- 18.70% )
HLT                    2499    31.41%  88.92%   80.25us  60730.51us  2376.21us ( +- 3.20% )
EXTERNAL_INTERRUPT      124     1.56%   0.02%    1.18us    123.37us    11.78us ( +- 8.53% )
EPT_VIOLATION            43     0.54%   0.15%    0.63us   9819.67us   230.65us ( +- 98.98% )
IO_INSTRUCTION            2     0.03%   0.12%   26.28us   8268.61us  4147.44us ( +- 99.37% )

Total Samples:7955, Total events handled time:6678366.11us.
```

As you can see, there's a big increase, and it's really extreme overhead/EXIT rate, all coming from just one idle Windows VM (with 1:1 logical CPU binding).
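The increase can be quantified per exit reason by comparing the two `perf kvm stat report` outputs above. The sketch below parses the rows (copied from the post) and divides the sample counts. It assumes both recordings covered the same wall-clock duration, which the post doesn't state; if the durations differed, the counts would first have to be normalized to exits per second.

```python
# Rows copied from the two `perf kvm stat report` outputs above.
RUN_8_VCPU = """
VM-EXIT Samples Samples% Time% Min Time Max Time Avg time
MSR_WRITE 1673 66.63% 27.71% 0.76us 79588.30us 402.91us ( +- 23.29% )
HLT 778 30.98% 70.69% 35.60us 73612.25us 2210.59us ( +- 6.97% )
EXTERNAL_INTERRUPT 42 1.67% 0.02% 2.17us 24.89us 10.26us ( +- 8.83% )
EPT_MISCONFIG 13 0.52% 1.58% 4.44us 29025.62us 2957.39us ( +- 77.36% )
PAUSE_INSTRUCTION 5 0.20% 0.00% 0.90us 5.79us 2.22us ( +- 41.42% )
"""

RUN_16_VCPU = """
VM-EXIT Samples Samples% Time% Min Time Max Time Avg time
MSR_WRITE 5287 66.46% 10.79% 0.72us 57574.01us 136.29us ( +- 18.70% )
HLT 2499 31.41% 88.92% 80.25us 60730.51us 2376.21us ( +- 3.20% )
EXTERNAL_INTERRUPT 124 1.56% 0.02% 1.18us 123.37us 11.78us ( +- 8.53% )
EPT_VIOLATION 43 0.54% 0.15% 0.63us 9819.67us 230.65us ( +- 98.98% )
IO_INSTRUCTION 2 0.03% 0.12% 26.28us 8268.61us 4147.44us ( +- 99.37% )
"""


def parse_kvm_stat(text):
    """Return {exit_reason: sample_count} from `perf kvm stat report` rows."""
    samples = {}
    for line in text.splitlines():
        parts = line.split()
        # Data rows: upper-case exit reason followed by an integer sample count.
        # This skips blank lines and the "VM-EXIT Samples ..." header.
        if len(parts) >= 2 and parts[0].isupper() and parts[1].isdigit():
            samples[parts[0]] = int(parts[1])
    return samples


def growth(before, after):
    """Ratio after/before for the exit reasons present in both runs."""
    return {k: after[k] / before[k] for k in sorted(before.keys() & after.keys())}


if __name__ == "__main__":
    before = parse_kvm_stat(RUN_8_VCPU)
    after = parse_kvm_stat(RUN_16_VCPU)
    print("total samples:", sum(before.values()), "->", sum(after.values()))
    for reason, ratio in growth(before, after).items():
        print(f"{reason}: x{ratio:.2f}")
```

Summing the parsed rows reproduces the "Total Samples" lines (2511 and 7955), which is a quick sanity check that no row was dropped.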

And the result?

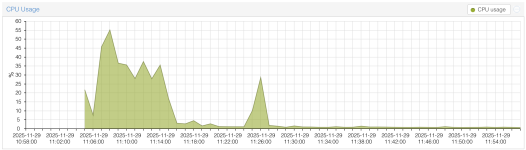

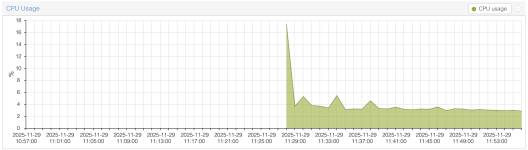

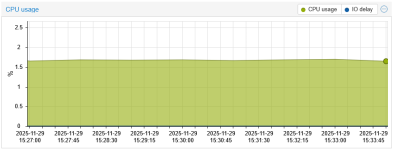

So we have almost 4% host idle load, caused by just one dumb/dummy idle Windows VM, without any "over-provisioning", although at idle there should be almost "no increase".

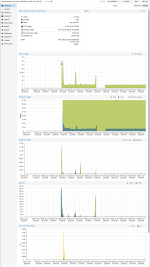

And this is what happens when we clone this VM, so we have 2x 16 vCPUs on the 8C/16T Xeon:

```
VM-EXIT             Samples  Samples%   Time%  Min Time    Max Time   Avg time
MSR_WRITE             12629    68.53%  15.99%    0.65us  35876.31us   310.33us ( +- 5.85% )
HLT                    5377    29.18%  83.84%    0.77us  36201.30us  3821.93us ( +- 1.61% )
EXTERNAL_INTERRUPT      266     1.44%   0.03%    1.03us   3789.18us    29.92us ( +- 53.97% )
EPT_VIOLATION           122     0.66%   0.04%    0.73us   7968.85us    85.81us ( +- 78.95% )
EPT_MISCONFIG            17     0.09%   0.01%   14.64us   1778.29us   127.11us ( +- 81.22% )
IO_INSTRUCTION           13     0.07%   0.06%   10.11us   9798.40us  1073.66us ( +- 73.39% )
PAUSE_INSTRUCTION         3     0.02%   0.03%    0.91us   7021.83us  2342.01us ( +- 99.91% )
VMCALL                    1     0.01%   0.00%    9.45us      9.45us     9.45us ( +- 0.00% )

Total Samples:18428, Total events handled time:24511276.02us.
```

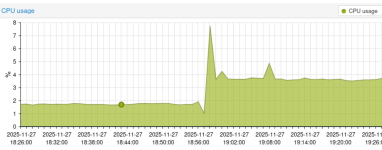

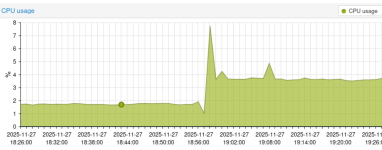

Host CPU load values go from ~1.9% (1x 8 vCPU) to ~3.6% (1x 16 vCPU) to ~5.1% (2x 16 vCPU), all for "idle" Windows VMs.
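As a back-of-the-envelope normalization, the three readings can be divided by the total number of idle vCPUs, and the step between runs by the number of added vCPUs. This sketch only rearranges the approximate (~) values reported above; it doesn't model anything, and with readings this rough the second decimal place should not be trusted.

```python
# Idle host CPU load as reported above: (label, total idle vCPUs, host load in %).
RUNS = [
    ("1x 8 vCPU", 8, 1.9),
    ("1x 16 vCPU", 16, 3.6),
    ("2x 16 vCPU", 32, 5.1),
]


def per_vcpu_load(runs):
    """Host load (%) per idle vCPU for each run."""
    return {label: load / vcpus for label, vcpus, load in runs}


def step_increase(runs):
    """Extra host load (%) per added vCPU between consecutive runs."""
    steps = {}
    for (l0, v0, p0), (l1, v1, p1) in zip(runs, runs[1:]):
        steps[f"{l0} -> {l1}"] = (p1 - p0) / (v1 - v0)
    return steps


if __name__ == "__main__":
    for label, value in per_vcpu_load(RUNS).items():
        print(f"{label}: {value:.3f}% host load per idle vCPU")
    for step, value in step_increase(RUNS).items():
        print(f"{step}: +{value:.3f}% per added vCPU")
```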

But look, this is definitely not minor: host CPU load doesn't scale linearly up to 100%, because of the "first 50%" rule, i.e. only the first ~50% maps onto the physical cores (and the same goes for latency, etc.).

So even a few idle Win11-24H2/WS2025 VMs can create significant load, limiting the total VM density and the free CPU power available for real application workloads.
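The "first 50%" rule can be turned into a rough conversion from total host load into a share of the physical-core budget. This is a simplification under stated assumptions: an SMT host with 2 threads per core (like the 8C/16T Xeon above), where roughly the first 50% of total logical-CPU load already occupies all physical cores; it ignores frequency, Turbo Boost and latency effects. The function name and the `threads_per_core` parameter are hypothetical, introduced only for this illustration.

```python
def physical_core_share(host_load_pct, threads_per_core=2):
    """Convert total host CPU load (% of all logical CPUs) into a share (%) of the
    physical-core budget, ASSUMING that the first 100/threads_per_core percent
    of total load already saturates the physical cores (the '50% rule' for SMT-2)."""
    budget_pct = 100.0 / threads_per_core
    return host_load_pct / budget_pct * 100.0


if __name__ == "__main__":
    # Idle host load readings reported above, in % of total logical CPU capacity.
    for label, load in [("1x 8 vCPU", 1.9), ("1x 16 vCPU", 3.6), ("2x 16 vCPU", 5.1)]:
        share = physical_core_share(load)
        print(f"{label}: {load}% total -> ~{share:.1f}% of the physical-core budget")
```

Under this assumption, a reading that looks small as a share of all logical CPUs counts roughly double against the physical cores.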

What probably matters (in terms of the final increased idle load and other effects):

- Platform power management (BIOS)

- Host power management (OS)

- CPU base clock and max clock

- vCPU to CPU core ratio

- CPU generation

And back to your main question: at the beginning, I wasn't sure whether these increased Win-VM-EXITS are common to both AMD and Intel, or whether something is specifically bound to the platform itself. But the increased synthetic timer buzz is omnipresent, as it's simply crippled in the new Windows kernel itself. I still cannot imagine the initial bright idea behind such a virtualization-crippling "optimization".

I've also been limited to the "visible" idle CPU load increase only, and as I have no completely free AMD hosts, my experiments there are still not conclusive, i.e. not easily quantifiable. Another reason is the much higher total raw performance of those AMD hosts (compared to some non-production Intel hosts), which is quite a limiting factor for testing (in terms of reproducibility).

As a preliminary (and very limited) extrapolation, I see something like a 20-50% potential decrease in VM density if this bad behavior is not eliminated.

And as always, tests are just tests. Until we have more production WS2025 VMs, the final impact will not be known. But this is a "loop" problem, as after my digging here I'm now going to "pause" all new WS2025 deployments, since even a decrease in VM density as low as 20% would have a huge production impact.

Host CPU: Intel(R) Xeon(R) Gold 6244 CPU @ 3.60GHz (1 Socket)

PVE: 8.4.0 (running kernel: 6.8.12-17-pve)

VM config:

```
agent: 1
bios: ovmf
cores: 16
cpu: x86-64-v3
machine: pc-q35-8.2
memory: 16384
meta: creation-qemu=9.2.0
numa: 0
ostype: win11
scsi0: XXX:vm-XXX-disk-1,iothread=1,size=60G,ssd=1
scsihw: virtio-scsi-single
sockets: 1
```