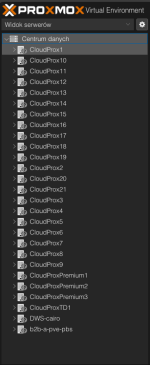

We run a cluster of 25 nodes, and all machines are backed up to PBS. The Proxmox nodes hosting our VMs and LXC containers were last updated to the latest version on 24 November. On the morning of 3 December, a problem appeared on one node: its CPU load rose to 100%, and the LXC containers running on it became unusable. The system log showed CPU throttling ("sched: RT throttling activated"), preceded by critical messages about communication with the other nodes. In addition, we could not log in to the cluster GUI on any of the nodes. We restarted the affected node at 09:13:37, after which the same throttling problem appeared on another node. We had to restart the entire cluster to resolve it.

I saw an unusually high load average before the incident, higher than at any other point in this server's history.

System:

Debian 12

Linux 6.8.12-4-pve (2024-11-06T15:04Z)

pve-manager/8.3.0/c1689ccb1065a83b

Package versions:

libpve-rs-perl/stable 0.9.0

proxmox-backup-client/stable 3.2.9-1

proxmox-backup-file-restore/stable 3.2.9-1

proxmox-widget-toolkit/stable 4.3.1

pve-i18n/stable 3.3.1

Syslog (when the problem appeared):

2024-12-03T09:02:59.927819+00:00 CloudProx5 pmxcfs[1408]: [status] crit: cpg_send_message failed: 6

2024-12-03T09:03:00.894253+00:00 CloudProx5 pmxcfs[1408]: [dcdb] notice: cpg_send_message retry 90

2024-12-03T09:03:00.931447+00:00 CloudProx5 pmxcfs[1408]: [status] notice: cpg_send_message retry 10

2024-12-03T09:03:01.895661+00:00 CloudProx5 pmxcfs[1408]: [dcdb] notice: cpg_send_message retry 100

2024-12-03T09:03:01.895882+00:00 CloudProx5 pmxcfs[1408]: [dcdb] notice: cpg_send_message retried 100 times

2024-12-03T09:03:01.895980+00:00 CloudProx5 pmxcfs[1408]: [dcdb] crit: failed to send SYNC_START message

2024-12-03T09:03:01.896043+00:00 CloudProx5 pmxcfs[1408]: [dcdb] crit: leaving CPG group

2024-12-03T09:03:01.932802+00:00 CloudProx5 pmxcfs[1408]: [status] notice: cpg_send_message retry 20

2024-12-03T09:03:02.933752+00:00 CloudProx5 pmxcfs[1408]: [status] notice: cpg_send_message retry 30

2024-12-03T09:03:03.676600+00:00 CloudProx5 kernel: [ 1702.060476] sched: RT throttling activated

2024-12-03T09:03:03.934744+00:00 CloudProx5 pmxcfs[1408]: [status] notice: cpg_send_message retry 40

2024-12-03T09:03:04.935681+00:00 CloudProx5 pmxcfs[1408]: [status] notice: cpg_send_message retry 50

2024-12-03T09:03:05.936649+00:00 CloudProx5 pmxcfs[1408]: [status] notice: cpg_send_message retry 60

2024-12-03T09:03:06.937594+00:00 CloudProx5 pmxcfs[1408]: [status] notice: cpg_send_message retry 70

2024-12-03T09:03:07.938539+00:00 CloudProx5 pmxcfs[1408]: [status] notice: cpg_send_message retry 80

2024-12-03T09:03:08.939442+00:00 CloudProx5 pmxcfs[1408]: [status] notice: cpg_send_message retry 90

2024-12-03T09:03:09.940523+00:00 CloudProx5 pmxcfs[1408]: [status] notice: cpg_send_message retry 100

2024-12-03T09:03:09.940632+00:00 CloudProx5 pmxcfs[1408]: [status] notice: cpg_send_message retried 100 times
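In the excerpt above, both the [dcdb] and [status] queues retry cpg_send_message roughly ten times per second (judging by the timestamps) until they give up after 100 attempts; [dcdb] then fails to send SYNC_START and leaves the CPG group. Error code 6 in the first line is, as far as we know, corosync's CS_ERR_TRY_AGAIN. To check the other nodes' logs for the same pattern, here is a minimal Python sketch that groups pmxcfs messages by subsystem. It assumes the RFC 3339 syslog line format shown in this excerpt; `summarize_pmxcfs` is a hypothetical helper of ours, not part of any Proxmox tool.

```python
import re
from collections import defaultdict
from datetime import datetime

# Hypothetical helper (not Proxmox tooling). Matches pmxcfs lines in the excerpt's format, e.g.
# 2024-12-03T09:03:01.896043+00:00 CloudProx5 pmxcfs[1408]: [dcdb] crit: leaving CPG group
LINE_RE = re.compile(
    r"^(?P<ts>\S+) (?P<host>\S+) pmxcfs\[\d+\]: "
    r"\[(?P<subsys>\w+)\] (?P<level>\w+): (?P<msg>.*)$"
)
# Matches "cpg_send_message retry 40"; the final "retried 100 times" line is not matched
RETRY_RE = re.compile(r"cpg_send_message retry (\d+)$")


def summarize_pmxcfs(lines):
    """Collect retry counters and crit messages per pmxcfs subsystem."""
    summary = defaultdict(lambda: {"retries": [], "crit": []})
    for line in lines:
        m = LINE_RE.match(line.strip())
        if m is None:
            continue  # skip non-pmxcfs lines such as kernel messages
        ts = datetime.fromisoformat(m["ts"])
        entry = summary[m["subsys"]]
        retry = RETRY_RE.search(m["msg"])
        if retry:
            entry["retries"].append((ts, int(retry.group(1))))
        if m["level"] == "crit":
            entry["crit"].append((ts, m["msg"]))
    return dict(summary)


sample = """\
2024-12-03T09:02:59.927819+00:00 CloudProx5 pmxcfs[1408]: [status] crit: cpg_send_message failed: 6
2024-12-03T09:03:00.931447+00:00 CloudProx5 pmxcfs[1408]: [status] notice: cpg_send_message retry 10
2024-12-03T09:03:01.895980+00:00 CloudProx5 pmxcfs[1408]: [dcdb] crit: failed to send SYNC_START message
2024-12-03T09:03:03.676600+00:00 CloudProx5 kernel: [ 1702.060476] sched: RT throttling activated
2024-12-03T09:03:09.940523+00:00 CloudProx5 pmxcfs[1408]: [status] notice: cpg_send_message retry 100
""".splitlines()

for subsys, info in summarize_pmxcfs(sample).items():
    if info["retries"]:
        (t0, first), (t1, last) = info["retries"][0], info["retries"][-1]
        span = (t1 - t0).total_seconds()
        print(f"[{subsys}] retry {first} -> {last} in {span:.1f}s")
    for ts, msg in info["crit"]:
        print(f"[{subsys}] crit at {ts.time()}: {msg}")
```

To scan a whole node, an open file handle for the syslog file can be passed instead of `sample`; the exact log path on each node is an assumption here.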

/var/log/apt/history.log.1.gz:

Start-Date: 2024-11-21 13:14:22

Commandline: apt dist-upgrade -y

Install: proxmox-kernel-6.8.12-4-pve-signed:amd64 (6.8.12-4, automatic), libpve-network-api-perl:amd64 (0.10.0, automatic)

Upgrade: pve-docs:amd64 (8.2.3, 8.2.5), proxmox-widget-toolkit:amd64 (4.2.3, 4.3.1), libpve-rs-perl:amd64 (0.8.10, 0.9.0), pve-firmware:amd64 (3.13-2, 3.14-1), pve-qemu-kvm:amd64 (9.0.2-3, 9.0.2-4), libjs-extjs:amd64 (7.0.0-4, 7.0.0-5), proxmox-mail-forward:amd64 (0.2.3, 0.3.1), libpve-cluster-api-perl:amd64 (8.0.7, 8.0.10), pve-ha-manager:amd64 (4.0.5, 4.0.6), libpve-storage-perl:amd64 (8.2.5, 8.2.9), libpve-guest-common-perl:amd64 (5.1.4, 5.1.6), proxmox-kernel-6.8:amd64 (6.8.12-2, 6.8.12-4), pve-cluster:amd64 (8.0.7, 8.0.10), novnc-pve:amd64 (1.4.0-4, 1.5.0-1), proxmox-backup-file-restore:amd64 (3.2.7-1, 3.2.9-1), ifupdown2:amd64 (3.2.0-1+pmx9, 3.2.0-1+pmx11), qemu-server:amd64 (8.2.4, 8.2.7), libpve-access-control:amd64 (8.1.4, 8.2.0), pve-container:amd64 (5.2.0, 5.2.2), pve-i18n:amd64 (3.2.3, 3.3.0), proxmox-archive-keyring:amd64 (3.0, 3.1), proxmox-backup-client:amd64 (3.2.7-1, 3.2.9-1), libpve-http-server-perl:amd64 (5.1.1, 5.1.2), proxmox-firewall:amd64 (0.5.0, 0.6.0), pve-manager:amd64 (8.2.7, 8.2.10), libpve-common-perl:amd64 (8.2.3, 8.2.9), libpve-network-perl:amd64 (0.9.8, 0.10.0), libpve-notify-perl:amd64 (8.0.7, 8.0.10), pve-firewall:amd64 (5.0.7, 5.1.0), libpve-cluster-perl:amd64 (8.0.7, 8.0.10)

End-Date: 2024-11-21 13:17:11

Start-Date: 2024-11-22 06:54:02

Commandline: /usr/bin/unattended-upgrade

Remove: proxmox-kernel-6.8.12-1-pve-signed:amd64 (6.8.12-1)

End-Date: 2024-11-22 06:54:23

Start-Date: 2024-11-24 12:00:35

Commandline: apt dist-upgrade -y

Upgrade: pve-docs:amd64 (8.2.5, 8.3.1), proxmox-ve:amd64 (8.2.0, 8.3.0), qemu-server:amd64 (8.2.7, 8.3.0), pve-i18n:amd64 (3.3.0, 3.3.1), pve-manager:amd64 (8.2.10, 8.3.0)

End-Date: 2024-11-24 12:01:05

Start-Date: 2024-11-24 12:02:11

Commandline: apt autoremove

Remove: libperl5.32:amd64 (5.32.1-4+deb11u3), libvpx6:amd64 (1.9.0-1+deb11u3), libcodec2-0.9:amd64 (0.9.2-4), g++-10:amd64 (10.2.1-6), libidn11:amd64 (1.33-3), libleveldb1d:amd64 (1.23-4), libx264-160:amd64 (2:0.160.3011+gitcde9a93-2.1), libmpdec3:amd64 (2.5.1-1), libaom0:amd64 (1.0.0.errata1-3+deb11u1), libx265-192:amd64 (3.4-2), libfftw3-double3:amd64 (3.3.10-1), libdav1d4:amd64 (0.7.1-3+deb11u1), libtiff5:amd64 (4.2.0-1+deb11u5), libsigsegv2:amd64 (2.14-1), libllvm11:amd64 (1:11.0.1-2), libflac8:amd64 (1.3.3-2+deb11u2), libbpf0:amd64 (1:0.3-2), libldap-2.4-2:amd64 (2.4.57+dfsg-3+deb11u1), libpostproc55:amd64 (7:4.3.7-0+deb11u1), libisc-export1105:amd64 (1:9.11.19+dfsg-2.1), libpython3.9-stdlib:amd64 (3.9.2-1), libavcodec58:amd64 (7:4.3.7-0+deb11u1), libcbor0:amd64 (0.5.0+dfsg-2), libboost-coroutine1.74.0:amd64 (1.74.0+ds1-21), net-tools:amd64 (2.10-0.1), libpython3.9:amd64 (3.9.2-1), liburing1:amd64 (0.7-3), libavutil56:amd64 (7:4.3.7-0+deb11u1), libwebp6:amd64 (0.6.1-2.1+deb11u2), libswscale5:amd64 (7:4.3.7-0+deb11u1), libprocps8:amd64 (2:3.3.17-5), guile-2.2-libs:amd64 (2.2.7+1-9), libdns-export1110:amd64 (1:9.11.19+dfsg-2.1), libswresample3:amd64 (7:4.3.7-0+deb11u1), libprotobuf23:amd64 (3.12.4-1+deb11u1), libsrt1.4-gnutls:amd64 (1.4.2-1.3), libavformat58:amd64 (7:4.3.7-0+deb11u1), perl-modules-5.32:amd64 (5.32.1-4+deb11u3), libpython3.9-minimal:amd64 (3.9.2-1), libigdgmm11:amd64 (20.4.1+ds1-1), python3.9:amd64 (3.9.2-1), libstdc++-10-dev:amd64 (10.2.1-6), libicu67:amd64 (67.1-7), liburcu6:amd64 (0.12.2-1), python3.9-minimal:amd64 (3.9.2-1), libavfilter7:amd64 (7:4.3.7-0+deb11u1)

End-Date: 2024-11-24 12:02:40

Start-Date: 2024-11-25 06:54:48

Commandline: /usr/bin/unattended-upgrade

Upgrade: linux-libc-dev:amd64 (6.1.115-1, 6.1.119-1)

End-Date: 2024-11-25 06:54:52
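The incident followed the 21 and 24 November upgrades, which brought in kernel 6.8.12-4, pve-cluster 8.0.10 and pve-manager 8.3.0. To compare exactly which packages changed on each node, a minimal Python sketch like the one below can list the Upgrade entries from an apt history file. It assumes the standard apt history layout shown above (rotated copies are gzip-compressed); `read_history` and `parse_upgrades` are hypothetical helper names, not part of apt.

```python
import gzip
import re

# Hypothetical helpers (not part of apt).
# One package entry on an Upgrade line: "name:arch (old-version, new-version)"
ENTRY_RE = re.compile(r"([^\s,(]+):(\w+) \(([^)]*)\)")


def read_history(path="/var/log/apt/history.log.1.gz"):
    """Read a rotated (gzip-compressed) apt history file as text."""
    with gzip.open(path, "rt", encoding="utf-8") as fh:
        return fh.read()


def parse_upgrades(text):
    """Return (start_date, package, old, new) for every Upgrade entry."""
    rows = []
    start = None
    for line in text.splitlines():
        if line.startswith("Start-Date:"):
            start = line.split(":", 1)[1].strip()
        elif line.startswith("Upgrade:"):
            for name, _arch, versions in ENTRY_RE.findall(line):
                old, new = (v.strip() for v in versions.split(",", 1))
                rows.append((start, name, old, new))
    return rows


sample = """\
Start-Date: 2024-11-24 12:00:35
Commandline: apt dist-upgrade -y
Upgrade: pve-docs:amd64 (8.2.5, 8.3.1), proxmox-ve:amd64 (8.2.0, 8.3.0), qemu-server:amd64 (8.2.7, 8.3.0), pve-i18n:amd64 (3.3.0, 3.3.1), pve-manager:amd64 (8.2.10, 8.3.0)
End-Date: 2024-11-24 12:01:05
"""

for start, name, old, new in parse_upgrades(sample):
    print(f"{start}  {name}: {old} -> {new}")
```

On a node, `parse_upgrades(read_history())` would list the upgrades from the rotated log; the current, uncompressed history.log can be read with a plain `open()` instead.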

Journalctl log from corosync is attached as a file.

After the incident, we updated PVE to the newest version:

root@CloudProx5:~# pveversion -v

proxmox-ve: 8.3.0 (running kernel: 6.8.12-4-pve)

pve-manager: 8.3.0 (running version: 8.3.0/c1689ccb1065a83b)

proxmox-kernel-helper: 8.1.0

pve-kernel-5.15: 7.4-15

proxmox-kernel-6.8: 6.8.12-4

proxmox-kernel-6.8.12-4-pve-signed: 6.8.12-4

proxmox-kernel-6.8.12-2-pve-signed: 6.8.12-2

pve-kernel-5.15.158-2-pve: 5.15.158-2

ceph-fuse: 16.2.11+ds-2

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown2: 3.2.0-1+pmx11

libjs-extjs: 7.0.0-5

libknet1: 1.28-pve1

libproxmox-acme-perl: 1.5.1

libproxmox-backup-qemu0: 1.4.1

libproxmox-rs-perl: 0.3.4

libpve-access-control: 8.2.0

libpve-apiclient-perl: 3.3.2

libpve-cluster-api-perl: 8.0.10

libpve-cluster-perl: 8.0.10

libpve-common-perl: 8.2.9

libpve-guest-common-perl: 5.1.6

libpve-http-server-perl: 5.1.2

libpve-network-perl: 0.10.0

libpve-rs-perl: 0.9.1

libpve-storage-perl: 8.2.9

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 6.0.0-1

lxcfs: 6.0.0-pve2

novnc-pve: 1.5.0-1

proxmox-backup-client: 3.3.0-1

proxmox-backup-file-restore: 3.3.0-1

proxmox-firewall: 0.6.0

proxmox-kernel-helper: 8.1.0

proxmox-mail-forward: 0.3.1

proxmox-mini-journalreader: 1.4.0

proxmox-offline-mirror-helper: 0.6.7

proxmox-widget-toolkit: 4.3.3

pve-cluster: 8.0.10

pve-container: 5.2.2

pve-docs: 8.3.1

pve-edk2-firmware: 4.2023.08-4

pve-esxi-import-tools: 0.7.2

pve-firewall: 5.1.0

pve-firmware: 3.14-1

pve-ha-manager: 4.0.6

pve-i18n: 3.3.2

pve-qemu-kvm: 9.0.2-4

pve-xtermjs: 5.3.0-3

qemu-server: 8.3.0

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.2.6-pve1
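Comparing this output with the package versions listed at the top of the post, all five listed packages moved to newer releases (for example libpve-rs-perl 0.9.0 -> 0.9.1 and proxmox-widget-toolkit 4.3.1 -> 4.3.3), while the running kernel (6.8.12-4-pve), pve-manager (8.3.0) and pve-cluster (8.0.10) are unchanged. A small Python sketch of that comparison, assuming the two text formats used in this post ("name/suite version" and pveversion's "name: version"); all helper names are hypothetical.

```python
# Hypothetical helpers for comparing package versions (not Proxmox tooling).


def parse_apt_list(text):
    """Map package -> version from lines like 'libpve-rs-perl/stable 0.9.0'."""
    versions = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) >= 2 and "/" in parts[0]:
            versions[parts[0].split("/", 1)[0]] = parts[1]
    return versions


def parse_pveversion(text):
    """Map package -> version from `pveversion -v` lines like 'pve-i18n: 3.3.2'."""
    versions = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(": ")
        if sep and rest.strip():
            # keep only the version, dropping suffixes like "(running kernel: ...)"
            versions[name.strip()] = rest.split()[0]
    return versions


def changed(before, after):
    """Packages present in both maps whose version differs: name -> (old, new)."""
    return {
        pkg: (before[pkg], after[pkg])
        for pkg in sorted(before.keys() & after.keys())
        if before[pkg] != after[pkg]
    }


before = parse_apt_list("""\
libpve-rs-perl/stable 0.9.0
proxmox-backup-client/stable 3.2.9-1
proxmox-widget-toolkit/stable 4.3.1
pve-i18n/stable 3.3.1
""")
after = parse_pveversion("""\
proxmox-ve: 8.3.0 (running kernel: 6.8.12-4-pve)
libpve-rs-perl: 0.9.1
proxmox-backup-client: 3.3.0-1
proxmox-widget-toolkit: 4.3.3
pve-cluster: 8.0.10
pve-i18n: 3.3.2
""")

for pkg, (old, new) in changed(before, after).items():
    print(f"{pkg}: {old} -> {new}")
```

Only packages present in both lists are compared, so anything missing from the shorter list (here pve-cluster) is simply ignored rather than reported.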

The issue looks similar to the bug described in this report:

https://bugzilla.proxmox.com/show_bug.cgi?id=5868

We can provide more logs if needed.
