I am very happy to discuss issues with you here, but I have a problem now. After creating a Linux virtual machine in Proxmox, I allocated 40G of disk space to it, and now I want to check its real-time disk usage. For example, if the system uses 10G, I want to get that approximate value. When I use du -h in the Proxmox shell to get it, there are always some errors and inconveniences. Is there an API endpoint for obtaining the disk usage of a specified virtual machine?

Check the disk usage of a virtual machine

- Thread starter Smith-haokai



Hi,

you can get the disk usage inside a guest using the /qemu/{vmid}/agent/get-fsinfo node endpoint. Though, this will only work if the QEMU guest agent is installed inside the guest!

"When I use du -h to get it in the proxmox shell interface, there are always some errors and inconveniences."

Please elaborate. If there really are errors, we'd like to know!

Hope this helps!

Thank you very much for your answer. When I executed the command, I got some disk information, but it did not include the specific disk usage inside the virtual machine.

If I use du -h to check the disk usage of the virtual machine, the value obtained is not accurate: it is greater than the actual disk usage of the virtual machine.

Normally the output should also contain keys such as total-bytes and used-bytes, but I do not see them in your screenshot. (Also for the future: Please post something like that in code tags, not as screenshot - it will be much more readable!)

Please provide the output of pveversion -v to help investigate this further.

Also, what OS is installed inside the VM? What version of the QEMU guest agent is installed inside the VM?

"If I use du -h to check the disk usage of the virtual machine, the value obtained is not accurate. I find that it will be greater than the actual disk usage of the virtual machine."

Please elaborate. What's the "actual disk usage" in your case, i.e. how did you obtain that value?

Okay. When I use pveversion -v, the output is as follows.

VM: CentOS 7

QEMU guest agent version: qemu-guest-agent-2.12.0-3.el7.x86_64

Code:

proxmox-ve: 8.0.1 (running kernel: 6.2.16-3-pve)

pve-manager: 8.0.3 (running version: 8.0.3/bbf3993334bfa916)

pve-kernel-6.2: 8.0.2

pve-kernel-6.2.16-3-pve: 6.2.16-3

ceph-fuse: 17.2.6-pve1+3

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown2: 3.2.0-1+pmx2

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-3

libknet1: 1.25-pve1

libproxmox-acme-perl: 1.4.6

libproxmox-backup-qemu0: 1.4.0

libproxmox-rs-perl: 0.3.0

libpve-access-control: 8.0.3

libpve-apiclient-perl: 3.3.1

libpve-common-perl: 8.0.5

libpve-guest-common-perl: 5.0.3

libpve-http-server-perl: 5.0.3

libpve-rs-perl: 0.8.3

libpve-storage-perl: 8.0.1

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 5.0.2-4

lxcfs: 5.0.3-pve3

novnc-pve: 1.4.0-2

proxmox-backup-client: 2.99.0-1

proxmox-backup-file-restore: 2.99.0-1

proxmox-kernel-helper: 8.0.2

proxmox-mail-forward: 0.1.1-1

proxmox-mini-journalreader: 1.4.0

proxmox-widget-toolkit: 4.0.5

pve-cluster: 8.0.1

pve-container: 5.0.3

pve-docs: 8.0.3

pve-edk2-firmware: 3.20230228-4

pve-firewall: 5.0.2

pve-firmware: 3.7-1

pve-ha-manager: 4.0.2

pve-i18n: 3.0.4

pve-qemu-kvm: 8.0.2-3

pve-xtermjs: 4.16.0-3

qemu-server: 8.0.6

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.1.12-pve1

Code:

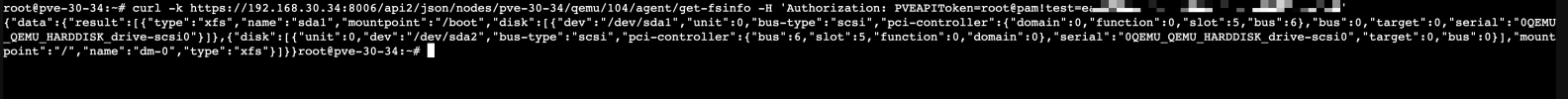

root@pve-30-34:~# curl -k https://192.168.30.34:8006/api2/json/nodes/pve-30-34/qemu/104/agent/get-fsinfo -H 'Authorization: PVEAPIToken=root@pam!test=ea9570db-44d4-4016-974c-b239312557d8'

{"data":{"result":[{"name":"sda1","mountpoint":"/boot","disk":[{"pci-controller":{"domain":0,"bus":6,"slot":5,"function":0},"bus":0,"serial":"0QEMU_QEMU_HARDDISK_drive-scsi0","target":0,"bus-type":"scsi","dev":"/dev/sda1","unit":0}],"type":"xfs"},{"disk":[{"serial":"0QEMU_QEMU_HARDDISK_drive-scsi0","target":0,"bus":0,"pci-controller":{"slot":5,"function":0,"bus":6,"domain":0},"bus-type":"scsi","unit":0,"dev":"/dev/sda2"}],"mountpoint":"/","name":"dm-0","type":"xfs"}]}}

> apt install jq

> qm agent 100 get-fsinfo | jq -r '.[] | select(has("total-bytes")) | .mountpoint + " is " + ((."used-bytes" / ."total-bytes" * 100) | round | tostring) + "% full"'

Example output for a *nix VM:

/var/log/journal is 67% full

/mnt/data is 67% full

/tmp is 1% full

Example output for a Windows VM:

C:\ is 67% full
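The API response posted earlier in the thread wraps the list in data.result and contains no total-bytes or used-bytes keys at all (possibly because of the older guest agent in that CentOS 7 VM), so the one-liner above would print nothing for it. Below is a minimal sketch of a more forgiving variant. The function name fs_usage_report and the wording of the fallback message are made up for illustration, and it assumes jq is installed.

```shell
# fs_usage_report: read get-fsinfo JSON on stdin and print one line per filesystem.
# Accepts the bare array that `qm agent <vmid> get-fsinfo` prints as well as the
# {"data":{"result":[...]}} wrapper returned by the REST API.
fs_usage_report() {
    jq -r '
        (if type == "object" then .data.result else . end)[]
        | if has("total-bytes") and has("used-bytes") and ."total-bytes" > 0
          then .mountpoint + " is "
               + ((."used-bytes" / ."total-bytes" * 100) | round | tostring)
               + "% full"
          else .mountpoint + ": usage not reported by the guest agent"
          end
    '
}
```

Usage would look like `qm agent 100 get-fsinfo | fs_usage_report`, or the same pipe after the curl call against the API. Reporting the filesystems that lack usage data, instead of silently dropping them, makes it easier to spot a guest agent that does not return those keys.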

But it would still be nice if the Proxmox web gui would show this in each VM's main status window.

I would second this

Played with your script. Made it work for all running VMs on the host and skipped the snap-mounted ones:

for vmid in $(qm list | awk '$3 == "running" {print $1}'); do
    # Header row plus the matching VM row from qm list
    qm list | awk -v id="$vmid" 'NR == 1 || $1 == id'
    # Usage per filesystem, leaving out snap mounts
    qm agent "$vmid" get-fsinfo | jq -r '.[] | select(has("total-bytes")) | .mountpoint + " is " + ((."used-bytes" / ."total-bytes" * 100) | round | tostring) + "% full"' | grep -v snap
    echo
done

I'd like to +1 this request. When I view a VM in PVE 8.4 it shows me "bootdisk size" but I see no reason it shouldn't list the disks qemu agent reports with bar graphs for how full they each are, similar to how it displays for LXC containers.

(It would be even more useful if there could be a graph that shows disk usage over time too.)

Apologies if this is already in PVE 9, I am waiting to upgrade my nodes

edit: I found this bug/feature request open for this: https://bugzilla.proxmox.com/show_bug.cgi?id=1373


This would be a great feature

Thanks for referencing that bug report, @smalltrex! That bug's been open since 2017. ZFS didn't even allow that kind of filesystem query then, so hopefully we're in a better position now for this feature to become reality.

I just updated the bug report with a reference to this thread and @MacGuyver's sample code.

+1 to get the VM disk data into proxmox dashboard as I do not see it in PVE 9.0.6 yet.

I am also for this functionality, +1. It would be very convenient and cool to see this function in the near future.

I thought it was a bug because it's so straightforward to have information about disk usage. I mean, especially for VMs that share disks you want to know when one is running out of space.

ZFS in particular can complicate things.

There's the space that a dataset or pool has, and underneath those datasets or pools there might be other datasets or pools that have their own dedicated space.

Thin vs thick provisioning also makes a difference, and surely other factors I'm not aware of.

It's not an unsurmountable task, but unfortunately it is nontrivial to do well.
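To illustrate the thin-provisioning point, here is a small sketch, assuming GNU coreutils (stat -c, truncate) on the host. A sparse file stands in for a thin-provisioned raw disk image; the helper names apparent_bytes and allocated_bytes are invented for this example. It only shows that a file's logical size and its allocated space are two different numbers. It is also worth keeping in mind that host-side tools such as du count allocated blocks, which can stay allocated after the guest deletes files unless discard/trim is in use, so host-side figures can exceed what the guest itself reports as used.

```shell
# apparent_bytes FILE: the file's logical size (what the guest sees as disk size).
apparent_bytes() {
    stat -c %s "$1"
}

# allocated_bytes FILE: space actually allocated on the host.
# %b is the number of allocated blocks and %B the size of each block in bytes.
allocated_bytes() {
    echo $(( $(stat -c %b "$1") * $(stat -c %B "$1") ))
}

# Demo: a sparse 100 MiB file stands in for a thin-provisioned raw disk image.
img=$(mktemp)
truncate -s 100M "$img"
echo "apparent:  $(apparent_bytes "$img") bytes"
echo "allocated: $(allocated_bytes "$img") bytes"
rm -f "$img"
```

Neither number says how full the filesystems inside the guest are, which is why the guest agent route discussed in this thread is needed for that.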

I think it might be easier to have something like qemu-guest-agent within a VM periodically report out the output of something like `df` for each virtual disk. But even then, that would never be live, and depending on the disk contents might cause slowdowns, which would make it a bad idea on certain VMs or LXCs.

"and depending on the disk contents might cause slowdowns"

I've never seen `df` being slow. `du` sure, but not `df`. I don't have petabytes of data though. When or why would this happen?

I was literally just hedging there.

I have no idea how `df` and `du` work on massive filesystems with a ton of small files, or whatever other configurations people's PVE servers might be in.

I do know from experience that grabbing stats from a busy filesystem is going to slow something down at some point under the right (wrong) conditions. I was trying to make the point that if this is implemented as a feature, it'll have to be balanced against the PVE dev team's need not to introduce (semi-)frequent slowdown into their system.

A big part of the complexity here is that people run on different storage architectures. A lot of VM and LXC stores might be on a mirror pair of SATA SSDs … or HDDs, or slow but reliable network storage. Trying to stat filesystems like that could be a disaster if it's done in a blocking way.
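As a rough illustration of the "don't block" concern, the sketch below wraps `df` in `timeout` so that a slow filesystem yields "unavailable" instead of stalling the caller. The function name probe_usage and the time limit are arbitrary choices for this example, not anything PVE does. It is also not a complete answer: a process stuck in uninterruptible I/O (for instance on a hung network mount) may not react to the signal that `timeout` sends.

```shell
# probe_usage SECONDS PATH: print "used_kib total_kib" for the filesystem holding PATH,
# or print "unavailable" and return 1 if df fails or does not finish within SECONDS.
probe_usage() {
    local limit="$1" path="$2" out
    if out=$(timeout "$limit" df -Pk "$path" 2>/dev/null); then
        # Line 1 is the header; line 2 holds: filesystem, 1024-blocks, used, available, ...
        printf '%s\n' "$out" | awk 'NR == 2 {print $3, $2}'
    else
        echo "unavailable"
        return 1
    fi
}
```

For example, `probe_usage 5 /mnt/data` would give up after five seconds. The POSIX output format (`-P`) keeps each filesystem on one line, which makes the awk field positions predictable.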