I'm running a ZFS RAID-Z3 pool on a Proxmox node that serves as a backup host. I create snapshots on several other Proxmox hosts, which use ZFS mirror pools, and pull them to the backup node.

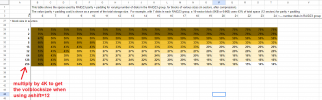

I noticed that the same zvol snapshots occupy almost twice as much storage space on the backup node as on the source server. I have read in several places that this is caused by the `volblocksize` in combination with `ashift=12`, but I still don't understand how I can mitigate it or what my best option is.
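A minimal sketch of that interaction follows. It assumes the allocation rule used by OpenZFS's `vdev_raidz_asize()`: each block is split into `2^ashift`-byte sectors, every stripe row of data gets `nparity` parity sectors, and the total is padded up to a multiple of `nparity + 1` sectors. The 8-disk RAID-Z3 width is an assumption (the actual disk count isn't stated here), compression and metadata are ignored, and `raidz_alloc` is a hypothetical helper name.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division."""
    return -(-a // b)


def raidz_alloc(psize: int, ashift: int, ndisks: int, nparity: int) -> dict:
    """Sector breakdown of one block on a single RAID-Z vdev.

    Follows the arithmetic of OpenZFS vdev_raidz_asize(); compression,
    gang blocks and metadata are ignored.
    """
    sector = 1 << ashift
    data = ceil_div(psize, sector)                       # data sectors
    parity = nparity * ceil_div(data, ndisks - nparity)  # nparity sectors per stripe row
    # Pad the allocation up to a multiple of (nparity + 1) sectors.
    total = ceil_div(data + parity, nparity + 1) * (nparity + 1)
    return {
        "data": data,
        "parity": parity,
        "padding": total - data - parity,
        "allocated_bytes": total * sector,
    }


if __name__ == "__main__":
    # Assumed layout: 8-disk RAID-Z3 with ashift=12 (4 KiB sectors).
    for vbs_kib in (8, 16, 128):
        r = raidz_alloc(vbs_kib * 1024, ashift=12, ndisks=8, nparity=3)
        print(f"{vbs_kib:>3}K block -> {r}")
```

Under these assumptions an 8K block takes 32 KiB of raw space (2 data, 3 parity and 3 padding sectors), the same as a 16K block, while a 128K block, the default `recordsize` for filesystems, loses only 3 of its 56 sectors to padding.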

I'm aware that this issue does not occur with ZFS filesystems (datasets), and that backups made with PBS are not affected either. My question, however, is specifically about transferring existing zvols from a ZFS mirror pool to a RAID-Z3 pool.

Here are my requirements and considerations:

- I want to maximize data safety, especially fault tolerance. The pool should stay online even if at least two disks fail at the same time.

- I'm willing to sacrifice storage space, which is why I chose RAID-Z3. But being able to use only half of the space that remains after subtracting three disks for parity is not acceptable to me.

Would switching from RAID-Z3 to RAID-Z2 improve the situation? If so, by how much?
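A rough way to estimate the effect of the parity level is sketched below. It assumes, as far as I understand OpenZFS's space accounting, that the used space reported for a RAID-Z vdev is the raw allocation scaled by a "deflate ratio" derived from a 128K block, so smaller blocks show up as larger than their logical size. The 8-disk width, `ashift=12` and the list of volblocksizes are assumptions, the integer rounding of the real deflate ratio is ignored, and `raidz_asize`/`apparent_inflation` are hypothetical helper names.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division."""
    return -(-a // b)


def raidz_asize(psize: int, ashift: int, ndisks: int, nparity: int) -> int:
    """Raw bytes allocated for one block (rule from OpenZFS vdev_raidz_asize())."""
    data = ceil_div(psize, 1 << ashift)
    total = data + nparity * ceil_div(data, ndisks - nparity)
    total = ceil_div(total, nparity + 1) * (nparity + 1)
    return total << ashift


def apparent_inflation(vbs: int, ashift: int, ndisks: int, nparity: int) -> float:
    """Reported used space divided by logical size for one uncompressed block.

    Assumption: reported space = raw allocation * (128K / raw allocation of 128K).
    """
    ref = 128 * 1024
    deflate = ref / raidz_asize(ref, ashift, ndisks, nparity)
    return raidz_asize(vbs, ashift, ndisks, nparity) * deflate / vbs


if __name__ == "__main__":
    NDISKS, ASHIFT = 8, 12  # assumed pool layout
    for vbs_kib in (8, 16, 32, 64, 128):
        z2 = apparent_inflation(vbs_kib * 1024, ASHIFT, NDISKS, 2)
        z3 = apparent_inflation(vbs_kib * 1024, ASHIFT, NDISKS, 3)
        print(f"volblocksize {vbs_kib:>3}K: RAID-Z2 x{z2:.2f}  RAID-Z3 x{z3:.2f}")
```

With these assumed values, RAID-Z2 versus RAID-Z3 at a volblocksize of 8K comes out at roughly x2.13 versus x2.29, and at 16K at roughly x1.07 versus x1.14, so under this model the volblocksize matters far more than the parity level. The figures change with the vdev width and would need to be recomputed for the real disk count.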